We recently launched a new Async Audio API that you can use to process audio files and generate transcriptions and insights such as topics, action items, follow-ups, and questions. In this blog post, we will show you how to use the Async Audio API in your React application.

Requirements

Before we get started, make sure you have:

- A Symbl account

- Node >= 8.10 and npm >= 5.6 for React’s dependencies

- react >= 16.8.0 and react-dom >= 16.8.0 for Material-UI’s peer dependencies

Set Up the React Project

To create a project and install the Material-UI component library (plus @material-ui/icons and clsx, which the components below also import), run:

```bash
npx create-react-app async-audio-app
cd async-audio-app
npm install @material-ui/core @material-ui/icons clsx
npm start
```

Then go to the ./src folder and delete all files except index.js and App.js. Remove the imports of the deleted files from both, and make App.js return “Hello World” to test the application:

./src/index.js

```jsx
import React from 'react';
import ReactDOM from 'react-dom';
import App from './App';

ReactDOM.render(
  <App />,
  document.getElementById('root')
);
```

./src/App.js

```jsx
import React from 'react';

function App() {
  return (
    <div>
      <h1>Hello World</h1>
    </div>
  );
}

export default App;
```

If you go to http://localhost:3000 in the browser, you should see “Hello World”.

Getting Started

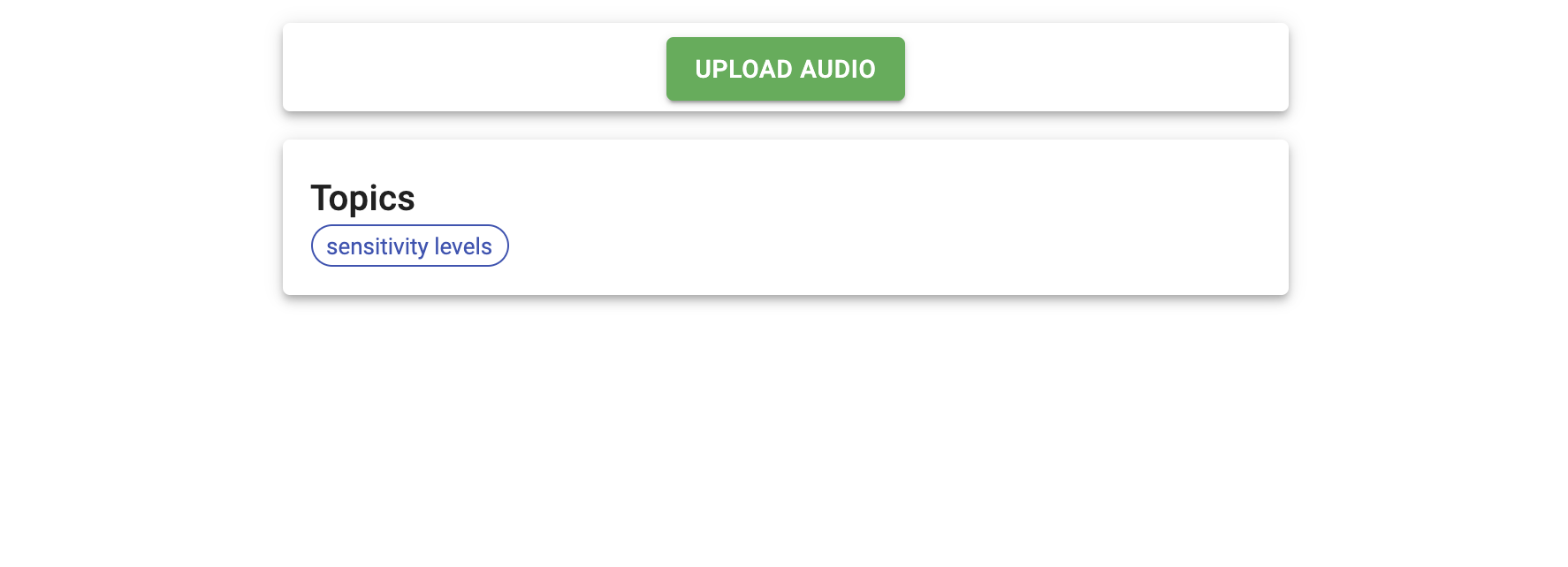

We will create a React app that uploads an audio file and displays the topics detected in it. The app uses three Symbl APIs: the Async Audio API to submit the audio file for processing, the Job API to check the status of that processing job, and the Conversation API to retrieve the topics.
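Before wiring anything into React, it can help to see the three requests side by side. The sketch below is framework-agnostic and purely illustrative: the helper names are hypothetical, while the URLs, the x-api-key header, and the audio/wave content type mirror the hooks.js files used later in this tutorial.

```javascript
// Hypothetical helpers describing the three Symbl requests this app makes.
// URLs and headers mirror the hooks.js files in this tutorial.
const BASE_URL = 'https://api.symbl.ai/v1';

// 1. Async Audio API: submit the raw audio bytes for processing
function processAudioRequest(token, audioBody, contentType) {
  return {
    url: `${BASE_URL}/process/audio`,
    init: {
      method: 'POST',
      headers: { 'x-api-key': token, 'Content-Type': contentType },
      body: audioBody,
    },
  };
}

// 2. Job API: check the status of the processing job
function jobStatusRequest(token, jobId) {
  return {
    url: `${BASE_URL}/job/${jobId}`,
    init: { method: 'GET', headers: { 'x-api-key': token } },
  };
}

// 3. Conversation API: fetch the topics once the job has completed
function topicsRequest(token, conversationId) {
  return {
    url: `${BASE_URL}/conversations/${conversationId}/topics`,
    init: { method: 'GET', headers: { 'x-api-key': token } },
  };
}
```

Each helper returns a `{ url, init }` pair that can be passed straight to `fetch(url, init)`. The Job ID and the Conversation ID both come from the response to the first request.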

To keep the project organized, create a components folder inside ./src with the following folders and files:

```
src
├── components
│   ├── Audio
│   │   ├── Audio.js
│   │   ├── index.js
│   │   ├── hooks.js
│   │   └── style.js
│   └── Topics
│       ├── Topics.js
│       ├── index.js
│       ├── hooks.js
│       └── style.js
├── index.js
├── App.js
├── store.js
└── auth.json
```

Add the following to each component's index.js file:

.../Audio/index.js

```jsx
export { default } from './Audio';
```

.../Topics/index.js

```jsx
export { default } from './Topics';
```

In this tutorial, we will not request the access token from a server. Instead, we hardcode a temporary access token in auth.json. This is only suitable for local testing, because everything in auth.json ends up in the browser bundle. Replace [ACCESS_TOKEN] with the access token returned by the POST Authentication request, made with the appId and appSecret from your Symbl Platform account. You can use the Postman app to generate this access token, as shown in this YouTube video.
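If you would rather generate the token from a script than from Postman, a minimal sketch follows. The endpoint `https://api.symbl.ai/oauth2/token:generate` and the `type`/`appId`/`appSecret` body fields are assumptions based on Symbl's authentication docs at the time of writing, so verify them against the current API reference; the function names are hypothetical. Run it from Node 18+ (which has a global `fetch`), never from the browser, so your appSecret stays off the client.

```javascript
// Hypothetical helper that builds the POST Authentication request.
// ASSUMPTION: endpoint URL and body fields follow Symbl's auth docs; verify them.
function buildTokenRequest(appId, appSecret) {
  return {
    url: 'https://api.symbl.ai/oauth2/token:generate',
    init: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({ type: 'application', appId, appSecret }),
    },
  };
}

// Hypothetical one-off script helper: requests a token and returns it so you
// can paste it into auth.json. Requires a runtime with a global fetch.
async function generateToken(appId, appSecret) {
  const { url, init } = buildTokenRequest(appId, appSecret);
  const response = await fetch(url, init);
  if (!response.ok) {
    throw new Error(`Token request failed with status ${response.status}`);
  }
  const { accessToken } = await response.json();
  return accessToken;
}
```

Keeping the request construction separate from the network call makes it easy to inspect what will be sent before you spend a real request.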

./src/auth.json

```json
{
  "accessToken": "[ACCESS_TOKEN]"
}
```

Building The App

Initialize Context Store

We create store.js to hold our global state using React’s Context API. We need the Context API because the Topics component should only make an API request when the Audio component produces a new id, and since Audio and Topics are siblings, neither can pass state to the other through props.

Without context for global state management, we would have to render the Topics component inside the Audio component and pass the state down as props. While this would also work, it adds an extra level of nesting and ties Topics to Audio, which is not good practice.

Create idContext, loadingContext, and successContext in the store.js file:

./src/store.js

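```jsx
import React, { useState, createContext } from 'react';

export const idContext = createContext();
export const loadingContext = createContext();
export const successContext = createContext();

function Store(props) {
  const [id, setId] = useState(null);
  const [loading, setLoading] = useState(false);
  const [success, setSuccess] = useState(false);

  // Each provider exposes a [value, setter] pair, which is how the
  // components read it, e.g. const [id, setId] = useContext(idContext)
  return (
    <idContext.Provider value={[id, setId]}>
      <loadingContext.Provider value={[loading, setLoading]}>
        <successContext.Provider value={[success, setSuccess]}>
          {props.children}
        </successContext.Provider>
      </loadingContext.Provider>
    </idContext.Provider>
  );
}

export default Store;
```

Wrap <App/> with <Store/> inside the index.js file.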

./src/index.js

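```jsx
import React from 'react';
import ReactDOM from 'react-dom';
import App from './App';
import Store from './store';

ReactDOM.render(
  <Store>
    <App />
  </Store>,
  document.getElementById('root')
);
```

Creating the UI for Uploading Audio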

Let’s add a button to the Audio component that opens a file picker for the audio file. Write the following code in the Audio.js file.

.../Audio/Audio.js

```jsx
import React, { useRef, useState, useContext } from 'react';
import clsx from 'clsx';
import { Paper, Box, Button, CircularProgress } from '@material-ui/core';
import { loadingContext, successContext } from '../../store';
import useAudioAsyncAPI from './hooks';
import { useStyles } from './style';

export default function Audio() {
  const inputRef = useRef();
  const classes = useStyles();
  const [file, setFile] = useState();
  const [loading, setLoading] = useContext(loadingContext);
  const [success, setSuccess] = useContext(successContext);

  // Sends the selected file to the Async Audio API whenever `file` changes
  useAudioAsyncAPI(file);

  const buttonClassname = clsx({
    [classes.buttonSuccess]: success,
  });

  function handleButtonClick() {
    // Open the hidden file picker
    inputRef.current.click();
  }

  function handleFileChange() {
    const selected = inputRef.current.files[0];
    // Only start loading once a file is actually chosen, so cancelling
    // the dialog does not leave the spinner running
    if (!selected || loading) return;
    setSuccess(false);
    setLoading(true);
    setFile(selected);
  }

  return (
    <Paper>
      <Box p={2}>
        <div className={classes.wrapper}>
          <input
            id="input"
            accept="audio/mpeg, audio/wav, audio/wave"
            type="file"
            onChange={handleFileChange}
            ref={inputRef}
            style={{ display: 'none' }}
          />
          <Button
            variant="contained"
            color="primary"
            className={buttonClassname}
            disabled={loading}
            onClick={handleButtonClick}
          >
            Upload Audio
          </Button>
          {loading && (
            <CircularProgress size={24} className={classes.buttonProgress} />
          )}
        </div>
      </Box>
    </Paper>
  );
}
```

In the code above, the <Button/> has an onClick handler that triggers a click on the hidden <input/> element through React’s useRef, which opens the browser’s file picker. The input’s accept attribute limits the picker to the audio MIME types “audio/mpeg”, “audio/wav”, and “audio/wave”.

The <input/> element’s onChange handler stores the selected file in the file state. Note that we read inputRef.current.files[0], which is a File object holding the actual audio data, rather than the input’s value, which browsers only expose as a masked path string such as “C:\fakepath\audiofilename.wav”.

While the loading state is true, a <CircularProgress/> spinner is rendered over the button until the API calls in the custom hook useAudioAsyncAPI complete.
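The accept attribute only filters what the file dialog shows; users can still switch the dialog to “All files” and pick something else. A minimal guard you could call in the input’s onChange handler before storing the file is sketched below. The function and constant names are hypothetical, and the type string comes from the browser, so it can vary across platforms.

```javascript
// Hypothetical guard: the accept attribute is only a hint to the file dialog,
// so re-check the browser-reported MIME type before uploading.
const ACCEPTED_AUDIO_TYPES = ['audio/mpeg', 'audio/wav', 'audio/wave'];

// Works with a browser File object or any object that has a `type` string.
function isAcceptedAudio(file) {
  return Boolean(file) && ACCEPTED_AUDIO_TYPES.includes(file.type);
}
```

For example, `isAcceptedAudio({ type: 'video/mp4' })` returns `false`, so the handler can return early instead of sending a video file to the Async Audio API.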

Fetch Async Audio API and Job API in useEffect

Now let’s write the requests with fetch inside React’s useEffect in the hooks.js file.

.../Audio/hooks.js

```jsx
import { useEffect, useContext } from 'react';
import auth from '../../auth.json';
import { idContext, loadingContext, successContext } from '../../store';

// Resolves after `ms` milliseconds; used to space out Job API checks
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

export default function useAudioAsyncAPI(file) {
  const [id, setId] = useContext(idContext);
  const [, setLoading] = useContext(loadingContext);
  const [, setSuccess] = useContext(successContext);

  useEffect(() => {
    if (!file) return undefined;
    const controller = new AbortController();
    const headers = { 'x-api-key': auth.accessToken };

    async function fetchData() {
      // 1. Submit the audio file to the Async Audio API
      const audioResponse = await fetch('https://api.symbl.ai/v1/process/audio', {
        method: 'POST',
        headers: { ...headers, 'Content-Type': 'audio/wave' },
        body: file,
        signal: controller.signal,
      });
      if (!audioResponse.ok) throw new Error(`Async Audio API returned ${audioResponse.status}`);
      const { jobId, conversationId } = await audioResponse.json();

      // 2. Check the Job API every few seconds until the job finishes
      let status;
      do {
        await sleep(3000);
        const jobResponse = await fetch(`https://api.symbl.ai/v1/job/${jobId}`, {
          method: 'GET',
          headers,
          signal: controller.signal,
        });
        if (!jobResponse.ok) throw new Error(`Job API returned ${jobResponse.status}`);
        ({ status } = await jobResponse.json());
      } while (status !== 'completed' && status !== 'failed');

      // 3. On success, publish the Conversation ID for the Topics component
      if (status === 'completed') {
        setId(conversationId);
        setSuccess(true);
      }
      setLoading(false);
    }

    fetchData().catch((err) => {
      // AbortErrors come from the cleanup below and are expected
      if (err.name !== 'AbortError') {
        console.log(err.message);
        setLoading(false);
      }
    });

    // Cancel in-flight requests if the file changes or the component unmounts
    return () => controller.abort();
  }, [file]);

  return id;
}
```

There are two API requests here. The first is a POST request to Symbl’s Async Audio API endpoint that submits the uploaded audio file for processing. The Async Audio API responds with a Conversation ID and a Job ID.
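The POST request in this hook always sends `'Content-Type': 'audio/wave'`, even when the user picks an MP3. A minimal sketch of deriving the header from the selected file instead is shown below. It assumes the Async Audio API accepts `audio/mpeg` for MP3 uploads (check Symbl’s docs for the exact list of supported values) and that some browsers may report MP3 files as `audio/mp3`; the helper name is hypothetical.

```javascript
// Hypothetical helper: choose the Async Audio API Content-Type from the
// browser-reported MIME type of the selected file.
// ASSUMPTION: Symbl accepts audio/mpeg for MP3 uploads; verify in the API docs.
const MP3_TYPES = ['audio/mpeg', 'audio/mp3'];

function audioContentType(file) {
  // Anything that is not recognizably MP3 keeps the tutorial's original header
  return file && MP3_TYPES.includes(file.type) ? 'audio/mpeg' : 'audio/wave';
}
```

In the hook, the header would then read `'Content-Type': audioContentType(file)`. Falling back to `audio/wave` keeps the original behavior for WAV files and for files whose type the browser leaves empty.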

We can use the returned Job ID to check the progress of the Async Audio job. The second API request is a GET request to Symbl’s Job API endpoint, made repeatedly, that returns the status of the Async Audio job, such as ‘in_progress’ or ‘completed’. Once the Job API returns ‘completed’, we update the success state to true and the loading state to false.
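To reason about the polling loop outside of React, here is a minimal framework-agnostic sketch. `getStatus` is any async function that resolves to a Job API status string; `pollJob`, `intervalMs`, and `maxAttempts` are hypothetical names with illustrative defaults, not values documented by Symbl. It assumes the Job API reports a ‘failed’ status for jobs that error out, and it adds an attempt cap so a stuck job cannot keep the loop running forever.

```javascript
// Resolves after `ms` milliseconds
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical polling helper: calls getStatus() until it reports a terminal
// state, waiting intervalMs between calls, and gives up after maxAttempts.
async function pollJob(getStatus, { intervalMs = 3000, maxAttempts = 100 } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt += 1) {
    const status = await getStatus();
    // 'completed' and (assumed) 'failed' are the terminal states
    if (status === 'completed' || status === 'failed') return status;
    // Still running (e.g. 'in_progress'): wait before asking again
    if (attempt < maxAttempts) await sleep(intervalMs);
  }
  throw new Error(`Job still running after ${maxAttempts} checks`);
}
```

In the app, `getStatus` would wrap the Job API call, for example `async () => (await (await fetch(url, init)).json()).status`. Waiting between checks avoids firing requests back to back while the audio is still being processed.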

Finally, we update the global id state with the new Conversation ID returned by the Async Audio API, which is now ready to be used with Symbl’s Conversation API.

To complete the Audio component, let’s add some styling:

.../Audio/style.js

```jsx
import { makeStyles } from '@material-ui/core/styles';
import { green } from '@material-ui/core/colors';

export const useStyles = makeStyles((theme) => ({
  wrapper: {
    // Shrink-wrap the button so the absolutely positioned spinner centers on it
    display: 'inline-block',
    position: 'relative',
  },
  buttonSuccess: {
    backgroundColor: green[500],
    '&:hover': {
      backgroundColor: green[700],
    },
  },
  fabProgress: {
    color: green[500],
    position: 'absolute',
    top: -6,
    left: -6,
    zIndex: 1,
  },
  buttonProgress: {
    color: green[500],
    position: 'absolute',
    top: '50%',
    left: '50%',
    marginTop: -12,
    marginLeft: -12,
  },
}));
```

Creating the UI for Generated Topics

Of course, we will need to create a UI to display the topics generated from the Conversation API. We will use <Chip/> from Material-UI to display each topic.

.../Topics/Topics.js

```jsx
import React, { useContext } from 'react';
import { Paper, Typography, Chip, Box } from '@material-ui/core';
import LabelIcon from '@material-ui/icons/Label';
import useConversationAPI from './hooks';
import { idContext } from '../../store';

export default function Topics() {
  const [id] = useContext(idContext);
  // Each item is an [id, type, text] array built in hooks.js
  const topics = useConversationAPI(id);

  return (
    <Paper>
      <Box p={2}>
        <Typography variant="h6">Topics</Typography>
        {topics.map(([topicId, , text]) => (
          <Box key={topicId} component="span" m={0.5}>
            <Chip icon={<LabelIcon />} label={text} color="primary" />
          </Box>
        ))}
      </Box>
    </Paper>
  );
}
```

Fetch Conversation API in useEffect

Whenever the id state changes, we make a request to Symbl’s Conversation API using the Conversation ID set by the useAudioAsyncAPI hook. Let’s write the fetch request that retrieves the topics:

.../Topics/hooks.js

```jsx
import { useState, useEffect } from 'react';
import auth from '../../auth.json';

export default function useConversationAPI(id) {
  const [data, setData] = useState([]);

  useEffect(() => {
    if (!id) return undefined;
    const controller = new AbortController();

    fetch(`https://api.symbl.ai/v1/conversations/${id}/topics`, {
      method: 'GET',
      headers: { 'x-api-key': auth.accessToken },
      signal: controller.signal, // lets the cleanup below cancel the request
    })
      .then((response) => response.json())
      .then((json) => {
        if (json.topics) {
          // Keep only the fields the UI needs, as [id, type, text] arrays
          setData(json.topics.map((topic) => [topic.id, topic.type, topic.text]));
        }
      })
      .catch((err) => {
        if (err.name !== 'AbortError') console.log(err.message);
      });

    return () => controller.abort();
  }, [id]);

  return data;
}
```

In the code above, once id is set, we call the GET Topics endpoint of Symbl’s Conversation API, passing the Conversation ID in the URL. The response contains the topics generated from the uploaded audio file.
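The transformation inside useConversationAPI can be pulled out into a plain function so it is testable without React or the network. The sketch below uses a hypothetical name, `toTopicRows`, and also guards against responses that have no `topics` array, such as error payloads.

```javascript
// Hypothetical pure version of the mapping done in useConversationAPI:
// turns a GET Topics response into [id, type, text] arrays for the chips.
function toTopicRows(response) {
  // Error payloads (e.g. an expired token) have no topics array
  if (!response || !Array.isArray(response.topics)) return [];
  return response.topics.map((topic) => [topic.id, topic.type, topic.text]);
}
```

With a made-up response trimmed to the three fields the hook reads, `toTopicRows({ topics: [{ id: 't1', type: 'topic', text: 'pricing' }] })` returns `[['t1', 'topic', 'pricing']]`, and `toTopicRows({ message: 'Unauthorized' })` returns `[]`.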

We can delete .../Topics/style.js since we don’t need it.

Rendering Audio and Topics components

We only want to render the Topics component after the audio has been uploaded and processed. To do this, we again use the global id state and render Topics conditionally with &&. We can also remove the previous “Hello World” heading.

./src/App.js

```jsx
import React, { useContext } from 'react';
import Audio from './components/Audio';
import Topics from './components/Topics/Topics';
import { idContext } from './store';

function App() {
  const [id] = useContext(idContext);

  return (
    <div>
      <Audio />
      {id && <Topics />}
    </div>
  );
}

export default App;
```

Let’s add a responsive layout with padding using Material-UI’s <Grid/> and <Box/>.

./src/App.js

```jsx
import React, { useContext } from 'react';
import { Grid, Box } from '@material-ui/core';
import Audio from './components/Audio';
import Topics from './components/Topics/Topics';
import { idContext } from './store';

function App() {
  const [id] = useContext(idContext);

  return (
    <div>
      <Grid container>
        {/* Empty spacer column that offsets the content on sm screens and up */}
        <Grid item xs={false} sm={2} />
        <Grid item xs={12} sm={8}>
          <Box padding={1}>
            <Audio />
          </Box>
          <Box padding={1}>
            {id && <Topics />}
          </Box>
        </Grid>
      </Grid>
    </div>
  );
}

export default App;
```

Run The App

And that’s it! You now have a working React app that takes an audio file (in mono audio format), processes it, shows a loading indicator while it is being processed, and displays the topics detected in the conversation. Go to http://localhost:3000 to view the running app and test it.
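Since the app expects mono audio, you may want to catch stereo WAV files before uploading them. The sketch below reads the channel count from a WAV header. It assumes the canonical layout where the “fmt ” chunk immediately follows “WAVE”, so NumChannels sits at byte offset 22; files with extra chunks before “fmt ” would need a proper chunk walk, and MP3 files are not handled at all. The function name is hypothetical.

```javascript
// Hypothetical helper: read NumChannels from a canonical WAV header.
// ASSUMPTION: "fmt " is the first chunk after "WAVE" (offset 12), which puts
// the little-endian uint16 channel count at byte offset 22.
function wavChannelCount(arrayBuffer) {
  const view = new DataView(arrayBuffer);
  // Reads a 4-character ASCII chunk tag starting at `offset`
  const tag = (offset) =>
    String.fromCharCode(...new Uint8Array(arrayBuffer, offset, 4));

  if (view.byteLength < 24 || tag(0) !== 'RIFF' || tag(8) !== 'WAVE' || tag(12) !== 'fmt ') {
    return null; // not a canonical WAV header
  }
  return view.getUint16(22, true);
}
```

In the browser you could call `wavChannelCount(await file.arrayBuffer())` in the input’s onChange handler and warn the user when the result is greater than 1.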

You can find the full open-source code for this app on our GitHub.

Check out our API docs if you want to customize this integration further using the APIs that Symbl provides.