Build Real time AI for

Symbl.ai enables enterprises to build real-time AI Agents, orchestrate multimodal experiences, and drive analytics from voice, video, and chat conversations — at scale.

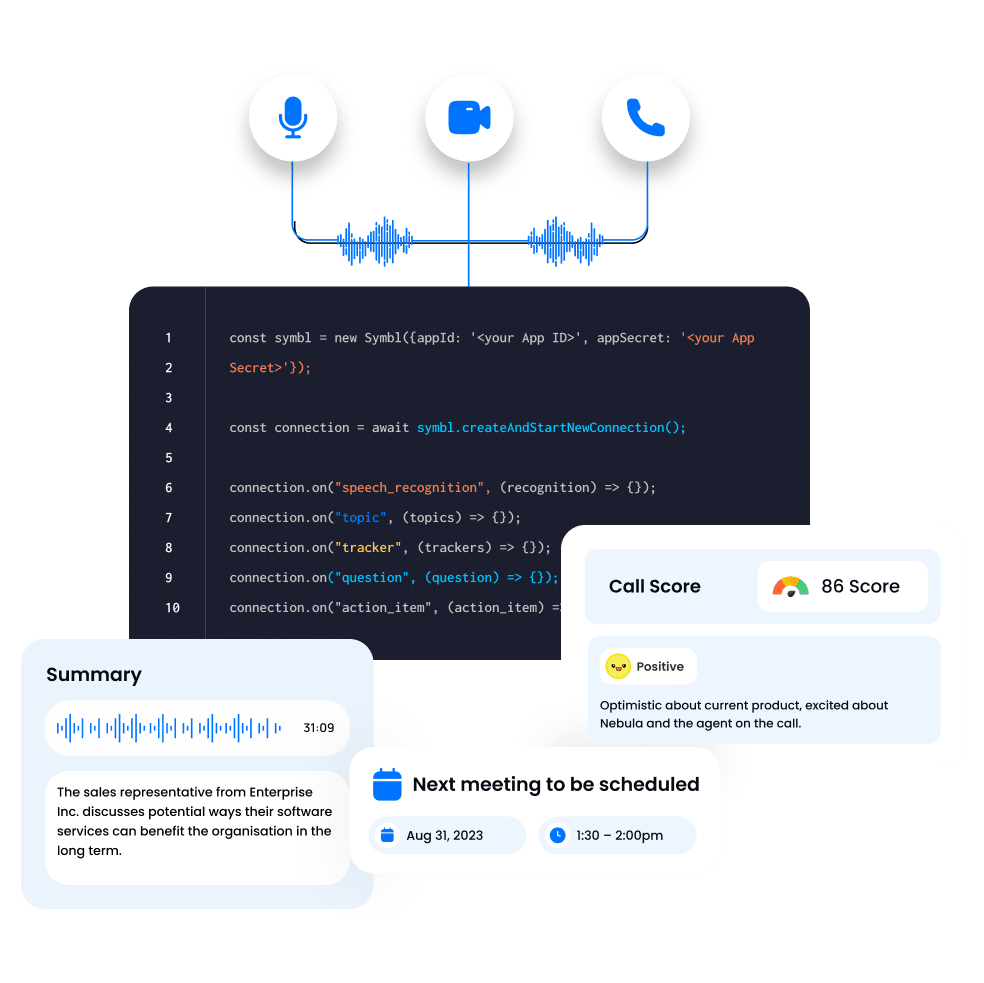

Tap into multimodal interactions in real time

AI Teams

Fine-tune and build real-time RAG use cases with Nebula LLM and Embeddings

Product Teams

Build live experiences in your products and workflows, such as voice bots or live assist for specific roles, using low-code APIs and SDKs

Revenue Teams

Get real-time notifications on customer churn signals and business growth opportunities

Data Teams

Uncover patterns and predictions by combining conversation metadata with the rest of your data pipeline.

Why Symbl

- Specialized LLM and Models: Nebula is purpose-built for understanding and generating empathetic human conversations in real time across voice and text channels

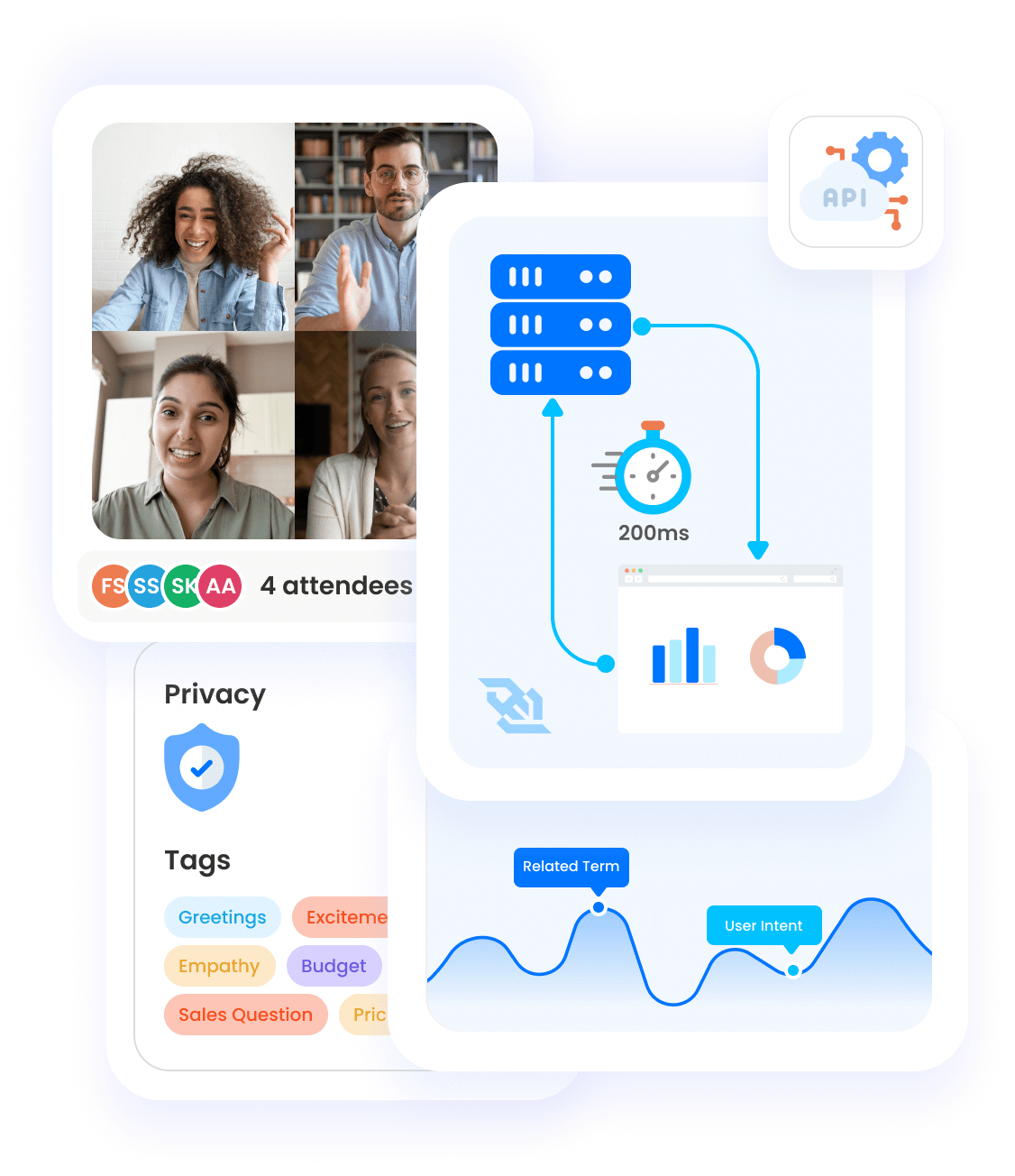

- Multi-Agent Platform: Create and deploy interconnected AI agents that collaborate to solve complex communication challenges within your business context

- Out-of-the-Box Solutions: Ready-made agents and experiences for product builders to quickly implement and customize for specific CX workflows

Enterprise-Ready AI for Every Conversation Touchpoint

Empower your teams with intelligent Agents, real-time Experiences, and actionable Analytics—built on Symbl.ai’s extensible developer platform.

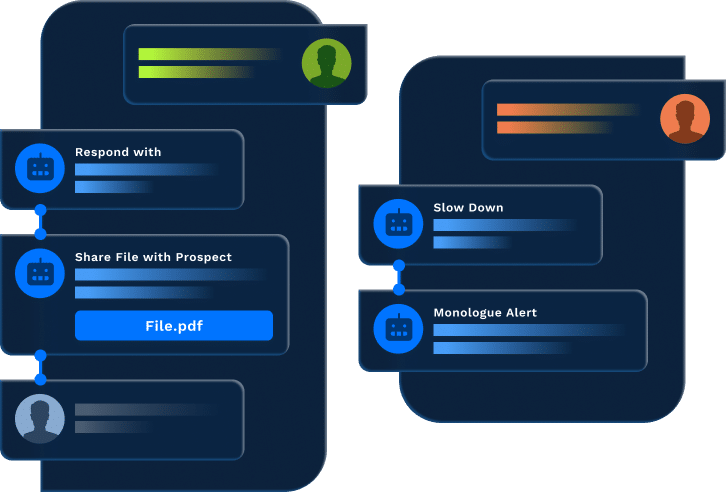

AI Agents for Every Conversation

Symbl’s agentic framework enables autonomous, adaptive AI agents that understand context, follow multi-turn logic, and take action across systems—purpose-built for support, sales, and service.

Real-Time, Personalized AI Experiences

Deliver dynamic in-call and post-call AI experiences—from proactive coaching to intelligent nudges—across voice, video, and chat. Tailored, fast, and fully integrated.

Unified Conversation Intelligence

Transform unstructured voice and text into structured insights—automatically. Measure performance, sentiment, and trends across every interaction to drive better outcomes and compliance.

By Developers, For Developers

Build real-time analysis and agentic workflows with a few lines of code

    // Submit a video file by URL for asynchronous processing.
    // Note: require() works with node-fetch v2; v3 is ESM-only.
    const fetch = require('node-fetch');

    const url = 'https://api.symbl.ai/v1/process/video/url';
    const options = {
      method: 'POST',
      headers: {
        accept: 'application/json',
        'content-type': 'application/json',
        // Replace YOUR_ACCESS_TOKEN with your own access token.
        authorization: 'Bearer YOUR_ACCESS_TOKEN'
      },
      body: JSON.stringify({
        url: 'https://youtube/samplefile.wav',
        name: '',
        diarizationSpeakerCount: 4,
        enableSpeakerDiarization: true
      })
    };

    fetch(url, options)
      .then(res => res.json())
      .then(json => console.log(json))
      .catch(err => console.error('error:' + err));

    // Submit an audio file by URL for asynchronous processing.
    // Note: require() works with node-fetch v2; v3 is ESM-only.
    const fetch = require('node-fetch');

    const url = 'https://api.symbl.ai/v1/process/audio/url';
    const options = {
      method: 'POST',
      headers: {
        accept: 'application/json',
        'content-type': 'application/json',
        // Replace YOUR_ACCESS_TOKEN with your own access token.
        authorization: 'Bearer YOUR_ACCESS_TOKEN'
      },
      body: JSON.stringify({
        url: 'https://symbltestdata.s3.us-east-2.amazonaws.com/newPhonecall.mp3',
        name: '',
        diarizationSpeakerCount: 2,
        enableSpeakerDiarization: true
      })
    };

    fetch(url, options)
      .then(res => res.json())
      .then(json => console.log(json))
      .catch(err => console.error('error:' + err));

    // Submit text messages for asynchronous processing.
    // Note: require() works with node-fetch v2; v3 is ESM-only.
    const fetch = require('node-fetch');

    const url = 'https://api.symbl.ai/v1/process/text';
    const options = {
      method: 'POST',
      headers: {
        accept: 'application/json',
        'content-type': 'application/json',
        // Replace YOUR_ACCESS_TOKEN with your own access token.
        authorization: 'Bearer YOUR_ACCESS_TOKEN'
      },
      body: JSON.stringify({
        // Each message carries its content, its sender, and its time span.
        messages: [
          {
            payload: {
              content: 'Hey, this is Amy calling from Health Insurance Company, I wanted to remind you of your pending invoice and dropping a quick note to make sure the policy does not lapse and you can pay the outstanding balance no later than July 30, 2023. Please call us back at +1 459 305 3949 and extension 5 urgently. ',
              contentType: 'text/plain'
            },
            from: {
              name: 'Amy Brown (Customer Service)',
              userId: '[email protected]'
            },
            duration: {
              startTime: '2020-03-06T03:27:16.174Z',
              endTime: '2020-03-06T03:27:16.774Z'
            }
          }
        ],
        name: 'ASYNC-1692941230681'
      })
    };

    fetch(url, options)
      .then(res => res.json())
      .then(json => console.log(json))
      .catch(err => console.error('error:' + err));
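The video, audio, and text requests above share the same method and headers and differ only in the endpoint and body. As a minimal sketch, the shared part could be factored into one helper. The name `buildRequestOptions` is hypothetical and not part of any Symbl SDK, and the `Bearer` prefix is an assumption taken from the telephony snippet on this page.

```javascript
// Hypothetical helper (not part of any Symbl SDK): builds the fetch options
// shared by the video, audio, and text requests.
function buildRequestOptions(accessToken, body) {
  if (!accessToken) {
    throw new Error('An access token is required');
  }
  return {
    method: 'POST',
    headers: {
      accept: 'application/json',
      'content-type': 'application/json',
      // Assumption: Bearer scheme, matching the telephony snippet on this page.
      authorization: `Bearer ${accessToken}`,
    },
    body: JSON.stringify(body),
  };
}

// Example: options for the audio request with two expected speakers.
const audioOptions = buildRequestOptions('YOUR_ACCESS_TOKEN', {
  url: 'https://symbltestdata.s3.us-east-2.amazonaws.com/newPhonecall.mp3',
  name: '',
  diarizationSpeakerCount: 2,
  enableSpeakerDiarization: true,
});
console.log(audioOptions.headers.authorization); // Bearer YOUR_ACCESS_TOKEN
```

The returned object can be passed directly as the second argument to `fetch(url, options)` for any of the three endpoints.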

    // Connect Symbl to a phone call (PSTN) and email a summary when it ends.
    // Replace the placeholder strings below with your own values.
    const phoneNumber = 'PHONE_NUMBER';
    const emailAddress = 'EMAIL_ADDRESS';
    const authToken = 'YOUR_ACCESS_TOKEN';

    const payload = {
      operation: 'start',
      endpoint: {
        type: 'pstn',
        phoneNumber: phoneNumber,
        dtmf: '{DTMF_MEETING_ID}#,,{MEETING_PASSCODE}#'
      },
      // Action triggered when the connection stops.
      actions: [{
        invokeOn: 'stop',
        name: 'sendSummaryEmail',
        parameters: {
          emails: [emailAddress]
        }
      }],
      data: {
        session: {
          name: 'My Meeting'
        }
      }
    };

    const request = new XMLHttpRequest();
    request.onload = function () {
      // Log the raw response body returned by the API.
      console.log(request.responseText);
    };

    request.open('POST', 'https://api.symbl.ai/v1/endpoint:connect', true);
    request.setRequestHeader('Authorization', `Bearer ${authToken}`);
    request.setRequestHeader('Content-Type', 'application/json');
    request.send(JSON.stringify(payload));
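The payload above can also be assembled from its variable parts. The following is a minimal sketch under stated assumptions: `buildConnectPayload` is a hypothetical helper, not a Symbl API, and the phone number, meeting ID, passcode, and email in the example are placeholders. The DTMF string simply follows the layout shown in the snippet above.

```javascript
// Hypothetical helper (not part of any Symbl SDK): assembles the
// endpoint:connect payload from its variable parts.
function buildConnectPayload({ phoneNumber, meetingId, passcode, emails, sessionName }) {
  // Same layout as the snippet above: meeting ID, '#', two commas,
  // then the passcode and a closing '#'.
  const dtmf = `${meetingId}#,,${passcode}#`;
  return {
    operation: 'start',
    endpoint: { type: 'pstn', phoneNumber, dtmf },
    actions: [
      { invokeOn: 'stop', name: 'sendSummaryEmail', parameters: { emails } },
    ],
    data: { session: { name: sessionName } },
  };
}

// Example with placeholder values.
const examplePayload = buildConnectPayload({
  phoneNumber: '+15550100',
  meetingId: '123456789',
  passcode: '4321',
  emails: ['person@example.com'],
  sessionName: 'My Meeting',
});
console.log(examplePayload.endpoint.dtmf); // 123456789#,,4321#
```

The result can be serialized with `JSON.stringify` and sent exactly as the `payload` object is sent above.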

    // Real-time streaming: expects the Symbl SDK to be available on window.
    const { Symbl } = window;

    (async () => {
      try {
        const symbl = new Symbl({ accessToken: 'YOUR_ACCESS_TOKEN' });
        const connection = await symbl.createConnection();

        await connection.startProcessing({
          config: { encoding: 'OPUS' },
          speaker: { userId: '[email protected]', name: 'Symbl' }
        });

        // Log each transcript together with the speaker's name.
        connection.on('speech_recognition', (speechData) => {
          const { punctuated } = speechData;
          const name = speechData.user ? speechData.user.name : 'User';
          console.log(`${name}:`, punctuated.transcript);
        });

        // Log each question detected in the conversation.
        connection.on('question', (questionData) => {
          console.log('Question:', questionData.payload.content);
        });

        // Keep processing for 60 seconds, then stop and disconnect.
        await Symbl.wait(60000);
        await connection.stopProcessing();
        connection.disconnect();
      } catch (e) {
        // Handle errors here.
        console.error(e);
      }
    })();
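The `speech_recognition` handler above can be pulled out into a small pure function, which makes it easier to test without a live connection. This is a sketch only: `formatTranscriptLine` is a hypothetical name, and the event shape (`punctuated.transcript`, optional `user.name`) is assumed from the snippet above rather than from a full schema.

```javascript
// Hypothetical helper: turns a speech_recognition event into a display line.
// Assumed event shape: { punctuated: { transcript }, user?: { name } }.
function formatTranscriptLine(speechData) {
  // Fall back to "User" when the event carries no speaker name.
  const name = speechData.user && speechData.user.name ? speechData.user.name : 'User';
  // Fall back to an empty string when no punctuated transcript is present.
  const transcript = speechData.punctuated ? speechData.punctuated.transcript : '';
  return `${name}: ${transcript}`;
}

// Example with a hand-written event object.
console.log(formatTranscriptLine({
  punctuated: { transcript: 'Hello there.' },
  user: { name: 'Amy' },
})); // Amy: Hello there.
```

Inside the streaming snippet it could be wired in as `connection.on('speech_recognition', (data) => console.log(formatTranscriptLine(data)));`.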

Unlock Human Interactions at Scale

Build your own personalized experiences that augment, automate and analyze customer conversations across different channels.

Realtime Assist

Real-time AI Assist gives frontline workers, such as sales or customer support agents, timely and accurate support on live calls by generating responses to customer queries, detecting intent, analyzing sentiment, scoring calls for coaching, and more.

Voice Agents

Voice Agents automate Q&A on inbound customer calls by understanding customer needs and providing personalized responses and recommendations.

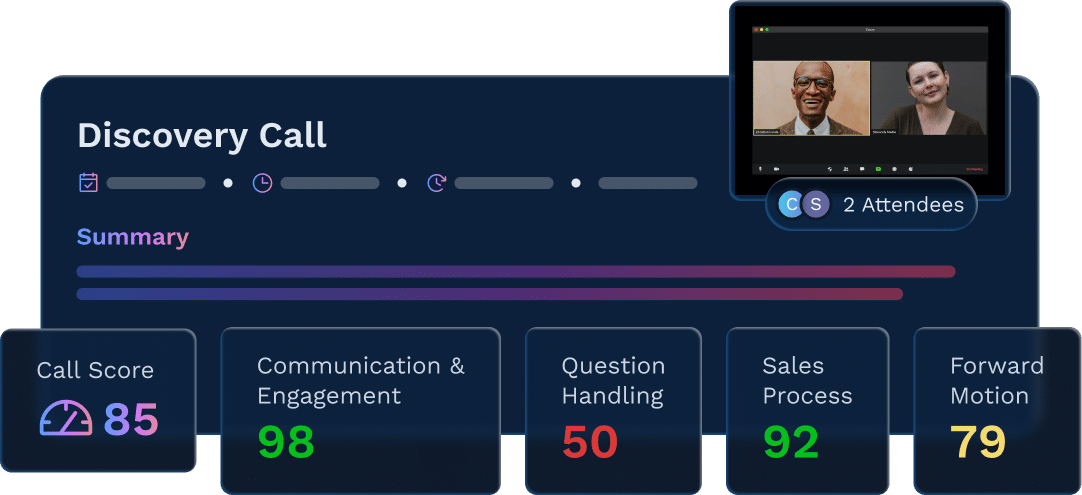

Call Score

Generate quality scores for multi-party calls based on the criteria of your choice. The scores go beyond mere numbers and are contextual to the conversation stage and business process.

Live Sentiment Analysis

Analyze and measure a speaker’s expressed feeling or enthusiasm about a specific topic

Real-Time Alerts for Compliance

Receive real-time alerts on any gaps in adherence to compliance policy or regulations during conversations, mitigating legal risks and enforcing quality assurance.