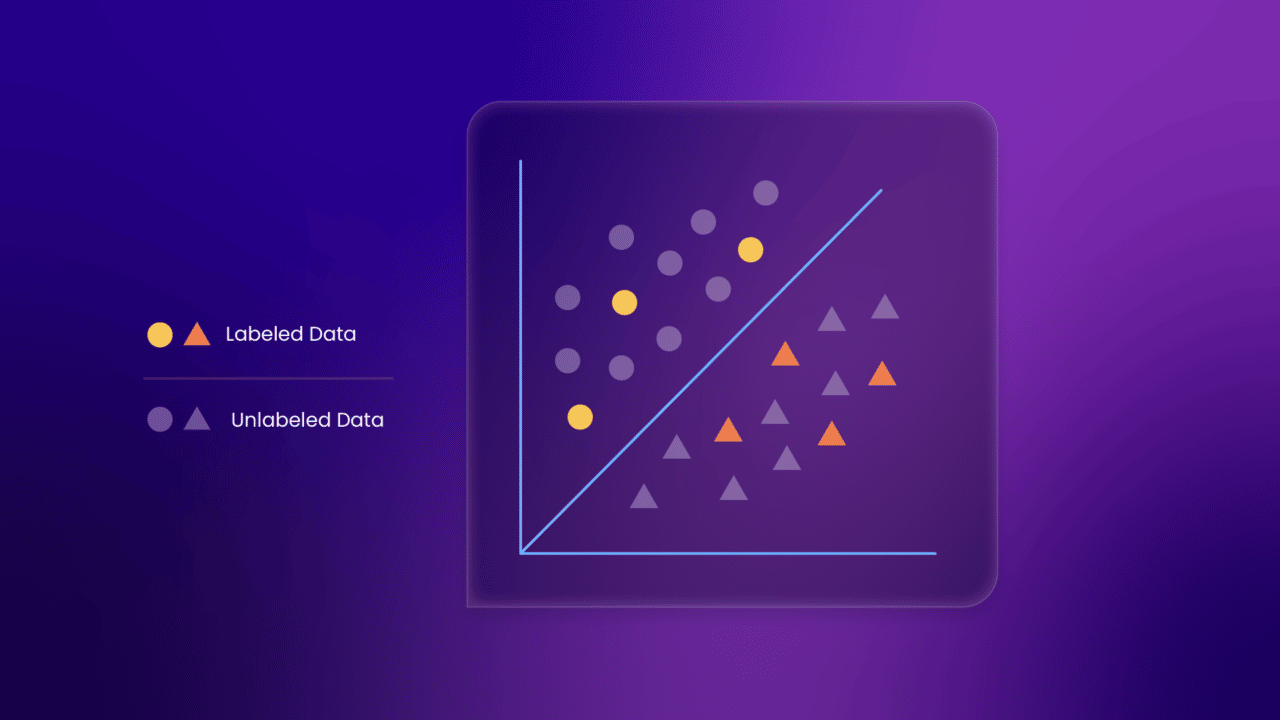

Until recently, machine learning focused on supervised and unsupervised learning techniques. Supervised learning methods such as regression and classification use labeled data—a group of samples tagged with one or more labels. Unsupervised methods, such as outlier detection and clustering, use unlabeled data—pieces of data that are not tagged with labels identifying any characteristics, properties, or classifications.

Semi-supervised learning (SSL) sits between these two approaches: it trains a model on a small amount of labeled data combined with a larger amount of unlabeled data. This matters because unlabeled data is readily available, whereas labeled data is scarce and expensive to produce.

This article compares unsupervised and supervised learning and describes how the two techniques relate to semi-supervised learning. It also explores how SSL works and presents several use cases where SSL excels.

What You Can Do With Semi-Supervised Learning

While a vast amount of data is available for machine learning, including time series data (data collected at successive points in time), videos, audio, text, and images, only a tiny fraction of that data is labeled. Supervised machine learning algorithms learn from labeled data, so you must acquire an extensive amount of training data and label it either manually or algorithmically.

Either way, it’s expensive to label large volumes of data. SSL, by contrast, requires only a small amount of labeled data to train. It learns from the few labeled samples and uses the remaining unlabeled samples as additional training data rather than setting them aside. Less labeling means less overhead than fully supervised learning requires.

On the other hand, you don’t need labeled data to train a model using unsupervised learning, so you avoid the cost of labeling entirely. However, unsupervised learning groups data points purely by similarity, using approaches such as maximum likelihood estimation or clustering. Without labels to guide it, this grouping can be inaccurate, so using both labeled and unlabeled data often provides better learning results.

Semi-supervised learning offers benefits of both unsupervised and supervised learning because it uses a small labeled portion of the dataset to classify the unlabeled remainder as accurately as possible.

Here is how you use SSL to train a model:

- Find a sizable unlabeled dataset.

- Label a small portion of the dataset.

- Use an unsupervised machine learning algorithm to organize the dataset into clusters.

- Build a model that uses the labeled data to classify the rest of the unlabeled data.
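The four steps above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: the clustering algorithm is a hand-written k-means, the function names (`assign`, `kmeans`, `cluster_then_label`) and the toy data are hypothetical, and the initial centroids are seeded from the two hand-labeled points to keep the result deterministic.

```python
from collections import Counter
from math import dist


def assign(points, centroids):
    """Index of the nearest centroid for every point."""
    return [min(range(len(centroids)), key=lambda c: dist(p, centroids[c]))
            for p in points]


def kmeans(points, seeds, iterations=10):
    """Plain k-means starting from the given seed centroids."""
    centroids = list(seeds)
    for _ in range(iterations):
        assignment = assign(points, centroids)
        for c in range(len(centroids)):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = tuple(sum(axis) / len(members)
                                     for axis in zip(*members))
    return assign(points, centroids)


def cluster_then_label(points, known_labels, seeds):
    """known_labels maps the index of each hand-labeled point to its label."""
    assignment = kmeans(points, seeds)
    votes = {}
    for index, label in known_labels.items():
        votes.setdefault(assignment[index], Counter())[label] += 1
    # Each cluster takes the majority label of its hand-labeled members;
    # clusters without any labeled member stay unlabeled (None).
    cluster_label = {c: counts.most_common(1)[0][0]
                     for c, counts in votes.items()}
    return [cluster_label.get(a) for a in assignment]


# Step 1: a (tiny) unlabeled dataset. Step 2: label two of the six points.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
known_labels = {0: "a", 3: "b"}
# Steps 3 and 4: cluster everything, then spread the labels through the clusters.
predicted = cluster_then_label(points, known_labels, seeds=[points[0], points[3]])
print(predicted)  # ['a', 'a', 'a', 'b', 'b', 'b']
```

With real data you would typically swap the toy k-means for a library implementation and check the propagated labels against a held-out labeled set.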

SSL uses many learning methods, including label propagation, active learning, and semi-supervised variants of support vector machines (SVMs). This article focuses on self-training, co-training, and tri-training.

Self-Training

Self-training uses the pseudo labeling technique to train models. Pseudo labeling is inherently iterative: in each round, the current model produces pseudo labels for unlabeled data, and the model trained in the next round learns from them.

This is how pseudo labeling works:

- You start by training a classifier with a small portion of labeled data.

- Once you have a trained classifier, you use it to predict labels for the unlabeled data. You must set a confidence threshold for these predictions.

- You can use only the most confident predictions that meet the threshold as training data for your next iteration.

- You repeat these steps until none of the remaining unlabeled data points receive a prediction that meets the threshold.
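The loop described in these steps might look like the sketch below. Everything in it is an assumption made for illustration: the classifier is a simple nearest-centroid model, the confidence score is a softmax over negative distances, and the names `fit_centroids`, `predict_with_confidence`, and `self_train`, as well as the `threshold` and `max_rounds` parameters, are hypothetical.

```python
from math import dist, exp


def fit_centroids(samples, labels):
    """Train a nearest-centroid classifier: one mean point per class."""
    groups = {}
    for x, y in zip(samples, labels):
        groups.setdefault(y, []).append(x)
    return {y: tuple(sum(axis) / len(xs) for axis in zip(*xs))
            for y, xs in groups.items()}


def predict_with_confidence(centroids, x):
    """Return (label, confidence); confidence is a softmax over negative distances."""
    scores = {y: exp(-dist(x, c)) for y, c in centroids.items()}
    best = max(scores, key=scores.get)
    return best, scores[best] / sum(scores.values())


def self_train(labeled_x, labeled_y, unlabeled_x, threshold=0.9, max_rounds=10):
    train_x, train_y = list(labeled_x), list(labeled_y)
    pool = list(unlabeled_x)
    for _ in range(max_rounds):
        model = fit_centroids(train_x, train_y)
        confident, remaining = [], []
        for x in pool:
            label, confidence = predict_with_confidence(model, x)
            if confidence >= threshold:
                confident.append((x, label))  # accept as a pseudo label
            else:
                remaining.append(x)           # keep for a later round
        if not confident:
            break  # nothing meets the threshold any more
        for x, label in confident:
            train_x.append(x)
            train_y.append(label)
        pool = remaining
    # Final model trained on the hand labels plus all accepted pseudo labels.
    return fit_centroids(train_x, train_y), pool


labeled_x, labeled_y = [(0.0,), (10.0,)], ["low", "high"]
unlabeled_x = [(1.0,), (2.0,), (9.0,), (8.0,), (5.0,)]
model, leftover = self_train(labeled_x, labeled_y, unlabeled_x)
print(model, leftover)
```

In this toy run, the point at 5.0 sits exactly between the two classes, so it never reaches the threshold and stays in `leftover`. A lower threshold would label more points per round at the cost of letting more wrong pseudo labels into the training set.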

Co-Training

The co-training method trains two classifiers. A classifier is an algorithm that automatically assigns input data to a range of classes or categories. An email classifier, for example, examines emails and filters them into spam and non-spam categories.

Each classifier in co-training uses a unique view of the data. This approach assumes that you can describe each sample using two distinct views, or feature sets, that provide separate yet complementary information about the sample.

This is how co-training works:

- You start by building two different models for the two views of your classification problem.

- You train each model based on the initial small set of labeled data.

- Each model independently labels the unlabeled data, keeping only its high-confidence predictions.

- You add these newly labeled samples to the training set, so each model also learns from the other model’s confident predictions, and retrain both models until no new confident predictions remain.

Let’s take an example where you have two views, one containing image data and the other audio data. With the co-training technique, you train one model on the image data and another on the audio data. Then, you use the two models together to label the unlabeled data.
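As a rough illustration of the two-view procedure, the sketch below represents each sample as a pair `(image_features, audio_features)` and trains one nearest-centroid model per view. The classifier, the softmax-style confidence score, the shared training set, and every name here (`fit`, `predict`, `co_train`, `threshold`, `max_rounds`) are assumptions chosen to keep the example short, not a description of any particular library.

```python
from math import dist, exp


def fit(samples, labels):
    """Nearest-centroid classifier: one mean point per class."""
    groups = {}
    for x, y in zip(samples, labels):
        groups.setdefault(y, []).append(x)
    return {y: tuple(sum(axis) / len(xs) for axis in zip(*xs))
            for y, xs in groups.items()}


def predict(model, x):
    """Return (label, confidence) using a softmax over negative distances."""
    scores = {y: exp(-dist(x, c)) for y, c in model.items()}
    best = max(scores, key=scores.get)
    return best, scores[best] / sum(scores.values())


def fit_views(train):
    """One model per view, both trained on the shared labeled set."""
    labels = [y for _, y in train]
    return [fit([views[v] for views, _ in train], labels) for v in (0, 1)]


def co_train(labeled, unlabeled, threshold=0.9, max_rounds=10):
    """labeled: [((view_a, view_b), label)]; unlabeled: [(view_a, view_b)]."""
    train, pool = list(labeled), list(unlabeled)
    for _ in range(max_rounds):
        models = fit_views(train)
        newly_labeled, remaining = [], []
        for views in pool:
            # Each model sees only its own view of the sample.
            guesses = [predict(models[v], views[v]) for v in (0, 1)]
            label, confidence = max(guesses, key=lambda g: g[1])
            if confidence >= threshold:
                newly_labeled.append((views, label))
            else:
                remaining.append(views)
        if not newly_labeled:
            break
        train += newly_labeled  # both models retrain on these next round
        pool = remaining
    return fit_views(train), train, pool


labeled = [(((0.0,), (0.0,)), "cat"), (((10.0,), (10.0,)), "dog")]
unlabeled = [
    ((1.0,), (5.0,)),  # clear in the image view, ambiguous in the audio view
    ((5.0,), (9.0,)),  # ambiguous in the image view, clear in the audio view
    ((5.0,), (5.0,)),  # ambiguous in both views
]
models, train, pool = co_train(labeled, unlabeled)
print([label for _, label in train], pool)
```

The first two unlabeled samples are each labeled by the view that is confident about them, which is the point of using complementary views. The third sample stays in `pool` because neither view reaches the threshold.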

Tri-Training

This SSL technique works like the co-training method but trains three classifiers. If you have three views (one containing video, another image, and another audio data), you train a separate model on each view. Then, you combine the three models to label the unlabeled data, for example by accepting a label when at least two of the models agree.
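One way to combine three classifiers is a majority vote, sketched below for a single labeling round. This is a simplified illustration that follows the three-view description in this section. Fuller tri-training formulations differ in detail, for instance in how the three models are initialized and in which model receives each newly labeled sample. The nearest-centroid classifier, the class names, and the function names (`fit`, `predict`, `tri_train_round`) are hypothetical.

```python
from collections import Counter
from math import dist


def fit(samples, labels):
    """Nearest-centroid classifier: one mean point per class."""
    groups = {}
    for x, y in zip(samples, labels):
        groups.setdefault(y, []).append(x)
    return {y: tuple(sum(axis) / len(xs) for axis in zip(*xs))
            for y, xs in groups.items()}


def predict(model, x):
    """Label of the closest class centroid."""
    return min(model, key=lambda y: dist(x, model[y]))


def tri_train_round(labeled, unlabeled):
    """labeled: [((v0, v1, v2), label)]; unlabeled: [(v0, v1, v2)]."""
    labels = [y for _, y in labeled]
    # One model per view (for example video, image, and audio features).
    models = [fit([views[v] for views, _ in labeled], labels) for v in range(3)]
    agreed, undecided = [], []
    for views in unlabeled:
        votes = Counter(predict(models[v], views[v]) for v in range(3))
        label, count = votes.most_common(1)[0]
        if count >= 2:  # at least two of the three models agree
            agreed.append((views, label))
        else:
            undecided.append(views)
    return agreed, undecided


labeled = [
    (((0.0,), (0.0,), (0.0,)), "speech"),
    (((10.0,), (10.0,), (10.0,)), "music"),
    (((20.0,), (20.0,), (20.0,)), "noise"),
]
unlabeled = [
    ((1.0,), (2.0,), (11.0,)),   # two views say "speech", one says "music"
    ((1.0,), (11.0,), (19.0,)),  # all three views disagree
]
agreed, undecided = tri_train_round(labeled, unlabeled)
print(agreed)     # [(((1.0,), (2.0,), (11.0,)), 'speech')]
print(undecided)  # [((1.0,), (11.0,), (19.0,))]
```

To iterate, you would add the `agreed` samples to the labeled set and call `tri_train_round` again on the `undecided` ones until no new labels are produced.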

Use Cases for Semi-Supervised Learning

Using SSL to train models is most appropriate when you have far more unlabeled data than labeled data. SSL is applied in many areas of machine learning because it can exploit vast amounts of unlabeled data.

Speech Recognition

In speech recognition, recording massive amounts of speech doesn’t cost much. However, you need vast amounts of human labor to listen to and classify the speech. Supervised deep learning algorithms such as recurrent neural networks (RNNs) can process speech sequences. However, conventional RNNs process data in context: they recognize and generate data based on patterns established in a specific context.

Semi-supervised learning is used in speech recognition applications to improve recognition quality by using pre-recorded speech samples as input. The system uses these pre-recorded samples as a basis for training and testing. It then learns to detect patterns in the speech data, which you can use to improve the quality of the overall system.

Audio Classification

Audio datasets are usually only partially labeled. To use supervised learning for audio classification, you must collect audio annotations. Additionally, to annotate audio data, you need to classify its components in a machine-readable format.

However, classifying large-scale audio data is time-consuming and challenging for humans. Identifying multiple sound events in a single audio clip is also difficult.

SSL uses both labeled and unlabeled audio to train a machine learning model. This matters because sizable audio classification datasets often have missing labels. The standard way of handling a missing annotation is to treat it as a negative instance for its class. This is a mistake, however, because a sound whose label is missing may actually be present in the audio.

To further explain this, suppose you have audio containing piano and trombone, but it’s labeled only with the piano class. The conventional approach treats the missing trombone label as an explicit negative (an absent instance). However, this can harm the training of an audio classifier because the trombone is actually present in the audio.
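The piano and trombone example can be made concrete with a small loss calculation. The sketch below shows one possible way to avoid penalizing missing labels: skipping them when computing binary cross-entropy. The article does not prescribe this approach. The function name `masked_bce`, the use of `None` for a missing label, and the probabilities are all assumptions for illustration.

```python
from math import log


def masked_bce(probabilities, targets):
    """Mean binary cross-entropy over the classes whose label is known.

    targets: 1 = present, 0 = absent, None = missing (skipped).
    """
    terms = [-(log(p) if t == 1 else log(1.0 - p))
             for p, t in zip(probabilities, targets)
             if t is not None]
    return sum(terms) / len(terms) if terms else 0.0


# Hypothetical model output for the classes [piano, trombone]: the model
# correctly hears both instruments in the clip.
probabilities = [0.9, 0.8]

# Conventional handling: the missing trombone label becomes an explicit negative.
loss_missing_as_negative = masked_bce(probabilities, [1, 0])

# Masked handling: the missing trombone label is left out of the loss.
loss_missing_masked = masked_bce(probabilities, [1, None])

print(loss_missing_as_negative, loss_missing_masked)
```

Treating the missing label as a negative produces a much larger loss, which means training would push the model away from a correct trombone prediction. With the mask, only the known piano label contributes.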

Semi-supervised learning allows you to handle data with missing labels. It involves using large amounts of data without requiring complete annotations to improve the audio classification models. You can use human expertise to label a small part of the audio dataset. Once you classify a small portion of data, you can let the trained algorithm classify all other audio data.

Web Classification

The internet contains billions of web pages, but not every page is labeled or has all the data you may need. You must rely on hand-labeled web pages to train a system that can automatically classify web pages. Acquiring these labels is expensive because they rely on human effort. At the same time, you can use a web crawler to collect hundreds of web pages at a low cost. Knowing this, having a learning algorithm that can take advantage of unlabeled data is important.

This is where using semi-supervised learning algorithms to label and rank pages makes sense. SSL uses a set of labeled web pages to predict the label of all other web pages you need. Search engines such as Google are already using this technique to label and rank pages in their search results.

Conclusion

This article compared unsupervised and supervised learning, then described how the two methods relate to SSL. You also learned what you can do with SSL and how self-training, co-training, and tri-training work. Finally, the article presented use cases where SSL excels: speech recognition, audio classification, and web classification.

Every machine learning model needs data to train. There’s a large amount of unlabeled data and only a limited amount of labeled data. SSL trains models on a small portion of labeled data together with a large amount of unlabeled data. This approach can deliver better accuracy than unsupervised techniques and lower labeling costs than fully supervised techniques.

In summary, SSL addresses the drawbacks of both supervised and unsupervised learning by using a partially labeled dataset to train a machine learning model.