Sorting items is easy when you know what the items are and how they relate to your sorting objective. When you’re unsure, however, sorting can be challenging and time-consuming. Custom classifiers are intelligent systems built to help humans characterize items and sort them into categories (or classes). Machines learn to identify these categories through a training process.

In machine learning (ML) applications, classifiers help to make decisions about input data. There are, however, many pre-processing steps that help with transforming the raw data before it’s fed into the classifier, and post-processing steps carried out using the result(s) from the classifier.

In this first article of a two-part series, you’ll learn more about classifiers, the relationship between classifiers and the rest of the ML process, and architecture options to be aware of when building classifiers.

What Are Custom Classifiers?

A custom classifier is an ML model that has been trained to sort a particular kind of data (such as text, audio, or images) into predefined categories. Before a classifier is built, you have to define the classes that data will eventually be sorted into, which is the learning objective of the ML process. Once the objective is specified, the data required to build the system is acquired and validated by domain experts.

With the data and the learning objective defined, the engineer goes on to experiment with different ML models with varying algorithms and architectures until the model can classify input data into predetermined categories with reasonable accuracy. How the accuracy is measured and what level of accuracy is reasonable depends on the problem.

With custom classifiers, engineering teams can build more quickly, because the time that would have been spent designing, developing, and deploying an accurate classification system can instead go toward other aspects of the product. Also, by using hosted custom classifiers (for instance on Symbl), you don’t have to spend resources managing, monitoring, and modifying a production pipeline for classification. On the other hand, using a hosted custom classifier means paying for access to the service.

Uses of Custom Classifiers

Custom classifiers are useful in almost any field. Below are just a few examples of how they can be used:

Object Detection

Object detection classifiers can identify an object in an image if they have been trained with a sufficient amount of suitable data. The object to identify could be as generic as a tree or as specific as a palm tree. Other examples where object detection can be useful are face detection, fault detection, identifying stolen vehicles, tracking people or objects, and more.

Sentiment Analysis

Sentiments refer to how people react to things. Classifiers have proven useful in understanding the responses people give and categorizing them as positive, neutral, or negative. This is useful for understanding movie reviews and user feedback, or for monitoring and tracking the tone of conversations.

A custom sentiment classifier on Symbl can also help you identify features/keywords in text or audio data and the corresponding sentiment. This gives more granular feedback as opposed to the general approach of identifying just the overall sentiment of the input data.

Audio Classification

Audio classifiers are useful in understanding audio streams and assigning labels to them based on a learning objective. For example, an audio classifier can identify who’s speaking, what language is being spoken, or the musical genre of a song.

The Input of a Custom Classifier

For a custom classifier to work properly, its input needs to be structured and formatted correctly. Because machines understand numbers, the data must go through a series of transformations that turn it into an array of numbers, regardless of the form it originally comes in.

For example, audio data can be transformed using several libraries. In the code sample below, the librosa library in Python is used. First, the library is imported. You can run pip install librosa to install it or run it on Colab.

Then, some parameters are set, like the sample rate for loading the audio, the duration to be loaded, and the number of samples per track, and, finally, the extraction function is defined.

    import math

    import librosa

    SAMPLE_RATE = 22050  # samples per second used when loading audio
    DURATION = 90  # seconds of audio to load per file
    SAMPLES_PER_TRACK = SAMPLE_RATE * DURATION


    def extract_mfcc_batch(file_path, n_mfcc=13, n_fft=1024, hop_length=512, num_segments=9):
        """Extract and return an mfcc batch."""
        mfcc_batch = []
        num_samples_per_segment = int(SAMPLES_PER_TRACK / num_segments)
        # Number of MFCC frames a full-length segment should produce
        expected_num_mfcc_vectors_per_segment = math.ceil(num_samples_per_segment / hop_length)

        # Load DURATION seconds of audio, starting 9 seconds into the file
        signal, sr = librosa.load(file_path, sr=SAMPLE_RATE, duration=DURATION, offset=9)

        # process segments, extracting mfccs and storing data
        for s in range(num_segments):
            start_sample = num_samples_per_segment * s
            finish_sample = start_sample + num_samples_per_segment

            # Recent librosa versions require the signal to be passed as keyword `y`
            mfcc = librosa.feature.mfcc(
                y=signal[start_sample:finish_sample],
                sr=SAMPLE_RATE,
                n_fft=n_fft,
                n_mfcc=n_mfcc,
                hop_length=hop_length,
            )
            mfcc = mfcc.T  # transpose to shape (frames, n_mfcc)
            mfcc_batch.append(mfcc.tolist())

        return mfcc_batch

Here, a function called extract_mfcc_batch is defined to load ninety seconds of an audio file and split it into nine segments. This helps you extract useful features from the audio file. Also, by splitting the audio into nine segments, you get more training examples per file, which can help the model achieve higher accuracy. In this function, an empty list called mfcc_batch is defined, and the number of samples per segment is computed. The librosa.load method is called to load the audio file, and then the signal is divided into nine segments.

For each segment, the librosa.feature.mfcc method is called to generate mel-frequency cepstral coefficients (MFCCs). These coefficients represent the audio signal in a form that is similar to how humans perceive sound, which makes them well suited for audio recognition tasks. They are computed mainly by taking the discrete Fourier transform of short frames of the audio signal, mapping the resulting power spectrum onto the mel scale, computing the logarithm of the result, and then applying a discrete cosine transform. Using these features, models can be trained on data that approximates the human perception of sound.

Finally, the array is transposed so that each row corresponds to one time frame, and the result is appended to the mfcc_batch list.

The Output of a Custom Classifier

The output of a custom classifier is usually an integer representing the predicted class. In binary classification, this is either 0 or 1; in multi-class classification, other integers are included. Typically, a mapping from these integers to the actual class names is defined in advance so that the classifier’s result can be translated into the actual class.
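
As a minimal sketch of this mapping step, the snippet below picks the highest-scoring class from a list of scores and looks up its name. The class names and the `decode_prediction` helper are hypothetical and only illustrate the idea; a real classifier defines its own label mapping.

```python
# Hypothetical label mapping for a three-class sentiment classifier.
CLASS_NAMES = {0: "negative", 1: "neutral", 2: "positive"}


def decode_prediction(scores):
    """Return (class_index, class_name) for the highest-scoring class."""
    class_index = max(range(len(scores)), key=lambda i: scores[i])
    return class_index, CLASS_NAMES[class_index]


# Example: per-class scores as a classifier might produce them.
result = decode_prediction([0.1, 0.2, 0.7])
print(result)
```

Keeping the mapping in one place makes it easy to change class names without touching the model itself.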

Existing Classifiers

A number of pretrained classifiers already exist for various problems. Some of these include:

- The Inception-ResNet classifier, which was built to identify common objects in context.

- The YAMNet classifier, which was built to identify audio events from the AudioSet ontology.

- The disease classification model, which was built to classify images showing plant diseases.

- The Tiny Video Net classifier, which is trained to recognize actions in videos.

- The Toxicity classifier, which was trained on the Civil Comments dataset to identify toxic comments.

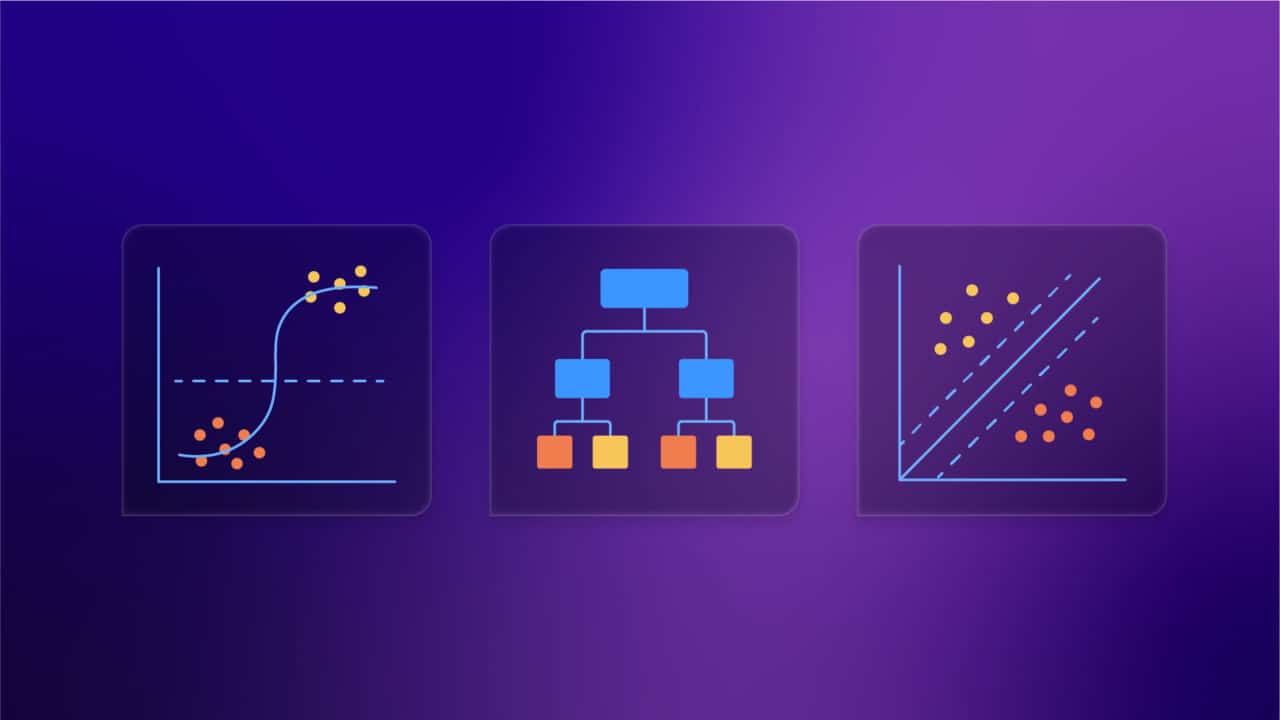

Algorithm Options for Building a Custom Classifier

A range of algorithms is available for building efficient ML models and, more specifically, classifiers, including the Perceptron, naive Bayes, decision trees, and more.

Perceptron

The Perceptron classifier is a linear classifier that uses supervised learning to achieve its learning objective. Between its input and output layers, the Perceptron computes a weighted sum: each input value is multiplied by a value known as its *weight*, and the results are added to a bias value. An activation function then maps this sum into a bounded range.
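
The weighted sum and activation described above can be sketched in a few lines of plain Python. This is an illustrative toy, not a production implementation: the step activation, the learning rate, and the logical AND dataset are all assumptions chosen to keep the example small.

```python
def step(z):
    """Step activation: maps any real value to 0 or 1."""
    return 1 if z >= 0 else 0


def predict(weights, bias, inputs):
    """Weighted sum of the inputs plus the bias, passed through the activation."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(weighted_sum)


def train(samples, labels, epochs=10, lr=1.0):
    """Perceptron learning rule: adjust weights and bias by the prediction error."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in zip(samples, labels):
            error = target - predict(weights, bias, inputs)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Toy data (assumed for illustration): logical AND, which is linearly separable.
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y = [0, 0, 0, 1]
weights, bias = train(X, y)
predictions = [predict(weights, bias, x) for x in X]
print(predictions)
```

Because the Perceptron is linear, this approach only works when a straight line (or hyperplane) can separate the classes.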

Naive Bayes

Naive Bayes is a probabilistic model that assumes that all input features are independent of one another given the class. This is known as conditional independence. Despite this simplifying assumption, naive Bayes classifiers often perform reasonably well compared to other algorithms like logistic regression and k-nearest neighbors. The Bernoulli naive Bayes classifier is used when the features are binary, while the multinomial naive Bayes classifier is used when the features are discrete counts, such as word frequencies.
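
To make the independence assumption concrete, here is a small from-scratch sketch of a Bernoulli naive Bayes classifier. The function names, the Laplace smoothing value, and the toy data are assumptions made for illustration; in practice you would more likely use an established library.

```python
import math
from collections import defaultdict


def train_bernoulli_nb(samples, labels, alpha=1.0):
    """Estimate class priors and per-feature probabilities P(x_j = 1 | class)."""
    by_class = defaultdict(list)
    for features, label in zip(samples, labels):
        by_class[label].append(features)

    model = {}
    for label, rows in by_class.items():
        prior = len(rows) / len(samples)
        # Laplace smoothing (alpha) avoids zero probabilities.
        probs = [
            (sum(row[j] for row in rows) + alpha) / (len(rows) + 2 * alpha)
            for j in range(len(rows[0]))
        ]
        model[label] = (prior, probs)
    return model


def predict_bernoulli_nb(model, features):
    """Pick the class with the highest log posterior."""
    def log_posterior(label):
        prior, probs = model[label]
        total = math.log(prior)
        # Independence assumption: per-feature log probabilities simply add up.
        for x, p in zip(features, probs):
            total += math.log(p if x == 1 else 1 - p)
        return total

    return max(model, key=log_posterior)


# Hypothetical toy data: features are [contains "great", contains "boring"].
X = [[1, 0], [1, 0], [0, 1], [0, 1], [1, 1]]
y = ["positive", "positive", "negative", "negative", "negative"]
model = train_bernoulli_nb(X, y)
print(predict_bernoulli_nb(model, [1, 0]))
```

Working with log probabilities avoids numerical underflow when many features are multiplied together.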

Decision Tree

The decision tree classifier works by making successive decisions based on the features in the data, and the tree is built using a greedy algorithm. The tree starts at a single point (the root) and splits into branches until a stopping condition, such as a desired maximum depth, is reached. Data coming into this tree enters at the root and, depending on its characteristics, makes its way down the branches until it finally gets classified.
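
The sketch below shows one way such a tree could be built and traversed. It assumes Gini impurity as the splitting criterion and numeric features with threshold splits; both are common choices but are assumptions here, and the plant data is made up for illustration.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


def best_split(rows, labels):
    """Greedy step: try every feature/threshold, keep the lowest weighted impurity."""
    best = None
    for feature in range(len(rows[0])):
        for threshold in sorted({row[feature] for row in rows}):
            left = [l for r, l in zip(rows, labels) if r[feature] <= threshold]
            right = [l for r, l in zip(rows, labels) if r[feature] > threshold]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, feature, threshold)
    return best


def build_tree(rows, labels, max_depth=3):
    """Return a leaf (class label) or a node (feature, threshold, left, right)."""
    split = best_split(rows, labels) if max_depth > 0 and len(set(labels)) > 1 else None
    if split is None:
        return Counter(labels).most_common(1)[0][0]
    _, feature, threshold = split
    left = [(r, l) for r, l in zip(rows, labels) if r[feature] <= threshold]
    right = [(r, l) for r, l in zip(rows, labels) if r[feature] > threshold]
    return (
        feature,
        threshold,
        build_tree([r for r, _ in left], [l for _, l in left], max_depth - 1),
        build_tree([r for r, _ in right], [l for _, l in right], max_depth - 1),
    )


def classify(tree, row):
    """Walk from the root down the branches until a leaf is reached."""
    while isinstance(tree, tuple):
        feature, threshold, left, right = tree
        tree = left if row[feature] <= threshold else right
    return tree


# Hypothetical toy data: [height_m, leaf_length_cm] -> plant type.
X = [[1.0, 5.0], [1.5, 6.0], [8.0, 200.0], [10.0, 250.0]]
y = ["shrub", "shrub", "palm", "palm"]
tree = build_tree(X, y)
print(classify(tree, [9.0, 220.0]))
```

The algorithm is greedy because each split is chosen to be the best one available at that node, without looking ahead to later splits.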

Logistic Regression

Logistic regression is a statistical model that classifies data into discrete classes using a logistic function. The logistic function outputs a probability between 0 and 1, which is then compared against a threshold to pick a class.

For example, logistic regression is used in the gaming industry to predict which equipment users may want to buy so that it can be recommended to them. Binary logistic regression is the most common form, but the algorithm can be extended to multi-class problems.
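
As a rough illustration of how the logistic function turns a weighted sum into a probability and then a class, consider the sketch below. The weights and bias are hand-picked placeholders (in practice they are learned from data), and the 0.5 threshold is an assumed default.

```python
import math


def sigmoid(z):
    """Logistic function: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, features):
    """Probability that the input belongs to the positive class."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


def predict(weights, bias, features, threshold=0.5):
    """Turn the probability into a discrete class (0 or 1)."""
    return 1 if predict_proba(weights, bias, features) >= threshold else 0


# Hypothetical, hand-picked parameters; real ones come from training.
weights, bias = [2.0, -1.0], 0.0
print(predict_proba(weights, bias, [1.0, 0.0]), predict(weights, bias, [1.0, 0.0]))
```

Raising or lowering the threshold trades off false positives against false negatives, which is often tuned per application.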

K-Nearest Neighbor

K-nearest neighbors is an algorithm that works on the assumption that data points close to each other in feature space tend to belong to the same class. A new data point is assigned the most common class among its k nearest neighbors. The number of neighbors to consider, k, is a hyperparameter that is usually tuned by comparing performance on validation data.
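
A minimal sketch of this idea, assuming Euclidean distance and a simple majority vote, might look like the following. The `knn_predict` name and the toy points are illustrative assumptions.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical toy data: two well-separated clusters.
X = [[1.0, 1.0], [1.5, 2.0], [2.0, 1.0], [8.0, 8.0], [9.0, 8.5], [8.5, 9.0]]
y = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(X, y, [1.2, 1.4]))
```

Note that there is no separate training step here: the algorithm simply stores the data and does all of its work at prediction time.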

Artificial Neural Networks

Artificial neural networks (deep learning) are techniques that try to emulate the workings of the brain’s neural network. They work similarly to the Perceptron, but in this case, there are hidden layers between the input and output where various transformations happen. Depending on the exact nature of these transformations, the model could be a convolutional neural network, a long short-term memory neural network, an attention-based neural network, and so on.
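
To show what a hidden layer adds, the sketch below runs a forward pass through a tiny network with one hidden layer. The weights are hand-picked rather than learned, and the ReLU activation and 0.5 decision threshold are assumptions. The network computes XOR, a function that a single Perceptron cannot represent because it is not linearly separable.

```python
def relu(values):
    """ReLU activation applied element-wise."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


# Hand-picked (not learned) parameters, assumed for illustration.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]


def forward(inputs):
    """Input -> hidden layer (ReLU) -> output score -> class 0 or 1."""
    hidden = relu(dense(inputs, HIDDEN_W, HIDDEN_B))
    (score,) = dense(hidden, OUTPUT_W, OUTPUT_B)
    return 1 if score >= 0.5 else 0


xor_outputs = [forward(x) for x in ([0, 0], [0, 1], [1, 0], [1, 1])]
print(xor_outputs)
```

In a real network, these parameters would be found by training (typically gradient descent with backpropagation) rather than set by hand.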

Support Vector Machine

A support vector machine (SVM) is an algorithm that attempts to identify a hyperplane that accurately separates the members of different classes. To achieve this, data points are first represented in an n-dimensional space, and then the hyperplane is identified. The goal is to maximize the margin, that is, the distance between the hyperplane and the nearest data points of each class.

SVMs are used in face detection, bioinformatics, and more.
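
The margin idea can be illustrated without a full SVM solver. The sketch below does not train an SVM (real implementations solve an optimization problem); it only measures the margin of a few hand-picked candidate hyperplanes and keeps the one with the largest margin. The candidates, the data, and the function names are all assumptions for illustration.

```python
import math


def signed_distance(w, b, point):
    """Signed distance from `point` to the hyperplane w . x + b = 0."""
    return (sum(wi * xi for wi, xi in zip(w, point)) + b) / math.hypot(*w)


def margin(w, b, points, labels):
    """Smallest distance from any point to the hyperplane.

    Labels are +1 or -1; the result is negative if any point is misclassified.
    """
    return min(label * signed_distance(w, b, p) for p, label in zip(points, labels))


# Hypothetical toy data with labels -1 and +1.
X = [[1.0, 1.0], [2.0, 1.0], [4.0, 4.0], [5.0, 4.0]]
y = [-1, -1, 1, 1]

# Hand-picked candidate hyperplanes (w, b); an SVM would search for the optimum.
candidates = [
    ([1.0, 0.0], -3.0),  # vertical line x = 3
    ([0.0, 1.0], -2.5),  # horizontal line y = 2.5
    ([1.0, 1.0], -5.5),  # diagonal line x + y = 5.5
]
best_w, best_b = max(candidates, key=lambda c: margin(c[0], c[1], X, y))
print(best_w, best_b)
```

All three candidates separate the classes, but they leave different amounts of room around the boundary, which is exactly what an SVM optimizes.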

Custom Classifiers and the ML Application

When used in real-world systems, classifiers are just one stage in a sequence of operations. The entire ML application includes other units, like the data pipeline, which receives data either as a stream or in batches.

The data that is passed through the system undergoes transformation, since machines only understand numbers and the classification system expects data formatted in a particular way. This is the pre-processing step, and its result is typically an array of numbers. The classifier then groups this processed data into specific categories.

The classifier (or the ML model) takes in the data and makes a prediction using its trained algorithm. The prediction is the class (or category) that the input data will be assigned to. This information is then typically saved and used either on its own or with other information that will be used to make a decision.
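
The stages described above can be sketched as three small functions chained together. Everything here is a stand-in: the vocabulary, the hand-picked weights, and the simple linear scoring are assumptions that take the place of a real pre-processing step and a trained model.

```python
VOCABULARY = ["great", "good", "bad", "boring"]  # hypothetical vocabulary
WEIGHTS = [1.0, 0.5, -1.0, -0.5]  # hypothetical, hand-picked weights
CLASS_NAMES = {0: "negative", 1: "positive"}


def preprocess(text):
    """Pre-processing: turn raw text into an array of word counts."""
    words = text.lower().split()
    return [words.count(term) for term in VOCABULARY]


def classify(features):
    """Classification: a stand-in linear model that returns a class index."""
    score = sum(w * x for w, x in zip(WEIGHTS, features))
    return 1 if score >= 0 else 0


def postprocess(class_index):
    """Post-processing: map the integer back to a human-readable class."""
    return CLASS_NAMES[class_index]


def run_pipeline(text):
    """Chain the three stages in the order the data flows through them."""
    return postprocess(classify(preprocess(text)))


print(run_pipeline("A great and good movie"))
```

Keeping the stages separate makes it possible to retrain or swap the classifier without changing the code around it.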

Classifiers are typically retrained after some time to make sure that they consistently perform as expected.

Conclusion

In this first article of a two-part series, you learned that custom classifiers are ML algorithms that sort unstructured data into buckets or classes. You also learned about currently existing classifiers, different algorithms used to build classifiers, and how classifiers interact with the entire ML application.

Symbl.ai enables you to use AI to get value from interactive multimedia and improve its effectiveness. By utilizing its custom classifiers, you can develop quickly and improve your productivity.