Summary

In this article we focus on how to integrate contact center solutions and their recorded calls with the Symbl.ai Async API. This integration unlocks key capabilities such as:

- Sentiment analysis at the sentence and topic level

- Searching themes and trends that are important to your business

- Speaker analytics and conversation summaries that can be leveraged for multiple purposes, such as coaching

- Governance and compliance

- Follow-ups and scheduling of next steps

- Data aggregations

- Indexing for search, and more.

Why use your contact center recordings with Symbl?

More and more developers are building contact center applications and are looking to add intelligence beyond simple speech recognition.

Symbl.ai enables you to add best-in-class conversation intelligence technology that combines high-quality transcripts with pre-built and programmable insights, actions, sentiments, topics and more. Symbl.ai is a developer platform with APIs and SDKs that let you leverage contextual understanding to comprehend human-to-human conversations, which are usually chaotic.

This is exactly what Symbl.ai technology targets. It lets developers capture conversations in real time, so you can analyze, gain instant feedback and pull insights during the call, and, with the Async API, run the same analysis on calls that were already recorded, such as your contact center recordings.

What will this integration look like?

Before the integration starts, what do I need?

Cloud Services

Recorded files:

- Recorded files must be publicly accessible from your cloud storage (access can be time-limited as well) so that Symbl Async API requests can access the files for conversation analysis.

- Recorded file length – Symbl conversation analysis supports files up to 4 hours long.

Note: If a recording is longer than 4 hours, split it into multiple files. Submit the first file with a POST Async API request, then use the conversationId returned in that response to submit the remaining files with PUT Async API requests.
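The POST-then-PUT flow in the note above can be sketched as follows. This is a minimal sketch, assuming Node.js 18+ (global `fetch`), that each part is already a publicly accessible URL, and that appending a file uses `PUT https://api.symbl.ai/v1/process/audio/url/{conversationId}`; the helper name `submitRecordingParts` is hypothetical. The `fetchImpl` parameter exists only so the function can be tested without network access.

```javascript
// Hypothetical helper: submits a recording that was split into parts.
// Assumes Node.js 18+ (global fetch) and publicly accessible part URLs.
const SYMBL_ASYNC_URL = 'https://api.symbl.ai/v1/process/audio/url';

async function submitRecordingParts(partUrls, accessToken, fetchImpl = fetch) {
  if (partUrls.length === 0) throw new Error('No recording parts to submit');
  const headers = {
    Authorization: `Bearer ${accessToken}`,
    'Content-Type': 'application/json',
  };

  // First part: POST creates the conversation and returns its conversationId.
  const first = await fetchImpl(SYMBL_ASYNC_URL, {
    method: 'POST',
    headers,
    body: JSON.stringify({ url: partUrls[0] }),
  });
  if (!first.ok) throw new Error(`POST failed with status ${first.status}`);
  const { conversationId, jobId } = await first.json();
  const jobIds = [jobId];

  // Remaining parts: PUT appends each file to the same conversationId.
  for (const url of partUrls.slice(1)) {
    const res = await fetchImpl(`${SYMBL_ASYNC_URL}/${conversationId}`, {
      method: 'PUT',
      headers,
      body: JSON.stringify({ url }),
    });
    if (!res.ok) throw new Error(`PUT failed with status ${res.status}`);
    jobIds.push((await res.json()).jobId);
  }
  return { conversationId, jobIds };
}
```

The sketch sends the parts back to back. If your Symbl account requires a job to finish before the next part is appended, wait for the previous jobId to complete (for example through the webhook described in Step 3) before sending the next PUT.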

Database:

- Store recording metadata such as business/company name, service type, customer name and email, representative name, conversation datetime, language code, etc.

- Async request concurrency management – Symbl allows up to 50 active Async API requests (status scheduled or in_progress) per account for non-enterprise deployments.

Note: If your concurrency requirements exceed 50, reach out to your Symbl representative for other deployment options.

- Conversation analysis results

Note: The database type (MongoDB, Google DataStore, etc.) is your choice. Here is a Google DataStore instance example:

Serverless functions:

- Serverless functions (e.g. AWS Lambda, Google Cloud Functions, Azure Functions) need access to the relevant database tables and storage endpoints, and serve as the key component for adding, searching and updating conversation analysis status to and from Symbl. Here is a simple example of an AWS Lambda function that generates a Symbl API access token and then calls the Async Audio URL API to submit a recorded file from an AWS S3 bucket for conversation analysis.

Note: As a best practice, increase the Lambda timeout from the default 3 seconds to 3 minutes.

[javascript]

// The `request` package must be bundled with the Lambda deployment package.
const request = require('request');

// Wraps a `request` call in a Promise that rejects on network or HTTP errors
// (throwing inside the callback would not reject the surrounding Promise).
function postJson(options) {
  return new Promise((resolve, reject) => {
    // json: true serializes the body object and parses the JSON response
    request(Object.assign({ method: 'POST', json: true }, options), (err, res, body) => {
      if (err) {
        console.error('error posting json: ', err);
        return reject(err);
      }
      if (res.statusCode >= 400) {
        return reject(new Error(`Request failed with status ${res.statusCode}: ${JSON.stringify(body)}`));
      }
      resolve(body);
    });
  });
}

exports.handler = async function (event) {
  // 1. Generate a Symbl access token from the app credentials.
  const auth = await postJson({
    url: 'https://api.symbl.ai/oauth2/token:generate',
    body: {
      type: 'application',
      appId: '<YOUR_APP_ID>',        // placeholder: your Symbl appId
      appSecret: '<YOUR_APP_SECRET>' // placeholder: your Symbl appSecret
    }
  });

  // 2. Submit the recorded file to the Async Audio URL API.
  const job = await postJson({
    url: 'https://api.symbl.ai/v1/process/audio/url',
    headers: { Authorization: 'Bearer ' + auth.accessToken },
    body: {
      url: '<RECORDING_URL>', // placeholder: publicly accessible URL of the S3 recording
      name: 'The meeting name',
      confidenceThreshold: 0.6,
      timezoneOffset: 0,
      /* This example shows how to use the separate channel feature when the
         file was recorded in two separate channels */
      enableSeparateRecognitionPerChannel: true,
      channelMetadata: [
        { channel: 1, speaker: { name: 'agent name', email: 'agent@example.com' } },
        { channel: 2, speaker: { name: 'customer name', email: 'customer@example.com' } }
      ]
    }
  });

  console.log(job); // contains conversationId and jobId
  /* Store the job data (conversationId, jobId) in a DB table */
  return job;
};

[/javascript]

- An HTTPS endpoint for the serverless function that hosts the webhook.

Symbl

- A valid access token for the Symbl APIs
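Because every Symbl API call needs a valid token, the serverless functions can reuse one token instead of generating a new one per request. This is a minimal sketch, assuming Node.js 18+ (global `fetch`) and that the `token:generate` response contains `accessToken` and `expiresIn` (in seconds); `createTokenProvider` and the one-minute refresh margin are hypothetical choices, and `fetchImpl`/`now` are injectable for testing.

```javascript
// Hypothetical token cache: reuses the Symbl access token until shortly
// before it expires, then generates a new one.
const TOKEN_URL = 'https://api.symbl.ai/oauth2/token:generate';
const REFRESH_MARGIN_MS = 60_000; // refresh one minute before expiry

function createTokenProvider(appId, appSecret, fetchImpl = fetch, now = Date.now) {
  let cached = null; // { accessToken, expiresAt }

  return async function getAccessToken() {
    if (cached && now() < cached.expiresAt - REFRESH_MARGIN_MS) {
      return cached.accessToken;
    }
    const res = await fetchImpl(TOKEN_URL, {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({ type: 'application', appId, appSecret }),
    });
    if (!res.ok) throw new Error(`Token request failed with status ${res.status}`);
    const { accessToken, expiresIn } = await res.json();
    cached = { accessToken, expiresAt: now() + expiresIn * 1000 };
    return accessToken;
  };
}
```

In a Lambda, creating the provider outside the handler lets warm invocations share the cached token.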

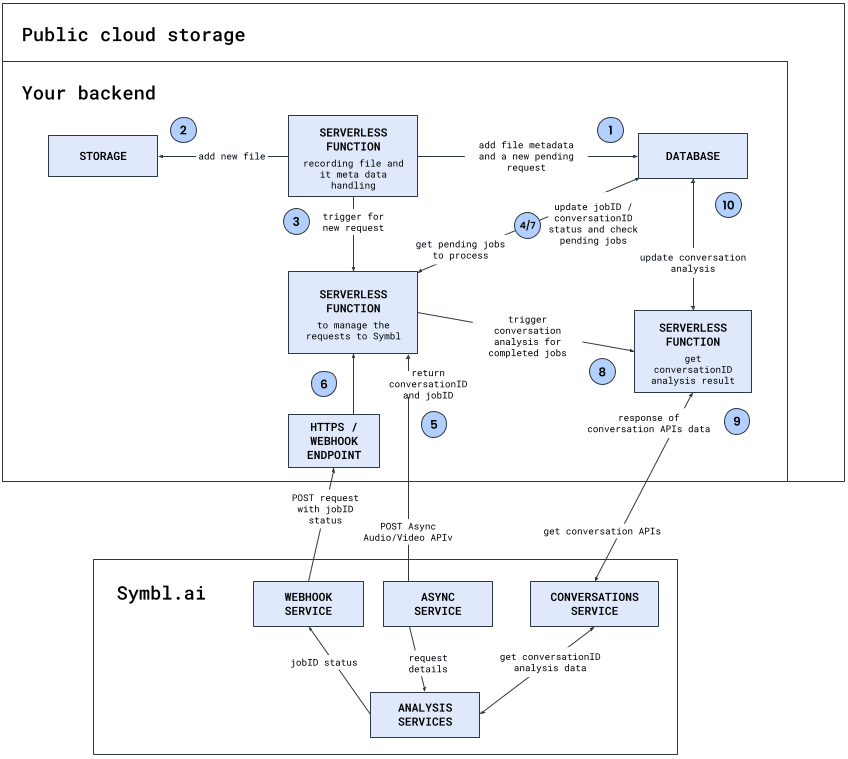

Integration Steps – Initial infrastructure:

Step 1: Database design

Here are a few things to consider storing when you design your database tables and fields:

- “Conversation-metadata” table (populated before Symbl conversation analysis):

- Unique identifier

- File name

- Added date

- File duration

- Recording URL

- Language code

- Time Zone Offset

- Custom Vocabulary – If the conversation contains words that are uncommon in its spoken language, listing them helps Speech to Text produce better results.

- Company/Business name – If you serve different business segments.

- Number of speakers

- Recorded in separate channels (True/False) – A call can be recorded in a single (mono) channel, or with each participant in a separate channel.

- Speaker names

- Speaker emails

- “Symbl-Async-Request” table – Holds the unique identifiers and status of each Async API request, linked to the request metadata:

- conversationId

- jobId

- jobId status

- Datetime

- The “unique identifier” from “Conversation-metadata” table

- Conversation-Intelligence-<names> tables – If you want to manage or aggregate intelligence data across calls, and/or remove the analysed data from your Symbl account, store the analysed data in a separate table per Conversation API, keyed by the unique conversationId of each conversation request.

- Business-UX table for Symbl Pre-Built UI usage (Optional):

- Company/Business name

- Logo URL

- Favicon URL

- Additional UX configurations

Step 2: Create serverless function for adding/searching recordings

When a new recording and/or new metadata are created:

- If the recording file was not added yet, add it to your storage

- Add the conversation metadata to the “Conversation-metadata” table

- Add a new record to the “Symbl-Async-Request” table:

- “jobId status” – “pending”

- “Datetime” – Current time

- “jobId” and “conversationId” with no value

- “Unique identifier” value from “Conversation-metadata” table

- Trigger the serverless function that manages requests (Step 3)
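The Step 2 flow can be sketched as a single function. This is a minimal sketch under stated assumptions: `db` is a hypothetical data-access object with `insertMetadata(record)` and `insertAsyncRequest(record)`, `triggerDispatcher` is a hypothetical callback that invokes the Step 3 function (for example an asynchronous Lambda invocation), field names are illustrative, and uploading the file to storage is left out.

```javascript
const crypto = require('crypto');

// Hypothetical Step 2 handler: stores metadata, queues a "pending" Async
// request record, and triggers the Step 3 request manager.
async function addRecording(metadata, { db, triggerDispatcher, now = () => new Date() }) {
  // Unique identifier that links the two tables.
  const metadataId = crypto.randomUUID();

  await db.insertMetadata({
    ...metadata,
    id: metadataId,
    addedDate: now().toISOString(),
  });

  // jobId and conversationId stay empty until Symbl accepts the request.
  await db.insertAsyncRequest({
    metadataId,
    status: 'pending',
    datetime: now().toISOString(),
    jobId: null,
    conversationId: null,
  });

  await triggerDispatcher();
  return metadataId;
}
```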

Step 3: Create serverless function for managing requests

This serverless function can be triggered in two ways:

#1 – Serverless function trigger from step 2:

- Query the Symbl-Async-Request table for the number of active requests (scheduled, in_progress):

- If the number of active requests is below the maximum concurrency (50 requests):

- Query the oldest “pending” record in Symbl-Async-Request that has no conversationId or jobId

- Submit the selected record with a Symbl Async Audio API request that includes:

- The serverless function webhook endpoint in the request body

- Relevant fields taken from the metadata, such as name, url (recording URL), customVocabulary, etc., in the request body

- Speaker separation method:

- If each speaker was recorded in a separate channel, set “enableSeparateRecognitionPerChannel” to “true” and provide “channelMetadata” with the relevant data in the request body

- If the recording has only one channel, set “enableSpeakerDiarization” to “true” and “diarizationSpeakerCount” to the number of participants in the conversation, as query parameters

- Update the selected record in the Symbl-Async-Request table with the conversationId and jobId returned in the Async API response

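- If the number of active requests has reached the maximum concurrency (50 requests): leave the record “pending”; it is picked up when an active job completes (see #2 below)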

Note: For large files, allow up to 3 minutes for the Async API response before updating the status.
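The selection and request-building logic of #1 can be sketched as two pure functions. This is a minimal sketch, assuming the request records are already loaded (in a real database you would run the count and the "oldest pending" query separately), that `createdAt` is a millisecond timestamp, and that the webhook goes in a `webhookUrl` body field; all record field names and both function names are hypothetical.

```javascript
// Hypothetical Step 3 (#1) helpers. Record field names are illustrative.
const SYMBL_ASYNC_URL = 'https://api.symbl.ai/v1/process/audio/url';
const MAX_CONCURRENT = 50; // non-enterprise limit mentioned above
const ACTIVE = new Set(['scheduled', 'in_progress']);

// Returns the oldest pending record without a jobId/conversationId,
// or null when the concurrency limit is reached or nothing is pending.
function selectNextPending(requests, max = MAX_CONCURRENT) {
  const active = requests.filter((r) => ACTIVE.has(r.status)).length;
  if (active >= max) return null;
  const pending = requests
    .filter((r) => r.status === 'pending' && !r.jobId && !r.conversationId)
    .sort((a, b) => a.createdAt - b.createdAt);
  return pending[0] || null;
}

// Builds the Async Audio URL request for a metadata record, choosing the
// speaker separation method described above.
function buildAsyncRequest(meta, webhookUrl) {
  const body = { url: meta.recordingUrl, name: meta.fileName, webhookUrl };
  if (meta.customVocabulary && meta.customVocabulary.length > 0) {
    body.customVocabulary = meta.customVocabulary;
  }
  let endpoint = SYMBL_ASYNC_URL;
  if (meta.separateChannels) {
    // One speaker per channel: recognize each channel separately.
    body.enableSeparateRecognitionPerChannel = true;
    body.channelMetadata = meta.speakers.map((speaker, i) => ({
      channel: i + 1,
      speaker: { name: speaker.name, email: speaker.email },
    }));
  } else {
    // Mono recording: speaker diarization settings as query parameters.
    const query = new URLSearchParams({
      enableSpeakerDiarization: 'true',
      diarizationSpeakerCount: String(meta.speakerCount),
    });
    endpoint += `?${query}`;
  }
  return { endpoint, body };
}
```

Keeping these functions free of I/O makes the concurrency rule easy to test; the surrounding serverless function only has to load the records, POST the built request, and save the returned conversationId and jobId.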

#2 – Webhook trigger the serverless function:

- Query the “Symbl-Async-Request” table for the jobId, update its “jobId status”, get the conversationId, and return a 200 success response to the webhook

Note: If the query returns an empty result (for example, because the webhook arrived before the record was updated with the jobId), retry after a few seconds and then complete the update.

- If the returned “jobId status” from the webhook POST request is “completed”:

- Repeat “#1 – Serverless function trigger from step 2”

- Trigger the serverless function that manages Conversation API requests and pass it the conversationId

- If the returned “jobId status” from the webhook POST request is “failed”, check the file and logs to find out what went wrong, and share the conversationId or jobId with the Symbl team for further analysis.
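The webhook path (#2) can be sketched as a Lambda-style handler. This is a minimal sketch, assuming an API Gateway proxy event whose `body` is a JSON string with `jobId` and `status` fields; `store.findByJobId`, `store.updateStatus`, `dispatchNext` (re-runs #1) and `processConversation` (triggers Step 4) are hypothetical dependencies injected so the handler can be tested.

```javascript
// Hypothetical Step 3 (#2) webhook handler factory.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

function createWebhookHandler({ store, dispatchNext, processConversation, retries = 3, delayMs = 2000 }) {
  return async function handler(event) {
    const { jobId, status } = JSON.parse(event.body || '{}');

    // Retry the lookup: the webhook may arrive before #1 saved the jobId.
    let record = null;
    for (let attempt = 0; attempt <= retries && !record; attempt++) {
      if (attempt > 0) await sleep(delayMs);
      record = await store.findByJobId(jobId);
    }
    if (!record) {
      // Design choice: acknowledge anyway and rely on the Step 5 check.
      console.error(`No request record found for jobId ${jobId}`);
      return { statusCode: 200, body: '' };
    }

    await store.updateStatus(record.id, status);
    if (status === 'completed') {
      await dispatchNext(); // a slot is free: submit the next pending record
      await processConversation(record.conversationId); // Step 4
    } else if (status === 'failed') {
      console.error(`Job ${jobId} failed (conversationId ${record.conversationId})`);
    }
    return { statusCode: 200, body: JSON.stringify({ conversationId: record.conversationId }) };
  };
}
```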

Step 4: Create serverless function for getting the conversation analysis results

Once this serverless function is triggered with the conversationId, you can use the Conversation APIs to get the conversation’s Speech to Text, Analytics, Trackers, Topics and Summary, and, if you choose, store them in your database.
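Fetching several of these results can be done in parallel. This is a minimal sketch, assuming Node.js 18+ (global `fetch`) and Conversation API paths of the form `https://api.symbl.ai/v1/conversations/{conversationId}/{resource}`; the resource list below is an assumption to verify against the Symbl documentation, and depending on your API version some results (such as Summary) may need to be enabled when the Async job is submitted. `getConversationResults` is a hypothetical name.

```javascript
// Hypothetical Step 4 helper: fetches several Conversation API results.
const CONVERSATIONS_URL = 'https://api.symbl.ai/v1/conversations';
// Assumed resource paths; check them against the Conversation API reference.
const RESULT_PATHS = ['messages', 'topics', 'analytics', 'trackers-detected', 'summary'];

async function getConversationResults(conversationId, accessToken, fetchImpl = fetch) {
  const entries = await Promise.all(
    RESULT_PATHS.map(async (path) => {
      const res = await fetchImpl(`${CONVERSATIONS_URL}/${conversationId}/${path}`, {
        headers: { Authorization: `Bearer ${accessToken}` },
      });
      if (!res.ok) throw new Error(`GET ${path} failed with status ${res.status}`);
      return [path, await res.json()];
    })
  );
  // e.g. { messages: {...}, topics: {...}, ... } ready to store per table
  return Object.fromEntries(entries);
}
```

Each key of the returned object maps naturally to one of the Conversation-Intelligence-<names> tables from Step 1.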

In addition, for a quick Summary UI of the conversation, you can use the same conversationId with the Business-UX table to style the Symbl Pre-Built UI and embed it in an iframe in your website or app.

Step 5: Create a cron job to check that jobId statuses are as expected (Optional)

The Symbl system is resilient and stable, but as with any system it is best to verify that jobs reported through webhook POST requests are not stuck. For this purpose, you can implement another function that a cron job runs every hour to check the jobId status:

- Query all active jobs (scheduled, in_progress) and calculate whether the time elapsed since each request was made exceeds ~40% of the recording length, which is roughly the time Symbl should need to complete the analysis of the recorded file.

- Check the jobId status of any job that exceeds that threshold
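The hourly check can be sketched as a filter plus a status lookup. This is a minimal sketch, assuming each request record holds `status`, `durationSeconds` and `submittedAtMs` (hypothetical field names), Node.js 18+ (global `fetch`), and a Symbl job status endpoint at `https://api.symbl.ai/v1/job/{jobId}` returning a `status` field; the optional `graceMs` parameter is an addition not described above and defaults to 0.

```javascript
// Hypothetical Step 5 helpers for the hourly cron job.
const ACTIVE_STATUSES = new Set(['scheduled', 'in_progress']);

// Active jobs running longer than `ratio` of the recording length (~40%).
function findStuckJobs(requests, nowMs, ratio = 0.4, graceMs = 0) {
  return requests.filter((r) => {
    if (!ACTIVE_STATUSES.has(r.status)) return false;
    const elapsedMs = nowMs - r.submittedAtMs;
    return elapsedMs > r.durationSeconds * 1000 * ratio + graceMs;
  });
}

// Asks Symbl for the current status of one job.
async function getJobStatus(jobId, accessToken, fetchImpl = fetch) {
  const res = await fetchImpl(`https://api.symbl.ai/v1/job/${jobId}`, {
    headers: { Authorization: `Bearer ${accessToken}` },
  });
  if (!res.ok) throw new Error(`Job status request failed with status ${res.status}`);
  const { status } = await res.json();
  return status;
}
```

If the job status differs from the stored one, the cron function can apply the same update logic as the webhook in Step 3.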

Next steps: Try it yourself!

So far we have built together, in a few simple steps, an infrastructure that submits your contact center recordings to Symbl for conversation analysis with speaker separation, so every message, follow-up, action item, etc. has its own speaker owner.

But there is more: you can send insights such as follow-ups and action items to the relevant assignee or to a downstream system. With the conversationId you can unlock a Summary based on the conversation context to send at the end of the call, sentiment analysis at the message and topic level to find negative or positive sections in the call flow, speaker ratio analytics to understand whether all participants got to express themselves, and more.

Explore the Symbl Conversation APIs and Pre-Built UI to get an instant Summary UI of multiple conversation elements in one place.

This conversation intelligence integration can be extended to deeper analysis use cases such as coaching and training, conversation summaries, data aggregation, indexing for search, and more.