Enhanced Conversation Intelligence, Data Control, and Optimized Performance, with Peace of Mind for Proprietary Data Protection

Overview

At Symbl.ai, we offer an on-prem deployment option for our Nebula large language model (LLM), so organizations can run it within their own infrastructure. This deployment includes the Summary feature, which provides four types of conversation summaries: short, long, list, and topic-based. By deploying Symbl.ai on-premises, organizations gain greater control over data protection, can more easily meet regulatory compliance requirements, and can customize the solution to fit their specific needs.

Key Advantages

Symbl.ai’s on-prem summary model stands out due to its unique strengths and advantages:

Optimized Performance

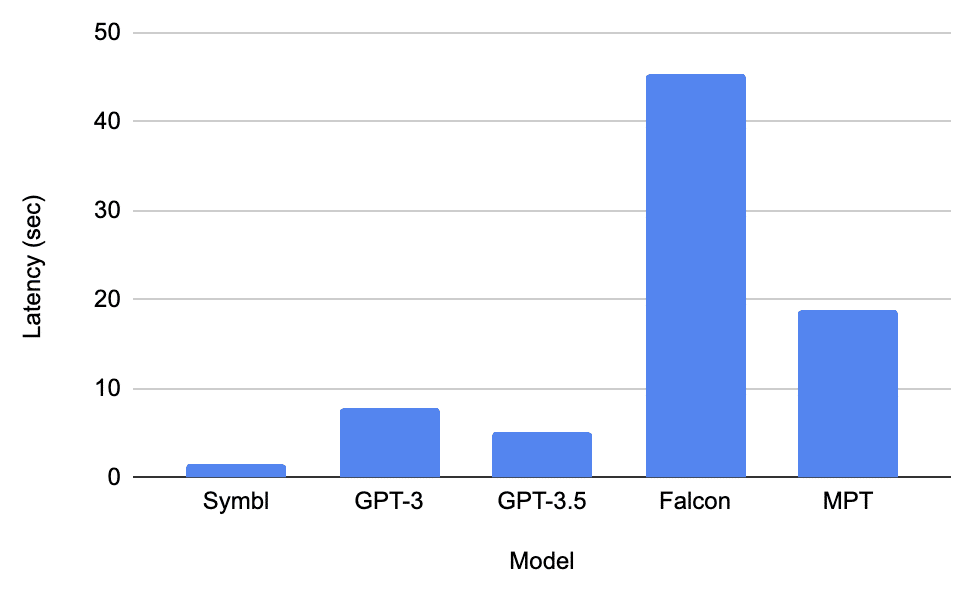

Our language model is based on a state-of-the-art transformer architecture designed specifically for summarizing long, multi-party conversations across various domains. We have optimized our models to deliver LLM-level quality while using 115 times fewer parameters than GPT-3. This optimization improves efficiency and processing speed, resulting in average latencies that are 5 times lower than those of comparable models.

Supports Long Conversations

Our custom transformer-based model architecture addresses the high-variance problem inherent in human conversations. This design keeps performance consistent even at longer sequence lengths, so you can process multi-party conversation transcripts up to 3 hours long, whereas comparable mainstream models can process only up to about 30 minutes of conversation.

| Model | Max Conversation Length (approx.) |
| --- | --- |
| Symbl | 180 mins (3 hours) |
| GPT-3 | 15 mins |
| GPT-3.5 | 30 mins |
| Falcon | 15 mins |
| MPT | 15 mins |

Cost-effective

The Symbl.ai on-prem summary deployment makes cost-effective use of hardware. You can deploy our summarization model on a single instance of a lower-cost GPU, costing as little as a few dollars per day, while still processing up to thousands of conversations daily. This results in an effective hardware cost of a fraction of a cent per conversation.
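
The per-conversation figure follows from dividing the daily GPU cost by the daily conversation volume. The short Python sketch below works through that arithmetic. The inputs (5 USD per day and 2,000 conversations per day) are illustrative assumptions chosen to match "a few dollars" and "thousands"; they are not Symbl.ai pricing or benchmark figures, and the function name is hypothetical.

```python
def cost_per_conversation(gpu_cost_per_day_usd: float, conversations_per_day: int) -> float:
    """Return the hardware cost in USD attributed to one conversation."""
    if conversations_per_day <= 0:
        raise ValueError("conversations_per_day must be positive")
    return gpu_cost_per_day_usd / conversations_per_day


# Illustrative inputs only (assumptions, not published figures).
daily_gpu_cost_usd = 5.00
daily_conversations = 2000

cost_usd = cost_per_conversation(daily_gpu_cost_usd, daily_conversations)
print(f"{cost_usd * 100:.2f} cents per conversation")  # prints "0.25 cents per conversation"
```

Substituting your own GPU price and expected volume gives a rough estimate for your environment; it covers hardware only and leaves out licensing, storage, and operations costs.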

Data Protection

Our on-prem deployment gives you greater control over data protection. By deploying Symbl.ai on-premises, you keep sensitive information within your own infrastructure. This level of control helps you safeguard against unintended data access, data leaks, and data breaches.

Secure, Resilient, and Scalable

Our solution follows security best practices and does not require root user access. We provide secure containerization, while you are responsible for securing your infrastructure. The Symbl.ai container includes built-in health checks and logging mechanisms, so you can monitor the solution’s health and resilience. These mechanisms help the system remain robust and stable even during high-demand scenarios. Additionally, our container-based deployment architecture supports horizontal scaling, allowing you to handle increased workloads efficiently while maintaining performance.
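
As a rough illustration of how a deployment script might consume a container health check, the Python sketch below polls a probe until it reports healthy or a retry budget runs out. Everything specific here is an assumption: the text above does not describe how the Symbl.ai container exposes its health checks, so the URL in the comment and the names `http_ok` and `wait_until_healthy` are hypothetical. The demo at the end uses a stub probe so that it runs without a container.

```python
import time
import urllib.request
from typing import Callable


def http_ok(url: str, timeout: float = 2.0) -> bool:
    """Return True if a GET request to `url` answers with a 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except OSError:
        # URLError, HTTPError, timeouts and refused connections are all OSError subclasses.
        return False


def wait_until_healthy(probe: Callable[[], bool], attempts: int = 5, delay_s: float = 1.0) -> bool:
    """Call `probe` up to `attempts` times, sleeping `delay_s` between failed tries."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            time.sleep(delay_s)
    return False


# Demo with a stub probe that starts reporting healthy on its third call.
# Against a real container you might pass something like
#   lambda: http_ok("http://localhost:8080/health")   # hypothetical endpoint
calls = {"count": 0}

def stub_probe() -> bool:
    calls["count"] += 1
    return calls["count"] >= 3

print(wait_until_healthy(stub_probe, attempts=5, delay_s=0.0))  # prints "True"
```

On an orchestration platform you would normally let the platform's own probe mechanism do this polling; a script like this is mainly useful for smoke tests after a deployment.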

Conversation Intelligence

The on-prem deployment of Symbl.ai provides organizations with four types of conversation summaries:

- Short Summary: Provides a concise overview of a conversation, capturing the key points and highlights. It is useful for quick reference and provides a snapshot of the conversation’s main themes.

- Long Summary: Offers a detailed and comprehensive overview of the conversation, including deeper analysis and nuanced insights. It enables a thorough understanding of the conversation.

- List Summary: Presents the conversation’s key points in a bullet-point format, making it easy to scan and extract important information. It provides a structured summary that allows for quick reference and review.

- Topic-Based Summary: Organizes conversation insights based on the main topics or subjects discussed. It helps identify the primary themes covered in the conversation, making it easier to navigate and focus on specific areas of interest.

Getting Started

To get started with the on-prem deployment of Symbl.ai, organizations should ensure the following:

- Host Environment: Set up a host environment with reliable internet access to facilitate communication with the Symbl server. This communication is necessary for access token generation, usage reporting, and downloading container updates.

- Container Orchestration Platform: Choose a container orchestration platform that aligns with your organization’s infrastructure and requirements. Popular choices include Kubernetes and Docker Swarm, which provide the necessary tools for deploying and managing containers.

- Hardware Requirements: Ensure that your infrastructure meets the hardware requirements for deploying and running Symbl.ai containers. This includes having sufficient CPU, memory, and storage resources to support the containerized deployment.
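
The checklist above can be partly automated with a small pre-flight script. The Python sketch below, which uses only the standard library, checks CPU count, free disk space, and whether the `docker` and `kubectl` command-line tools are on the PATH. The thresholds are placeholder assumptions, since the actual hardware requirements are not stated here, and the function name `preflight` is hypothetical. Memory is left out because the standard library has no portable call for it.

```python
import os
import shutil

# Placeholder thresholds (assumptions); replace with the requirements given for your deployment.
MIN_CPUS = 4
MIN_FREE_DISK_GB = 50


def preflight(path: str = ".", min_cpus: int = MIN_CPUS,
              min_free_disk_gb: float = MIN_FREE_DISK_GB) -> dict:
    """Return a dict of basic host checks, each mapped to True or False."""
    cpus = os.cpu_count() or 0  # cpu_count() may return None
    free_gb = shutil.disk_usage(path).free / 1024 ** 3
    return {
        "cpu_ok": cpus >= min_cpus,
        "disk_ok": free_gb >= min_free_disk_gb,
        "docker_found": shutil.which("docker") is not None,
        "kubectl_found": shutil.which("kubectl") is not None,
    }


for check, passed in preflight().items():
    print(f"{check}: {'ok' if passed else 'missing or insufficient'}")
```

A failed check is only a hint: for example, `kubectl` may legitimately be absent on a host that uses Docker Swarm instead of Kubernetes.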

Next Steps

To explore the on-prem deployment options available with Symbl.ai, contact Sales.