With millions of monthly downloads and a thriving community of over 100,000 developers, LangChain has rapidly emerged as one of the most popular tools for building large language model (LLM) applications.

In this guide, we explore LangChain’s vast capabilities and take you through how to build a question-and-answer (QA) application – using Symbl.ai’s proprietary LLM Nebula as its underlying language model.

What is LangChain?

LangChain is an LLM chaining framework available in Python and JavaScript that streamlines the development of end-to-end LLM applications. It provides a comprehensive library of building blocks that are designed to seamlessly connect – or “chain” – together to create LLM-powered solutions that can be applied to a large variety of use cases.

Why Use LangChain to Build LLM Applications?

Some of the benefits of LangChain include:

- Expansive Library of Components: LangChain features a rich selection of components that enable the development of a diverse range of LLM applications.

- Modular Design: LangChain is designed in a way that makes it easy to swap out the components within an application, such as its underlying LLM or an external data source, which makes it ideal for rapid prototyping.

- Enables the Development of Context-Aware Applications: one of the aspects at which LangChain excels is facilitating the development of context-aware LLM applications. Through the use of prompt templating, document retrieval, and vector stores, LangChain allows you to add context to the input passed to an LLM to produce higher-quality output. This includes the use of proprietary data, domain-specific information that an LLM hasn’t been trained on, and up-to-date information.

- Large Collection of Integrations: LangChain includes over 600 (and growing) built-in integrations with a wide variety of tools and platforms, making it easier to incorporate an LLM application into your existing infrastructure and workflows.

- Large Community: as one of the most popular LLM frameworks, LangChain boasts a large and active user base. This has resulted in a wealth of resources, such as tutorials and coding notebooks, that make it easier to get started with LangChain, as well as forums and groups to assist with troubleshooting.

Just as importantly, LangChain’s developer community consistently contributes to the ecosystem, submitting new classes, features, and functionality. For instance, though officially available as a Python or JavaScript framework, the LangChain community has submitted a C# implementation.

LangChain Components

With a better understanding of the advantages it offers, let us move on to looking at the main components within the LangChain framework.

- Chains: the core concept of LangChain, a chain allows you to connect different components together to perform different tasks. As well as a collection of ready-made chains tailored for specific purposes, you can create your own chains that form the foundation of your LLM applications.

- Document Loaders: classes that allow you to load text from external documents to add context to input prompts. Document loaders streamline the development of retrieval augmented generation (RAG) applications, in which the application adds context from an external data source to an input prompt before passing it to the LLM – allowing it to generate more informed and relevant output.

LangChain features a range of out-of-the-box loaders for specific document types, such as PDFs, CSVs, and SQL, as well as for widely used platforms like Wikipedia, Reddit, Discord, and Google Drive. - Text Splitters: divide large documents, e.g., a book or extensive research paper, into chunks so they can fit into the input prompt. Text splitters overcome the present limitations of context length in LLMs and enable the use of data from large documents in your applications.

- Retrievers: collect data from a document or vector store according to a given text query. LangChain contains a selection of retrievers that correspond to different document loaders and types of queries.

- Embedding Models: these convert text into vector embeddings, i.e., numerical representations that an LLM can process efficiently. Embeddings capture different features of text from documents that allow an LLM to compare their semantic meaning with the user’s input query.

- Vector Stores: used to store documents for efficient retrieval after they have been converted into embeddings. The most common type of vector store is a vector database, such as Pinecone, Weaviate, or ChromaDB.

- Indexes: separate data structures associated with vector stores and documents that pre-sort, i.e., index, embeddings for faster retrieval.

- Memory: modules that allow your LLM applications to draw on past queries and responses to add additional context to input prompts. Memory is especially useful in chatbot applications, as it allows the bot to access previous parts of its conversation(s) with the user to craft more accurate and relevant responses.

- Prompt Templates: allow you to precisely format the input prompt that is passed to an LLM. They are particularly useful for scenarios in which you want to reuse the same prompt outline but with minor adjustments. Prompt templates allow you to construct a prompt from dynamic input, i.e., from input provided by the user, retrieved from a document, or derived from an LLM’s prior generated output.

- Output Parsers: allow you to structure an LLM’s output in a format that’s most useful or presentable to the user. Depending on their design, LLMs can generate output in various formats, such as JSON or XML, so an output parser allows you to traverse the output, extract the relevant information, and create a more structured representation.

- Agents: applications that can autonomously carry out a given task using the tools it is assigned (e.g., document loaders, retrievers, etc.) and use an LLM as its reasoning – or decision-making – engine. LangChain’s strength in loading data from external data sources enables you to provide agents with more detailed, contextual task instructions for more accurate results.

- Models: wrappers that allow you to integrate a range of LLMs into your application. LangChain features two types of models: LLMs, which take a string as input and return a string, and chatbots, which take a sequence of messages as input and return messages as output.

How to Build a QA Bot: A Step-By-Step Implementation

We are now going to explore the capabilities of LangChain by building a simple QA application with Nebula LLM.

Our application will use a prompt template to send initial input to the LLM. The model’s response will then feed into a 2nd prompt template, which will also be passed to the LLM. However, instead of making separate calls to the LLM to achieve this, we will simply construct a chain that will execute all the actions with a single call.

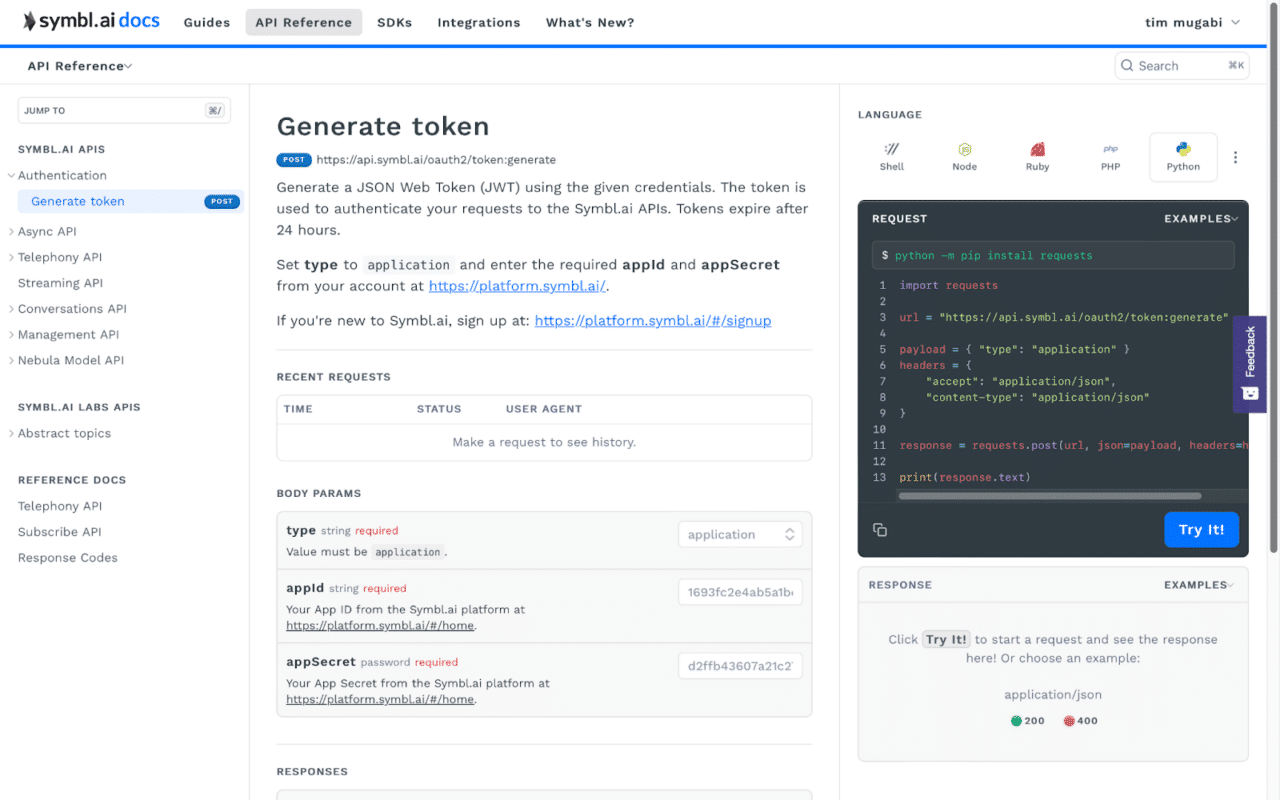

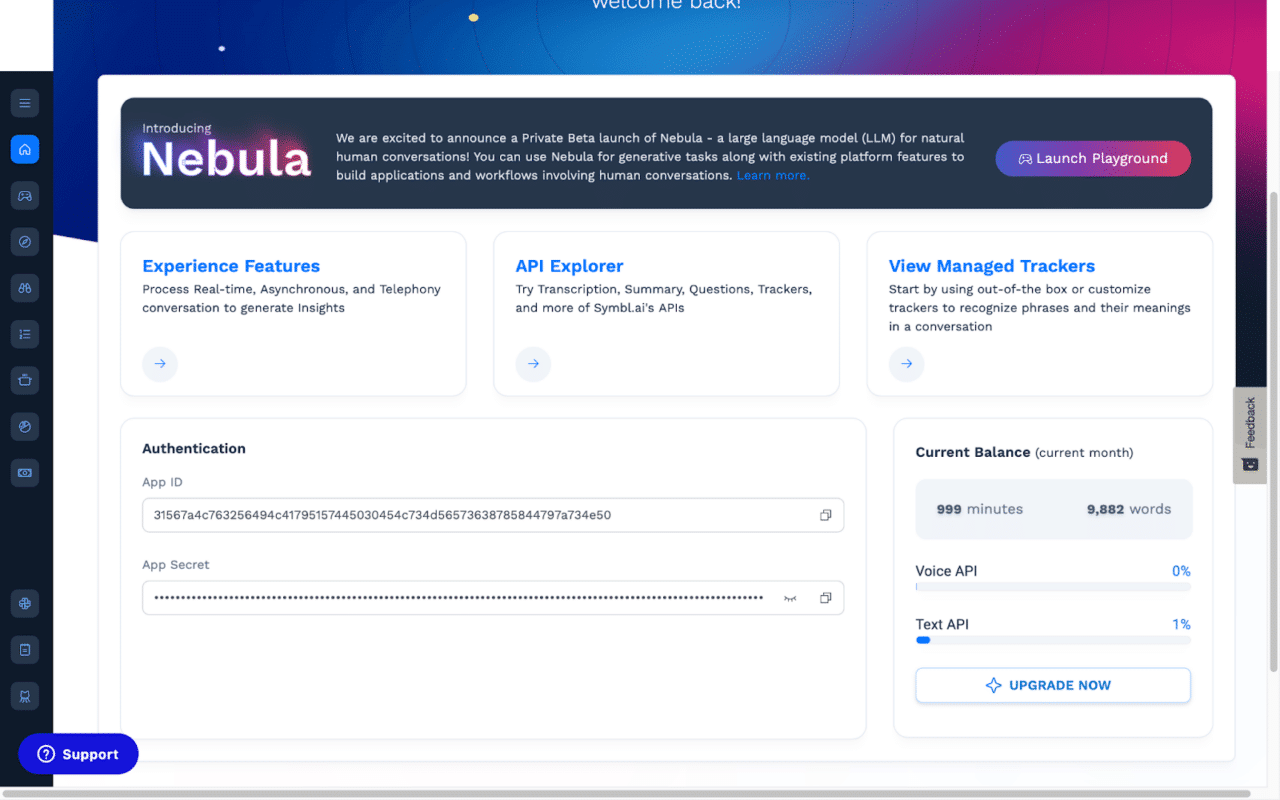

Additionally, to access Nebula’s API, you will need an API key, which you can obtain by signing up to the Symbl.ai platform and using your App ID and App Secret.

Setting Up Your Environment

First, you need to set up your development environment by installing LangChain. There are three options depending on what you intend to use the framework for:

install langchain: the bare minimum requirementsinstall langchain [llms]: to include all the modules required to integrate common LLMsinstall langchain [all]: to include all the modules required for all integrations.

While we do not require the dependencies required for all integrations, we do want those related to LLMs, so we are going with option 2 as below:

The following code will install the requisite libraries:

# For Python Version < 3

pip install langchain [llms]

# For Python Version 3 and above

pip3 install langchain [llms]

Loading the LLM

With your development environment correctly configured, the next step is loading our LLM of choice – which, in this case, is Nebula LLM.

To use Nebula LLM, we are first going to leverage LangChain’s extensibility and create a custom LLM wrapper: extending LangChain’s LLM class to create our own NebulaLLM class. Our custom wrapper includes a _call method, which sends an initial system prompt (to establish context for the LLM) and the user’s input prompt – and returns Nebula’s response. This will enable us to call Nebula LLM in LangChain in the same way as an OpenAI model or an LLM hosted on HuggingFace.

import requests

import json

from langchain.llms.base import LLM

from typing import Optional

class NebulaLLM(LLM):

def __init__(self, api_key: str):

self.api_key = api_key

self.url = "https://api-nebula.symbl.ai/v1/model/chat"

#Implement the class’ call method

def _call(self, prompt: str, stop: Optional[list] = None) -> str:

#Constructing the message to be sent to Nebula

payload = json.dumps({

"max_new_tokens": 1024,

"system_prompt": "You are a question and answering assistant. You are professional and always respond politely.",

"messages": [

{

"role": "human",

"text": prompt

}

]

})

#Headers for the JSON payload

headers = {

'ApiKey': self.api_key,

'Content-Type': 'application/json'

}

#POST request sent to Nebula, containing the model URL, #headers, and message, then assigned to response variable

response = requests.request("POST", url, headers=headers, data=payload)

return response['messages'][-1]['text']

#Property methods expected by LangChain

@property

def _identifying_params(self) -> dict:

return {"api_key": self.api_key}

@property

def _llm_type(self) -> str:

return "nebula_llm"

The two properties at the end of the code snippet are getter methods required by LangChain to manage the attributes of the class. In this case, they provide access to the Nebula instance’s API key and type.

Additionally, in the _call method, we passed an optional list, which is intended to contain a series of stop sequences that Nebula should adhere to when generating the response. However, in this case, the list is empty and is included to ensure compatibility with LangChain’s interface.

Creating Prompt Templates

Next, we are going to create the prompt templates that will be passed to the LLM and specify the format of its input.

The first prompt template takes a location as an input and will be passed to Nebula LLM. It will then generate a response containing the most famous dish from said location that will be used as part of the second prompt passed to Nebula LLM. The second prompt then takes the dish returned from the initial prompt and generates a recipe.

from langchain import PromptTemplate

#Creating the first prompt template

location_prompt = PromptTemplate(

input_variables=["location"],

template = "What is the most famous dish from {location}? Only return the name of the dish",

)

#Creating the second prompt template

dish_prompt = PromptTemplate(

input_variables=["dish"],

template="Provide a short and simple recipe for how to prepare {dish} at home",

)

Creating the Chains

Finally, we are going to create a chain that takes a series of prompts and runs them in sequences in a single function call. For your example, we will use LLMChain and a SimpleSequentialChain that combines both chains and runs them in sequence.

As well as the two chains, we’ve also passed the sequential chain the argument Verbose = True, which will cause the chain will show its process and how it arrived at its output.

# Create the first chain

chain_one = LLMChain(llm=llm, prompt=location_prompt)

# Create the second chain

chain_two = LLMChain(llm=llm, prompt=dish_prompt)

# Run both chains with SimpleSequentialChain

overall_chain = SimpleSequentialChain(chains=[chain_one, chain_two], verbose=True)

final_answer = overall_chain.run("Thailand")

Note, that when calling SimpleSequentialChain, the order in which you pass the chains to the class is important. As chain_one determines the output for chain_two – it must come first.

Potential Use Cases for a QA bot

Here are a few ways that a question-and-answer LLM application could add value to your organization.

- Knowledge Base: through fine-tuning or RAG, you can supply a QA bot with a task or domain-specific domain to create a knowledge base.

- FAQ System: similarly, you can customize a QA bot to answer questions that are frequently asked by your customers. As well as addressing a customer’s query, the QA bot can direct them to the appropriate department for further assistance, if required. By delegating your FAQs to a bot, human agents have more availability for issues that require their expertise – and more customers can be served in less time.

- Recommendation Systems: alternatively, by asking pertinent questions as well as answering them, a QA bot can act as a recommendation system, guiding customers to the most suitable product or service from your range. This allows customers to find what they are looking for in less time, boosts conversion rates, and, through effective upselling, can increase the average revenue per customer (ARPC).

- Onboarding and Training Assistant: QA bots can be used to streamline your company’s onboarding process – making it more interactive and efficient. A well-designed question-and-answer LLM application can replace the need for tedious forms: taking answers to questions as input and asking the employee additional questions if they didn’t supply sufficient information. Similarly, it can be used to handle FAQs regarding the most crucial aspects of your company‘s policies and procedures.

Additionally, a QA bot can help with your staff’s ongoing professional development needs, allowing an employee to learn at their own pace. Through the quality of answers given by the user, the application can determine their rate of progress and supply training resources that match: providing additional material if the user appears to be struggling while glazing over concepts with which they are familiar.

Conclusion

In summary:

- LangChain is an LLM chaining framework available that enables the efficient development of end-to-end LLM applications

- Reasons to use LangChain to develop LLM applications include:

- An expansive library of components

- Modular design

- Enables the development of context-aware applications

- Large collection of integrations

- Large community

- The core LangChain components include:

- Chains

- Document loaders

- Text splitters

- Retrievers

- Embedding models

- Vector Stores

- Indexes

- Memory

- Prompt templates

- Output parsers

- Agents

- Models

- The steps for creating a QA bot with LangChain and Nebula include:

- Setting up your environment

- Loading the LLM

- Creating prompt templates

- Creating the chains

- Potential Use Cases for a QA bot include:

- Knowledge base

- FAQ system

- Recommendation systems

- Onboarding and training assistant

LangChain is a powerful and adaptable framework that provides everything you need to develop performant and robust LLM applications. We encourage you to develop your comfort with its ecosystem by going through the LangChain documentation, familiarizing yourself with the different components on offer, and better understanding which could be most applicable to your intended use case.

Additionally, to discover how Nebula LLM can automate a variety of customer service tasks and transform your company’s unstructured interaction data into valuable insights, trends, and analytics, visit the Nebula Playground and gain exclusive access to our innovative proprietary LLM.