Machine Learning Drift is a common phenomenon that occurs after a machine learning model is deployed to production. If it is not monitored closely and mitigated at the right time, it can adversely affect the overall performance of your model.

This article provides an overview of machine learning drift and its various types, and covers some practical techniques to detect and mitigate it.

What is Machine Learning Drift?

Machine learning and AI models are built on the assumption that historical data is an accurate representation of the future. But in a fast-changing world, this is rarely the case. The COVID-19 pandemic and the Russia-Ukraine war are two examples of unprecedented events that affected model predictions.

Drift is the phenomenon where a model’s performance degrades over time: the model performs noticeably worse in production than it did during training, and the decline can be gradual or sudden.

Types of Model Drift

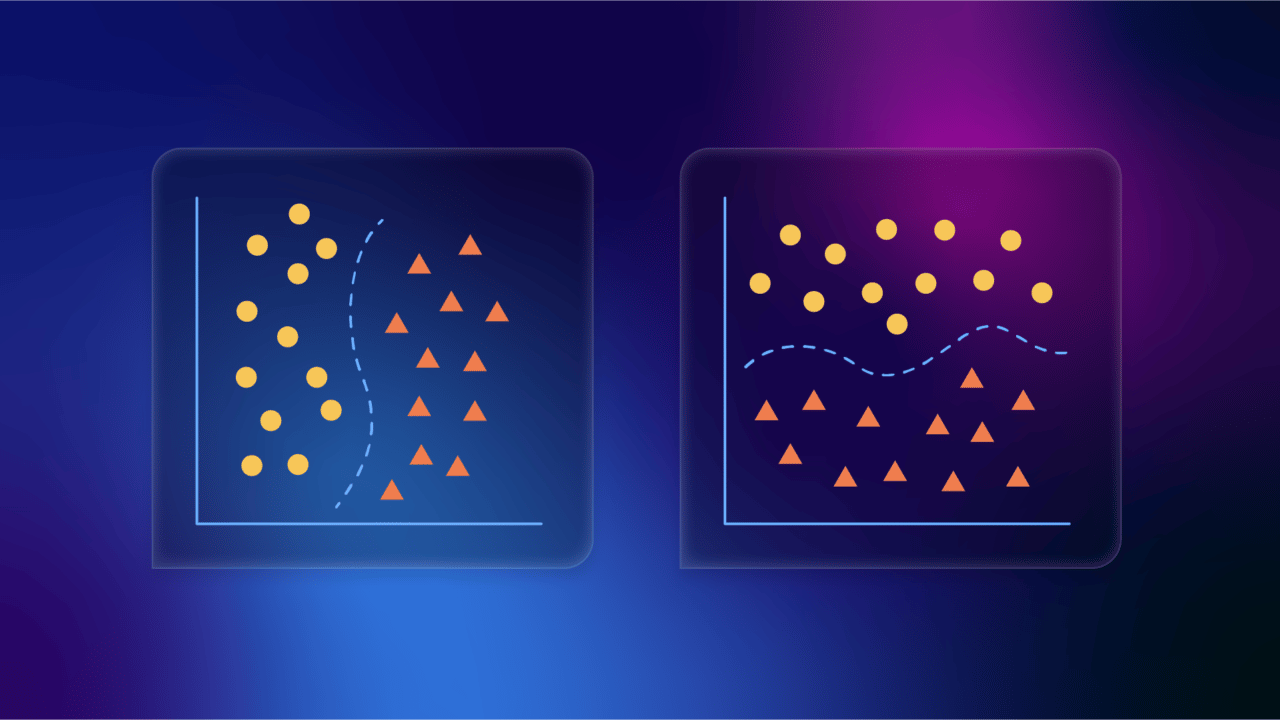

The two main types of Model Drift are as follows:

- Concept Drift: Concept Drift occurs when the relationship between the input and target variables changes, even though the input data itself may not have changed. A common cause is a change in user behavior. For example, the COVID-19 pandemic changed buyer behavior: people started purchasing more hand sanitizers and masks and spent less on travel. A consumer-focused model trained before the pandemic could not have predicted this behavior, so its accuracy decreased.

- Data Drift: Data Drift occurs when the statistical properties (the distribution) of the input or output data change.

Data drift can further be divided into two types:

Label Drift: This occurs when the distribution of the output data shifts. For example, suppose you build a model to predict whether an applicant should receive a credit card, and a much larger proportion of credit-worthy applicants starts showing up.

Feature Drift: This occurs when the distribution of the input data shifts. For example, in the same model, suppose one of the input variables is income and most applicants’ incomes increase or decrease.
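To make the difference between these types concrete, here is a minimal sketch on synthetic data. It assumes a hypothetical one-feature credit model: income (in thousands) is the only input, an applicant is credit-worthy if income exceeds a cutoff, and "training" simply picks the cutoff that best fits the training data. The helper names and all numbers are illustrative, not taken from a real dataset.

```python
import numpy as np

rng = np.random.default_rng(seed=0)


def make_data(n, income_mean, approval_cutoff):
    """Hypothetical toy data: income (in thousands) is the only feature, and an
    applicant is credit-worthy (label 1) if income exceeds approval_cutoff."""
    income = rng.normal(loc=income_mean, scale=10.0, size=n)
    label = (income > approval_cutoff).astype(int)
    return income, label


def fit_threshold(x, y):
    """'Train' a one-feature model: pick the cutoff that maximizes training accuracy."""
    candidates = np.sort(x)
    accuracies = [np.mean((x > c).astype(int) == y) for c in candidates]
    return candidates[int(np.argmax(accuracies))]


def accuracy(cutoff, x, y):
    return float(np.mean((x > cutoff).astype(int) == y))


# Training period: mean income 50k, credit-worthy above 50k.
x_train, y_train = make_data(2000, income_mean=50, approval_cutoff=50)
cutoff = fit_threshold(x_train, y_train)

scenarios = {
    "no drift": make_data(2000, income_mean=50, approval_cutoff=50),
    # Feature drift: incomes rise, but the income -> label relationship is unchanged.
    "feature drift": make_data(2000, income_mean=60, approval_cutoff=50),
    # Concept drift: same income distribution, but the relationship changes.
    "concept drift": make_data(2000, income_mean=50, approval_cutoff=60),
}

results = {}
for name, (x, y) in scenarios.items():
    results[name] = {
        "mean_income": float(x.mean()),
        "positive_rate": float(y.mean()),  # share of credit-worthy applicants
        "accuracy": accuracy(cutoff, x, y),
    }
    print(f"{name:14s} mean income={x.mean():5.1f}  "
          f"credit-worthy={y.mean():.2f}  accuracy={results[name]['accuracy']:.2f}")
```

In this toy setup, feature drift moves the income distribution while accuracy holds, because the income-to-label relationship is unchanged. Concept drift leaves the income distribution alone but changes the relationship, and accuracy falls sharply. The share of credit-worthy applicants also changes in both drifted scenarios, which is the label drift described above; in practice the types often occur together.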

Causes of Drift

- Changes in user behavior: User behavior commonly evolves, which changes the input data. This eventually shows up in the model’s performance.

- Biased data: Data Drift can occur as a result of bias in the input data. By “bias” I mean that your training data might favor one population over another. This can make the model biased as well, leading to inaccurate predictions.

- Training data is not an accurate representation: The data used to train the model may not accurately represent the data the model sees in production, which can also lead to Data Drift. For example, you might have trained your model on consumer data from the USA but launched the product in India; because users there have different patterns, you are likely to observe model deterioration.

Detecting Drift

The obvious way to detect drift is to monitor the model’s accuracy or another performance metric. However, in some cases this is not straightforward to calculate, for example when ground-truth labels arrive long after the predictions are made. In such cases you can compare data distributions instead; two common methods are described below:

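- Kolmogorov-Smirnov (K-S) test: The two-sample K-S test compares the distribution of a feature in the training data with its distribution in the post-training (production) data. The null hypothesis states that both samples come from the same distribution. If the test rejects the null hypothesis (for example, the p-value falls below a significance level such as 0.05), we can conclude that the data has drifted.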

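- Population Stability Index (PSI): PSI is another metric that can detect population changes over time. It bins a variable, compares the share of observations in each bin between the training (expected) and current (actual) data, and sums (actual% − expected%) × ln(actual% / expected%) over all bins. As a rule of thumb, PSI < 0.1 means no significant population change, 0.1 ≤ PSI < 0.2 indicates a moderate change worth investigating, and PSI ≥ 0.2 means a significant population change.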
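Both checks can be sketched in Python. This is a minimal sketch, not a production implementation: it assumes NumPy and SciPy are available, uses SciPy’s `scipy.stats.ks_2samp` for the two-sample K-S test, and implements PSI by hand with training-data deciles as bins. The helper names `ks_drift` and `psi`, the 0.05 significance level, and the `eps` floor for empty bins are illustrative choices.

```python
import numpy as np
from scipy.stats import ks_2samp


def ks_drift(train_values, prod_values, alpha=0.05):
    """Two-sample K-S test for one feature.
    Returns (statistic, p_value, drifted); drifted is True when the null
    hypothesis (both samples share one distribution) is rejected at level alpha."""
    result = ks_2samp(train_values, prod_values)
    return float(result.statistic), float(result.pvalue), bool(result.pvalue < alpha)


def psi(expected, actual, n_bins=10, eps=1e-6):
    """Population Stability Index of `actual` (production) vs `expected` (training).
    Bins are quantiles of the training data; eps keeps empty bins out of log(0)."""
    expected = np.asarray(expected, dtype=float)
    actual = np.asarray(actual, dtype=float)
    # Interior cut points at training quantiles (deciles when n_bins=10).
    cuts = np.quantile(expected, np.linspace(0, 1, n_bins + 1)[1:-1])

    def bin_shares(values):
        # Bin index 0..n_bins-1; values outside the training range land in the outer bins.
        idx = np.searchsorted(cuts, values, side="right")
        shares = np.bincount(idx, minlength=n_bins) / len(values)
        return np.clip(shares, eps, None)

    e, a = bin_shares(expected), bin_shares(actual)
    return float(np.sum((a - e) * np.log(a / e)))


# Example on synthetic incomes: production incomes shifted up by half a standard deviation.
rng = np.random.default_rng(0)
train_income = rng.normal(50, 10, 5000)
prod_income = rng.normal(55, 10, 5000)
print(ks_drift(train_income, prod_income))
print(psi(train_income, prod_income))
```

In practice you would run both checks per feature, comparing a training snapshot with a recent production window. The K-S test suits continuous features, and with very large samples it flags even tiny shifts that may not matter for the model, which is one reason to look at the K-S statistic or PSI alongside the p-value.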

Dealing with Drift

Drift is inevitable, so it is better to be prepared. The following mechanisms help you detect it early, which gives you enough time to mitigate it.

- Monitor the model: The model’s performance is bound to change over time. This does not necessarily mean that the relationship between the input variables and the output has changed; it may simply mean that the model was not trained on this particular segment of data, so it does not know how to handle it. Hence, monitoring the model is necessary. Companies can develop their own monitoring frameworks or integrate tools available today, such as Amazon SageMaker Model Monitor and Deepchecks.

- Training and test data should be consistent: Make sure the training and test data come from comparable time periods and similar locations, so that both reflect the population the model will serve.

- Retraining and redeployment: Sometimes the only option is to retrain the model, typically on more recent data, and redeploy it, so it is imperative to be prepared for that scenario. When retraining, it can help to analyze feature importance and add or remove the features that are the leading cause of drift.

- Data monitoring: Sudden changes in the incoming data are one of the causes of data drift, so it is vital to have mechanisms in place that flag such issues. They also help you trace a drift issue back to its source, which speeds up detection and remediation.

- Unboxing the black box: Explainable AI and responsible AI are gaining popularity because they help you understand the model’s output. Having such frameworks in place helps you get to the root of the issue much faster when the model’s performance shifts. Open source frameworks you can leverage include AI Explainability 360 (AIX360) by IBM and the What-If Tool by Google, to name a few. There are also popular techniques such as LIME (Local Interpretable Model-agnostic Explanations).

- Data Quality Checks: It’s crucial to have Data Quality Checks in place. Sometimes drift is caused by deteriorating data quality, and bias in the data can also cause model performance to decay over time.

- Developing Statistical Metrics: Model performance metrics can be used to track the performance of supervised learning models. Metrics such as the ROC curve and the area under it (AUC) can be put in place and tracked over time.
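Tracking a performance metric such as AUC over time, as the monitoring and statistical-metrics points above suggest, can be sketched as follows. This minimal sketch assumes ground-truth labels eventually become available for each production window; `auc`, `monitor_auc`, and the 0.05 tolerance are hypothetical names and choices, not the API of any particular monitoring tool. AUC is computed from its probabilistic definition so the example needs only NumPy; `sklearn.metrics.roc_auc_score` computes the same quantity.

```python
import numpy as np


def auc(labels, scores):
    """ROC AUC as the probability that a randomly chosen positive is scored
    higher than a randomly chosen negative (ties count half).
    Builds an n_pos x n_neg matrix, so it is only meant for modest windows."""
    labels = np.asarray(labels)
    scores = np.asarray(scores, dtype=float)
    pos = scores[labels == 1]
    neg = scores[labels == 0]
    if len(pos) == 0 or len(neg) == 0:
        return float("nan")  # AUC is undefined unless both classes are present
    diff = pos[:, None] - neg[None, :]
    return float(np.mean(diff > 0) + 0.5 * np.mean(diff == 0))


def monitor_auc(windows, baseline_auc, tolerance=0.05):
    """Compute AUC for each (labels, scores) window and flag windows whose AUC
    falls more than `tolerance` below the baseline measured at training time.
    A NaN AUC never triggers an alert, because NaN comparisons are False."""
    report = []
    for i, (labels, scores) in enumerate(windows):
        window_auc = auc(labels, scores)
        alert = window_auc < baseline_auc - tolerance
        report.append((i, window_auc, alert))
    return report
```

Pass one `(labels, scores)` pair per window, for example per week, with `baseline_auc` measured on validation data at training time. A window whose AUC drops past the tolerance is a signal to investigate with distribution checks such as K-S or PSI and, if needed, to retrain.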

Drift can seem like a challenging problem to solve. With the proper mechanisms in place, however, it can be detected early and dealt with as it occurs.

Connect with Supreet Kaur on LinkedIn.