This article demonstrates how to use Symbl.ai’s Conversation Intelligence capabilities in tandem with a live video calling app built with the Vonage Video API.

Vonage is a global leader in cloud communications with its wide range of offerings such as Unified Communications, Contact Centers, Communications APIs, and more. One of the offerings in their Communications APIs is the “Vonage Video API”, which allows users to program and customize live video applications.

Video calls saw a huge spike during the pandemic, but they had already been trending upward for several years as one of the preferred ways for people to connect. With this increase in adoption, it has become even more critical for developers of video platforms to move beyond simply facilitating calls and help users actually connect. They need to enable conversations.

Symbl.ai provides out-of-the-box Conversation Intelligence capabilities that analyze the spoken conversations that happen over video applications. One of these capabilities is live transcription, or speech-to-text, which converts spoken conversation into text that you can display to users. Enabling this feature makes your app more accessible, for example to users who are deaf or hard of hearing.

Combining Vonage Video and Symbl.ai’s Conversation Intelligence, we demonstrate how to build an accessible, engaging video calling application.

How Symbl.ai’s Features Enhance Vonage Video Calling

Before we start building, let’s have a quick look at the available features. This video app integrated with Symbl.ai’s Real-time APIs provides the following out-of-the-box conversational intelligence features.

- Live Closed Captioning: Live closed captioning is enabled by default and provides a real-time transcription of your audio content.

- Live Sentiment Graph: The live sentiment graph shows how the sentiment of the conversation evolves over the course of the call.

- Live Conversation Insights: Real-time detection of conversational insights such as actionable phrases, questions asked, and follow-ups planned.

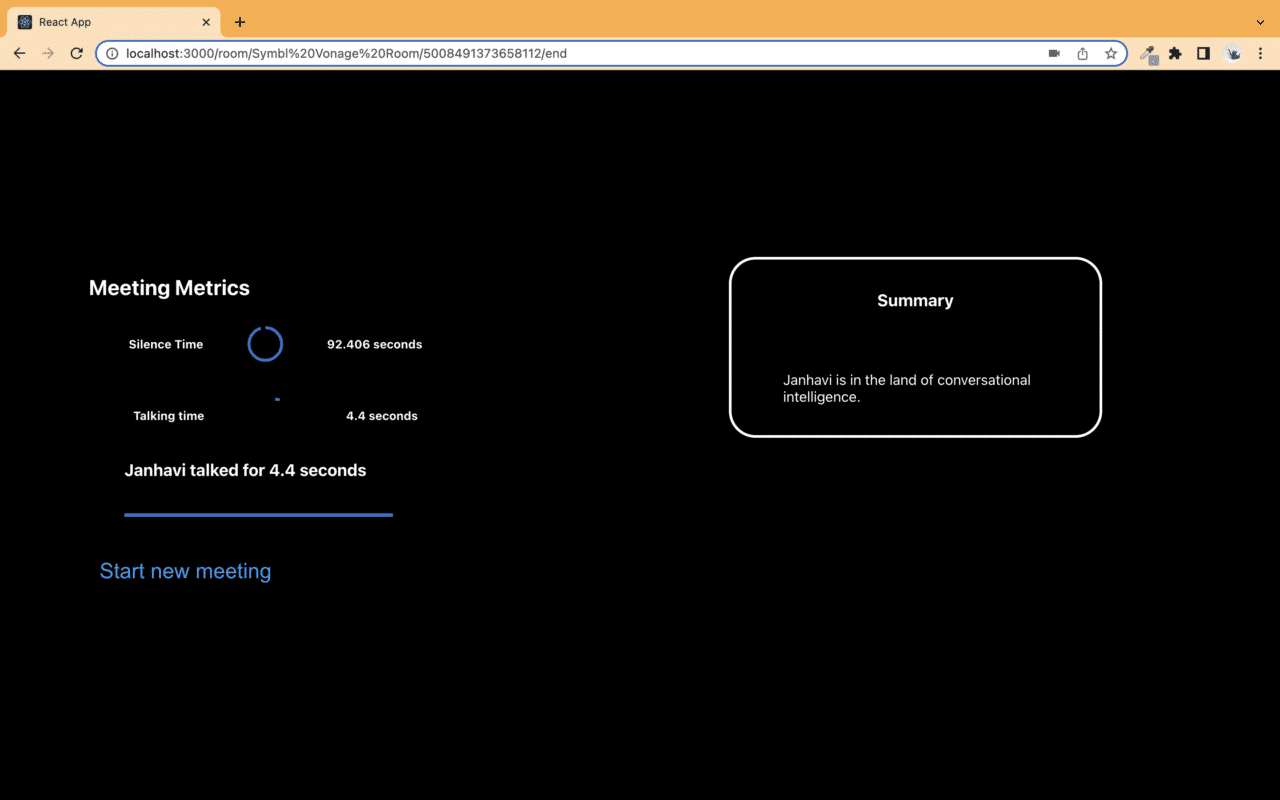

- Post-call Speaker Analytics: After the call ends, the app will show speaker talk-times, ratios, overlaps and silences, and talking speeds per speaker.

- Post-call Automatic Summary: Using Symbl.ai’s Summarisation capabilities, you can generate a full-conversation summary at the end of the call.

- Video conferencing with real-time video and audio: This supports real-time use cases in which the video, the audio, and the results from Symbl.ai’s back end all need to be available in real time. It can be integrated directly via the browser or a server.

- Enable/Disable camera: After connecting your camera, you can enable or disable it at any time.

- Mute/unmute mic: After connecting your microphone, you can mute or unmute it at any time.

To see the end result in action, you can watch this video:

Vonage Video Calling Architecture

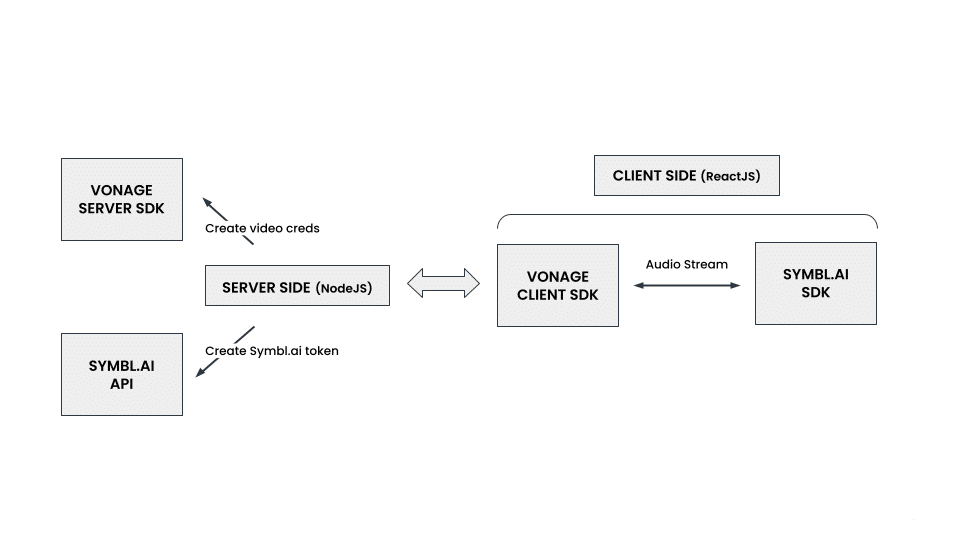

The basic video call app has two major parts: a backend server and a client-side web application. The backend server serves authentication tokens for Vonage services to the client side of the application. The client side then handles the video call sessions, users, and media using the Vonage Client SDK.

To use Symbl.ai, we need an access token from Symbl.ai and the user’s audio stream, sent over a WebSocket. In the demo, we re-use the same backend server to get the Symbl.ai access token.
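As a rough illustration of the token step, the sketch below builds the request a backend could send to the token endpoint (the same URL that appears as `SYMBL_API` in the sample .env file). The request body shape (`type`, `appId`, `appSecret`), the `accessToken` response field, and the helper names `buildSymblTokenRequest` and `fetchSymblToken` are assumptions made for illustration, not code taken from the demo repository; check them against Symbl.ai’s authentication documentation.

```javascript
// Builds the HTTP request for a Symbl.ai access token.
// ASSUMPTION: the body shape { type, appId, appSecret } follows Symbl.ai's
// public authentication docs; verify it before relying on it.
function buildSymblTokenRequest(
  appId,
  appSecret,
  url = "https://api.symbl.ai/oauth2/token:generate"
) {
  return {
    url,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ type: "application", appId, appSecret }),
    },
  };
}

// Hypothetical helper: fetchImpl defaults to the global fetch
// (available in browsers and in Node.js 18+).
async function fetchSymblToken(appId, appSecret, fetchImpl = fetch) {
  const { url, options } = buildSymblTokenRequest(appId, appSecret);
  const response = await fetchImpl(url, options);
  if (!response.ok) {
    throw new Error(`Token request failed with status ${response.status}`);
  }
  // ASSUMPTION: the response JSON carries the token in `accessToken`.
  const { accessToken } = await response.json();
  return accessToken;
}
```

Keeping this call on the backend means the App Secret never has to be shipped to the browser; the client only ever receives the short-lived access token.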

For the intelligence, we hook into the client-side application and stream audio to Symbl.ai over WebSocket. The conversational intelligence generated is sent back to the client app and then displayed.
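In the demo, Symbl.ai’s Web SDK takes care of capturing and streaming the audio, so you do not need to write this yourself. To give a feel for what streaming audio over a WebSocket typically involves, here is a minimal sketch that converts Web Audio samples (floats between -1 and 1) into 16-bit PCM before sending them as a binary frame. The assumption that the receiving service expects 16-bit linear PCM, and the helper names `floatTo16BitPcm` and `sendAudioChunk`, are illustrative and not taken from the demo.

```javascript
// Converts Web Audio samples (floats in [-1, 1]) to 16-bit signed PCM.
function floatTo16BitPcm(samples) {
  const pcm = new Int16Array(samples.length);
  for (let i = 0; i < samples.length; i++) {
    // Clamp first so out-of-range samples do not wrap around.
    const clamped = Math.max(-1, Math.min(1, samples[i]));
    // Negative values scale by 32768, positive values by 32767,
    // so the full signed 16-bit range is used.
    pcm[i] = clamped < 0 ? clamped * 0x8000 : clamped * 0x7fff;
  }
  return pcm;
}

// Hypothetical usage: `socket` is an already-open WebSocket (or any object
// with a compatible send method); the chunk goes out as one binary frame.
function sendAudioChunk(socket, samples) {
  socket.send(floatTo16BitPcm(samples).buffer);
}
```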

For a more in-depth explanation of the application architecture, see the README of the application.

How to Build the Demo Vonage Video Calling App

This app was built using the Vonage Client Web SDK, Symbl.ai’s Web SDK (v0.6.0), and Create React App.

Pre-requisites

For this project, you need to first have the following ready:

- Symbl.ai Account: Sign up for the Symbl.ai platform and gather your credentials (App ID, App Secret). Symbl.ai offers a free plan that is more than sufficient for this project.

- Vonage Account: You need a Vonage Video API account. If you don’t have one, you can sign up for free here.

- Node.js v10+: Install a current release of Node.js; v10 is the minimum.

- npm v6+: npm version 6 or later is required.
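If you want to confirm the Node.js requirement before installing anything, a small pre-flight script along these lines can help. This is only a sketch: the helper name `meetsMinimumMajor` is made up for this example, and the minimum version is taken from the prerequisites above.

```javascript
// Minimum major version, taken from the prerequisites list.
const MIN_NODE_MAJOR = 10;

// Returns true when a version string such as "14.17.0" or "v14.17.0"
// has a major component greater than or equal to minMajor.
function meetsMinimumMajor(version, minMajor) {
  const major = Number.parseInt(
    String(version).replace(/^v/, "").split(".")[0],
    10
  );
  return Number.isInteger(major) && major >= minMajor;
}

// process.versions.node holds the running Node.js version without a "v" prefix.
if (!meetsMinimumMajor(process.versions.node, MIN_NODE_MAJOR)) {
  console.error(
    `Node.js v${MIN_NODE_MAJOR}+ is required, found v${process.versions.node}`
  );
  process.exitCode = 1;
}
```

For npm, running `npm --version` in a terminal prints the installed version.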

Integration Steps

Step 1: Set up your code

On your local machine, clone the demo code by running the following command:

git clone https://github.com/nexmo-se/symblAI-demo.git

Once cloned, navigate into the root folder of the code.

cd symblAI-demo

From the root folder, install the node modules using:

npm install

*Note: The full list of dependencies can be found in the package.json file.

Step 2: Set your credentials in the .env file

To run the project in the development environment, create a .env.development file. To run it in the production environment, create a .env.production file instead.

Using the .env.example file as a template, populate your .env file with the following variables:

    VIDEO_API_API_KEY="1111"
    VIDEO_API_API_SECRET="11111"
    SERVER_PORT=5000
    SYMBL_API="https://api.symbl.ai/oauth2/token:generate"
    appSecret="1111111"
    appId="11111"

    # Client Env Variables
    REACT_APP_PALETTE_PRIMARY=
    REACT_APP_PALETTE_SECONDARY=
    REACT_APP_API_URL_DEVELOPMENT=
    REACT_APP_API_URL_PRODUCTION=
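A missing or blank variable is an easy mistake to make at this step. The sketch below shows one way a server could check for the required values at startup. The variable names come from the list above; the helper `missingEnvVars` and the decision to treat all six server-side variables as required are assumptions for illustration, not behavior of the demo itself.

```javascript
// Server-side variable names, taken from the sample .env contents.
// ASSUMPTION: all six are treated as required here.
const REQUIRED_SERVER_VARS = [
  "VIDEO_API_API_KEY",
  "VIDEO_API_API_SECRET",
  "SERVER_PORT",
  "SYMBL_API",
  "appId",
  "appSecret",
];

// Returns the names of required variables that are unset or blank.
function missingEnvVars(env, required = REQUIRED_SERVER_VARS) {
  return required.filter((name) => {
    const value = env[name];
    return typeof value !== "string" || value.trim() === "";
  });
}

// Report problems at startup rather than on the first failed request.
const missing = missingEnvVars(process.env);
if (missing.length > 0) {
  console.warn(`Missing environment variables: ${missing.join(", ")}`);
}
```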

Step 3: Run the code

Dev

To run the project in development mode, in one terminal/console window, start the backend server with the following command:

npm run server-dev

In another terminal/console window, start the frontend application by running:

npm start

Open http://localhost:3000 to view the application in the browser.

Production

To run the project in production mode, first build the bundle with the following command:

npm run build

Then start the backend server by running:

npm run server-prod

Open http://localhost:5000 to view the application in the browser.

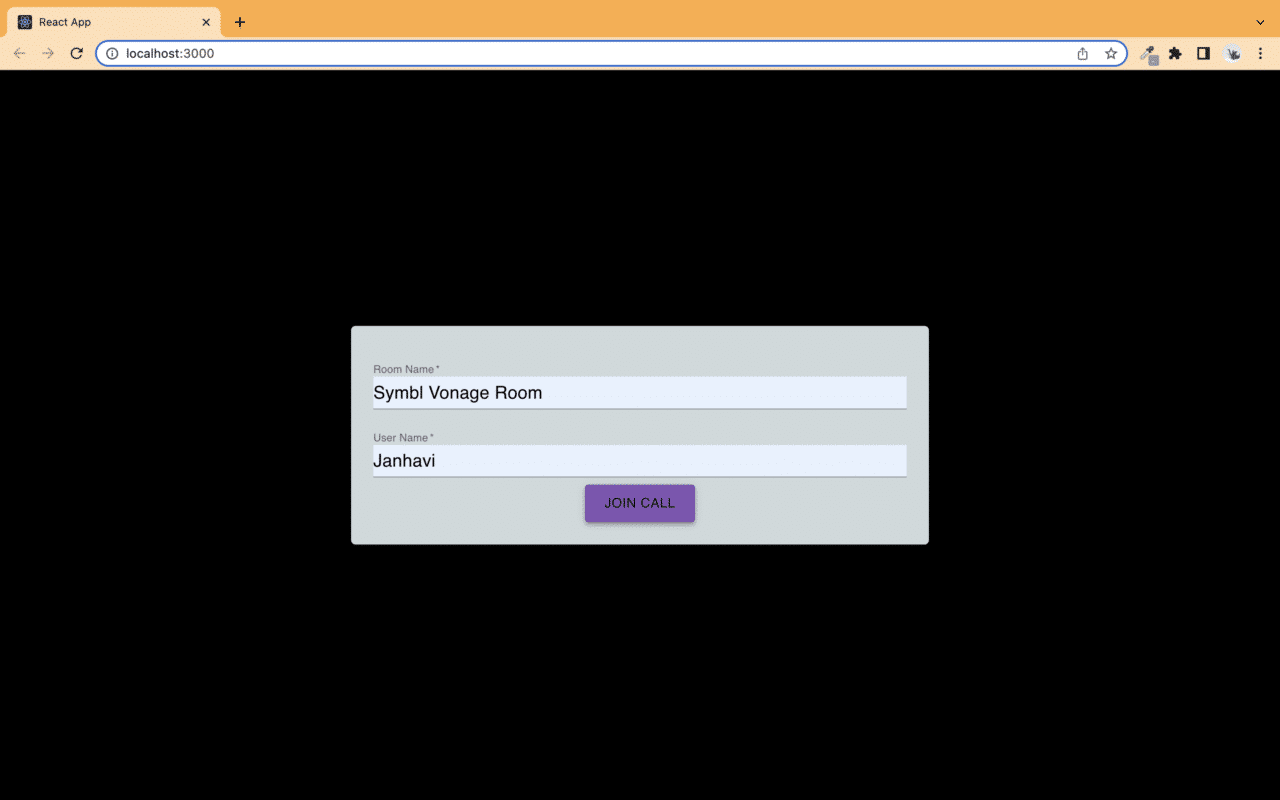

The landing page is the first screen you see.

Enter a room name and your name and click “Join Call.”

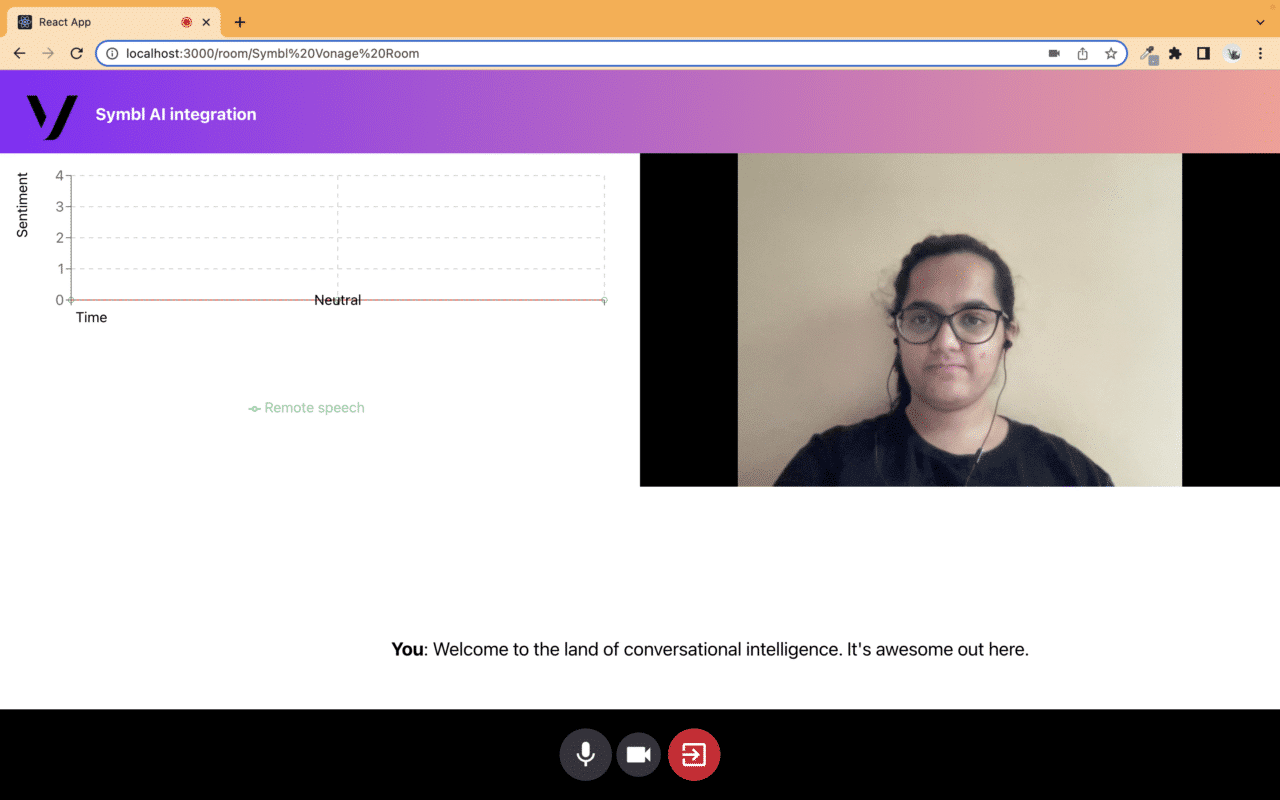

You now enter the meeting room, where you can see yourself on video and a live sentiment graph on the left of the screen. As the conversation progresses, the graph updates to show the change in sentiment over the course of the call. At the bottom of the screen, you also see live captions. You can use the mic and camera buttons to toggle your connected audio and video devices.

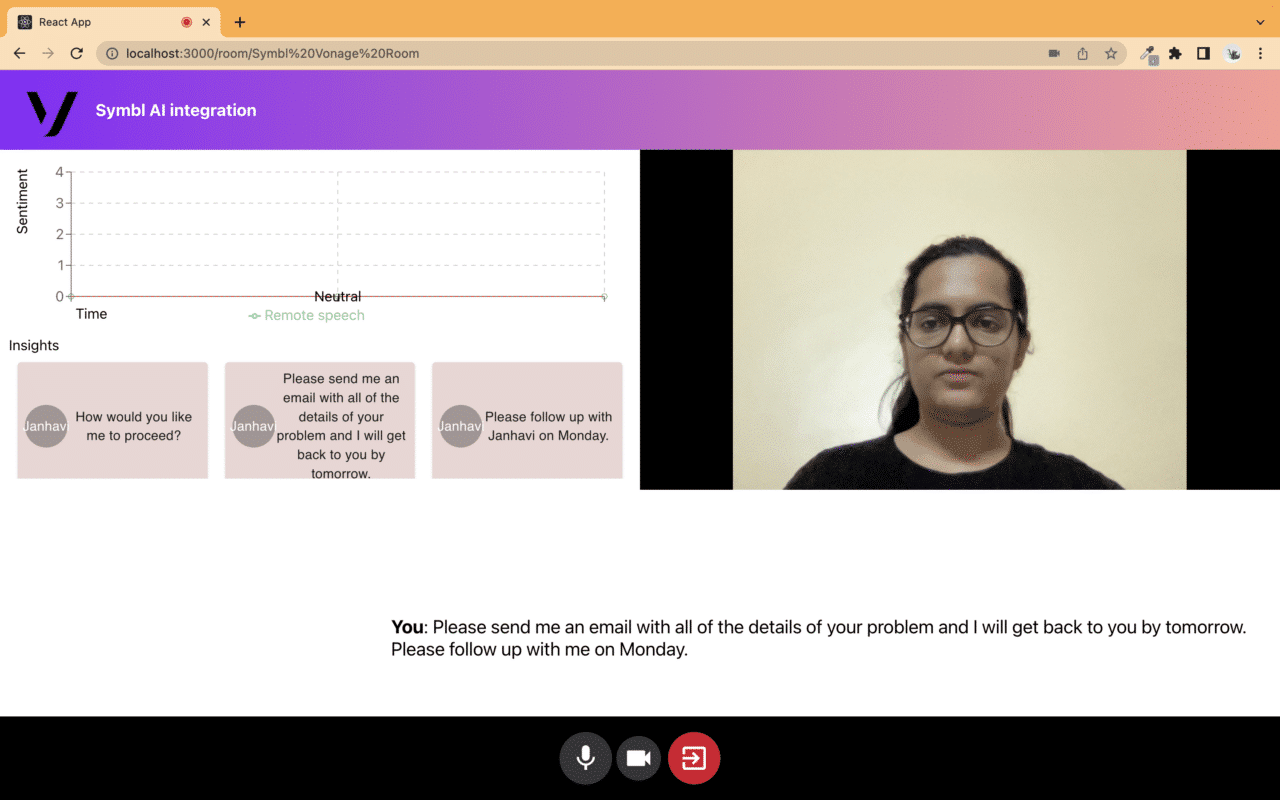

Symbl.ai also picks up questions, action items, and follow-ups, which appear under the sentiment graph on the left.

Once you are done with the call, click the red exit call button to go to the final page. This page loads the speaker analytics and a summary of your call.

*Note: It may take a few seconds for the metrics and summary to be generated and loaded.
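Because post-call results are not ready the instant the call ends, a client usually has to retry for a short while. The sketch below shows a generic polling helper for that situation. It is not the demo’s actual code: `pollUntilReady` and its options are hypothetical names, and the task you pass in (for example, a function that requests the summary and returns `null` until it is available) is left to you.

```javascript
// Retries an async task until it resolves to a value other than null or
// undefined, waiting delayMs between attempts. Throws once attempts run out.
async function pollUntilReady(task, { attempts = 5, delayMs = 1000 } = {}) {
  for (let attempt = 1; attempt <= attempts; attempt++) {
    const result = await task(attempt);
    if (result != null) {
      return result;
    }
    // Only wait if another attempt is coming.
    if (attempt < attempts) {
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
  throw new Error(`Result not ready after ${attempts} attempts`);
}
```

A call such as `pollUntilReady(() => loadSummary(conversationId), { attempts: 10 })` would then wait for the summary, where `loadSummary` stands in for whatever function your app uses to request it.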

API Reference

Find comprehensive information about our Streaming APIs in the API Reference section, and about our Web SDK in the SDKs section.

You can find more information on the Vonage Client SDK in their documentation.

Symbl.ai Insights with Vonage: Next Steps

To learn more about the different integrations Symbl.ai offers, go to our Integrations Directory.

Community

This guide is actively developed, and we’d love to hear from you! If you liked our integration guide, please star our repo!

Please feel free to create an issue or open a pull request with your questions, comments, suggestions, and feedback, or reach out to us at [email protected], through our Community Slack or our forum.