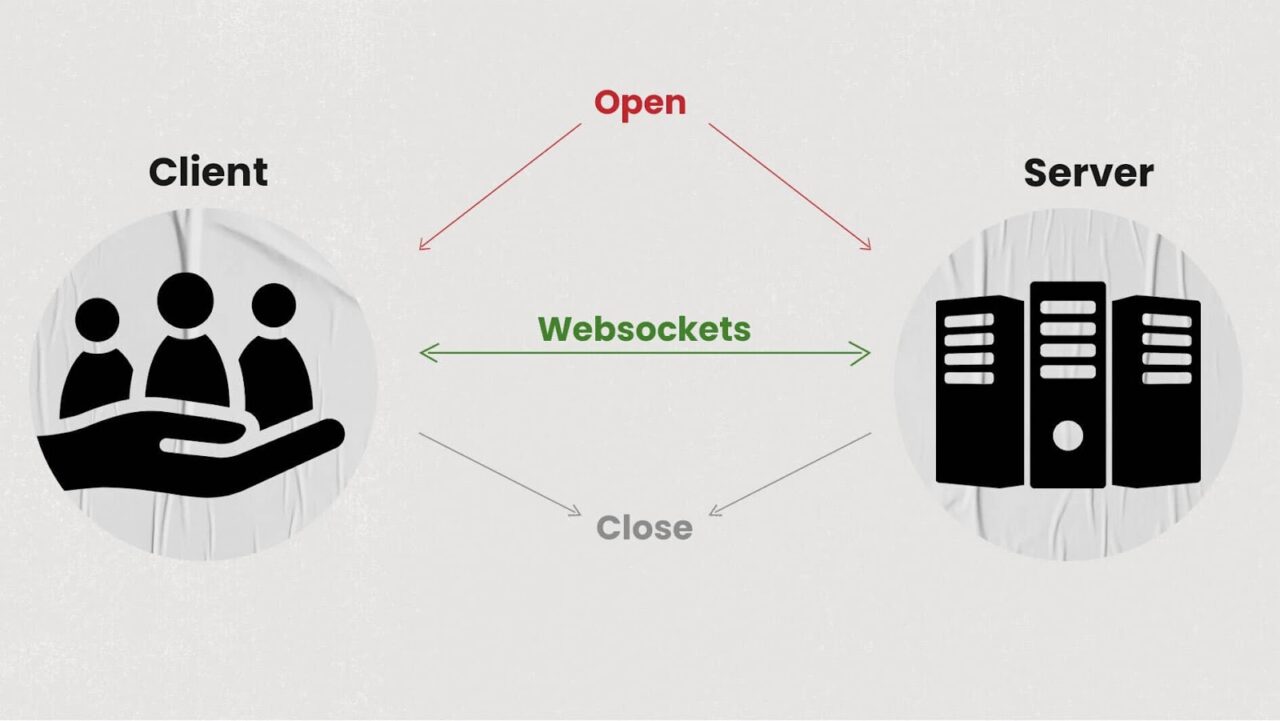

WebSocket is a protocol for establishing two-way communication streams over the Internet. By using an API for WebSockets, you can capture conversation intelligence insights in real time. Symbl.ai’s Streaming API integrates directly into JavaScript WebSocket software, giving you access to conversational AI during live conversations.

WebSocket is a protocol that Real-Time Communications (RTC) or Real-Time Engagement (RTE) companies use for establishing two-way or multi-member voice, video, messaging, or broadcast streams over the Internet. Many of the most famous RTC/RTE companies, such as Twilio, Vonage, or Agora, use WebSockets for their mobile or browser Software Development Kits (SDKs). For example, Zoom employs WebSockets as its underlying technology to transfer voice and video at the same time to all participants on the call.

Adding real-time insights to your app with an API

If you have a WebSocket stream set up with one of those companies but don’t know how to record a call, perform live transcription, or run algorithms on the results of a conversation, then you need to use an API. An API for WebSockets enables developers to build voice, video, chat, or broadcast applications, with the ability to capture insights in real time using artificial intelligence. One option is Symbl.ai’s Telephony API. However, Symbl.ai’s WebSocket-based Streaming API integrates directly into JavaScript software, so you can capture insights while the conversation is still happening.

WebSockets for Symbl.ai

WebSockets facilitate communications between clients and servers in real time, without the connection suffering from sluggish, high-latency, and bandwidth-intensive HTTP API calls. A WebSocket endpoint is specified as ws (unencrypted) or wss (encrypted) and is a persistent bi-directional communication channel without the overhead of HTTP residuals, such as headers, cookies, or artifacts. Here’s Symbl.ai’s API for WebSockets: wss://api.symbl.ai/v1/realtime/

Because WebSockets are full-duplex and can keep state synchronized with millisecond accuracy, many APIs model their traffic as WebSocket messages from publishers to subscribers. TokBox, for example, names its channel software’s methods entirely from this perspective, using subscriber and publisher method names.
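As a small illustration of the ws/wss distinction, the sketch below parses an endpoint with Node.js’s built-in URL class and reports whether it is encrypted. The helper name describeEndpoint is hypothetical and not part of any Symbl.ai SDK; the Symbl.ai URL is the one quoted above, and the localhost URL is only an example.

```javascript
// Minimal sketch: classify a WebSocket endpoint by its scheme.
// describeEndpoint is a hypothetical helper, not part of any SDK.
function describeEndpoint(endpoint) {
  const { protocol, host, pathname } = new URL(endpoint);
  if (protocol !== 'ws:' && protocol !== 'wss:') {
    throw new Error(`Not a WebSocket endpoint: ${endpoint}`);
  }
  // wss means the connection runs over TLS; ws is plain text.
  return { encrypted: protocol === 'wss:', host, path: pathname };
}

console.log(describeEndpoint('wss://api.symbl.ai/v1/realtime/'));
console.log(describeEndpoint('ws://localhost:8765'));
```

A check like this can be useful before opening a connection, for instance to refuse unencrypted endpoints outside of local development.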

Best Practices

Although you can already find a detailed guide on best practices for audio integrations with Symbl, here’s the 101 on adopting Symbl.ai’s real-time WebSocket API.

- Separate channels per person: Make sure the audio for each person is on a separate channel or over separate WebSocket connections for optimal results.

- Lossless codecs where possible: Use FLAC or LINEAR16 if bandwidth is not an issue. If it is, fall back to Opus, AMR_WB, or Speex.
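The two practices above can be sketched as a small planning helper. Everything here is illustrative: chooseEncoding, buildSpeakerConnections, the bandwidthLimited flag, and the connectionId naming scheme are hypothetical, and the encoding strings simply mirror the codec names listed above rather than values confirmed against the Symbl.ai API.

```javascript
// Illustrative sketch of the two practices above; all names are hypothetical.

// Prefer a lossless codec unless bandwidth is limited.
function chooseEncoding({ bandwidthLimited = false } = {}) {
  return bandwidthLimited ? 'OPUS' : 'LINEAR16';
}

// Plan one connection per speaker so each person's audio stays on its own channel.
function buildSpeakerConnections(speakers, options) {
  return speakers.map((name, index) => ({
    connectionId: `speaker-${index + 1}`, // assumed naming scheme
    speaker: name,
    encoding: chooseEncoding(options),
  }));
}

console.log(buildSpeakerConnections(['Alice', 'Bob'], { bandwidthLimited: true }));
```

Each entry in the returned list would correspond to one WebSocket connection, opened separately for that speaker.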

How to use Symbl’s JavaScript WebSockets API

To achieve live transcription with Symbl.ai’s JavaScript WebSocket API, all you have to do is:

- Set up your account

- Configure the WebSocket endpoint

- Run the code

- Transcribe in real-time

We’ll go through each step.

1. Set up

Register for an account at Symbl (https://platform.symbl.ai/) and grab both your appId and your appSecret. With these, authenticate using either a cURL command or Postman to generate the token you use as your x-api-key. Here’s an example with cURL:

    curl --request POST "https://api.symbl.ai/oauth2/token:generate" \
      -H "accept: application/json" \
      -H "Content-Type: application/json" \
      -d "{ \"type\": \"application\", \"appId\": \"your_app_id\", \"appSecret\": \"your_app_secret\" }"
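The same request can be made from JavaScript. The sketch below assumes Node.js 18 or later, where fetch is available globally, and assumes the response JSON carries the token in an accessToken field, so check the response you actually receive. buildTokenRequest and fetchAccessToken are hypothetical helper names, not part of a Symbl.ai SDK.

```javascript
// Sketch of the token request in JavaScript; helper names are hypothetical.
const TOKEN_URL = 'https://api.symbl.ai/oauth2/token:generate';

// Build the same POST request that the cURL command sends.
function buildTokenRequest(appId, appSecret) {
  return {
    url: TOKEN_URL,
    options: {
      method: 'POST',
      headers: {
        accept: 'application/json',
        'Content-Type': 'application/json',
      },
      body: JSON.stringify({ type: 'application', appId, appSecret }),
    },
  };
}

// fetchImpl defaults to the global fetch (Node.js 18+); pass your own to test offline.
async function fetchAccessToken(appId, appSecret, fetchImpl = fetch) {
  const { url, options } = buildTokenRequest(appId, appSecret);
  const response = await fetchImpl(url, options);
  if (!response.ok) {
    throw new Error(`Token request failed with status ${response.status}`);
  }
  const data = await response.json();
  return data.accessToken; // assumed field name
}

// Usage (makes a real network call, so it is left commented out):
// fetchAccessToken('your_app_id', 'your_app_secret').then((token) => console.log(token));
```

Passing the fetch implementation as a parameter keeps the request-building logic easy to exercise without contacting the API.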

2. Configure the WebSocket endpoint

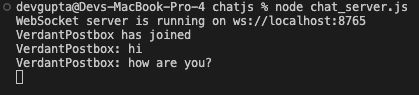

Now create a file named chat_server.js and implement the server. You can refer to this blog post, which covers the code files in more detail. It is written in Python, but the logic is similar.

    const WebSocket = require('ws');

    // Start a WebSocket server on port 8765.
    const server = new WebSocket.Server({ port: 8765 });

    // Clients that are currently connected, stored as { ws, username }.
    let connected = [];

    // Number of clients that have joined so far; used to pick the next username.
    let joinCount = 0;

    const usernameGenerator = {
      0: "VerdantPostbox",
      1: "PrudentNecktie",
      2: "BreezyCocktail",
      3: "HelpfulPoodle",
      4: "ReassuringSpeedboat"
    };

    server.on('connection', (ws) => {
      // Fall back to a numbered guest name once the predefined names run out.
      const username = usernameGenerator[joinCount] || `Guest${joinCount + 1}`;
      joinCount += 1;
      console.log(`${username} has joined`);
      connected.push({ ws, username });

      ws.on('message', (message) => {
        const formattedMessage = `${username}: ${message}`;
        console.log(formattedMessage);

        // Broadcast message to all other clients
        connected.forEach(({ ws: client }) => {
          if (client !== ws && client.readyState === WebSocket.OPEN) {
            client.send(formattedMessage);
          }
        });
      });

      ws.on('close', () => {
        // Drop the client from the list so it no longer receives broadcasts.
        connected = connected.filter(client => client.ws !== ws);
        console.log(`${username} left`);
      });
    });

    console.log('WebSocket server is running on ws://localhost:8765');

Next, create a file named chat_client.js and implement the client:

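    const WebSocket = require('ws');
    const readline = require('readline');

    // Read chat input from the terminal.
    const rl = readline.createInterface({
      input: process.stdin,
      output: process.stdout
    });

    const ws = new WebSocket('ws://localhost:8765');

    ws.on('open', () => {
      console.log('Connected to the chat server');
      rl.setPrompt('');
      rl.prompt();

      // Send each line typed by the user to the server.
      rl.on('line', (line) => {
        ws.send(line);
        rl.prompt();
      });
    });

    ws.on('message', (message) => {
      // Recent versions of ws deliver messages as Buffers, so convert to text before printing.
      console.log(message.toString());
    });

    ws.on('error', (err) => {
      // Without this handler, a failed connection would crash the process.
      console.error(`Connection error: ${err.message}`);
    });

    ws.on('close', () => {
      console.log('Disconnected from the server');
      rl.close();
    });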

3. Run the code

Before running the code, make sure you have all the necessary dependencies installed. You can use npm to install the ws library for WebSocket support in Node.js. Run the following command in your project directory:

    npm install ws

Now start the server with Node.js:

    node chat_server.js

Then, in a second terminal, start one or more clients:

    node chat_client.js

4. Transcribe in real-time

To enable real-time transcription, integrate Symbl.ai’s WebSocket API into the server. This involves sending audio data through the WebSocket connection, where Symbl.ai processes it and returns transcription results in real-time. You can further extend the existing message event to handle audio streams and output transcriptions in the same way messages are handled in the example.
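One way to extend the message event is sketched below. It is not Symbl.ai’s official client: the start_request shape, the recognition_result message type, and the punctuated.transcript field are assumptions about the Streaming API’s message format and should be checked against the current documentation. buildStartRequest, routeClientMessage, and extractTranscript are hypothetical helpers, and symblSocket stands for a second WebSocket that your server opens to Symbl.ai with the token from step 1.

```javascript
// Sketch only: message shapes are assumptions, helper names are hypothetical.

// First message sent to Symbl.ai after the socket opens, describing the audio.
function buildStartRequest(speakerName) {
  return JSON.stringify({
    type: 'start_request',
    config: {
      speechRecognition: { encoding: 'LINEAR16', sampleRateHertz: 16000 },
    },
    speaker: { name: speakerName },
  });
}

// Extension of the chat server's 'message' handler: binary frames are treated
// as audio and forwarded to Symbl.ai, while text frames stay chat messages.
// ws version 8 and later passes (data, isBinary) to 'message' listeners.
function routeClientMessage(data, isBinary, symblSocket, broadcast) {
  if (isBinary) {
    symblSocket.send(data);
  } else {
    broadcast(data.toString());
  }
}

// Pull the transcript out of a message received from Symbl.ai, or return null
// when the message is not a recognition result.
function extractTranscript(raw) {
  let parsed;
  try {
    parsed = JSON.parse(raw.toString());
  } catch (err) {
    return null; // ignore anything that is not JSON
  }
  if (!parsed || parsed.type !== 'message' || !parsed.message) return null;
  const result = parsed.message;
  if (result.type !== 'recognition_result' || !result.punctuated) return null;
  return result.punctuated.transcript;
}

// Wiring inside chat_server.js might look like this (left commented out):
// ws.on('message', (data, isBinary) => routeClientMessage(data, isBinary, symblSocket, broadcast));
// symblSocket.on('message', (raw) => {
//   const transcript = extractTranscript(raw);
//   if (transcript) console.log(transcript);
// });
```

Keeping the three helpers free of network calls means the routing and parsing can be checked with a fake socket before any audio is streamed.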

Conclusion

By following the steps outlined in this guide, you’ve learned how to set up a WebSocket connection, configure it to handle real-time data exchange, and integrate it with Symbl.ai’s API for live transcription. This setup demonstrates the powerful capabilities of WebSockets in enabling real-time conversation intelligence, providing a seamless, low-latency communication channel that’s perfect for applications needing immediate insights from live conversations.

With these foundational pieces in place, you can now build sophisticated real-time applications, adding features like live transcription, sentiment analysis, and more. Whether you’re working on a customer support platform, a real-time collaboration tool, or any other application requiring instant communication and analysis, Symbl.ai’s WebSocket API offers a flexible and powerful solution. Go ahead, take this knowledge, and start innovating in the space of real-time engagement!