Hello again! If you just read "Help! I Want to Make My Apps Conversation Aware!", this post is the second in the series aimed at helping you integrate conversation processing into your applications. It focuses on running code on your local laptop that mines contextual data from an audio source. We will walk through example code that interacts with the APIs and discuss how to modify or write code to achieve more complex results and interactions.

Prerequisites and Setup

If you haven’t done this already, you will need to set up a development environment to run these applications. Thankfully, the documentation team has done an excellent job providing instructions to help you; we will focus on using Node.js for our environment.

Why Node.js?

Many video and audio streaming platforms use Node, so it is a natural fit for these examples. Just a reminder: you will need the App ID and Secret values from the Symbl platform to run these programs, so don’t forget to grab your free account.

Let’s Get Started

The Getting Started Samples repo on GitHub is the best place to help us navigate the APIs, and since we are using a public repo, everyone will have access to the code discussed here. Several pull requests have merged to support this content, but this PR provides examples for each type of API in the platform and an easy way of running them. We won’t cover every API call in the repository, but we’ll cover a couple that can serve as a blueprint.

To install and configure everything needed, visit the install guide on the repository. Alternatively, in a terminal, run the following and replace the APP_ID, APP_SECRET, and SUMMARY_EMAIL in the .env file afterward:

git clone https://github.com/symblai/getting-started-samples.git

cd getting-started-samples

npm install

cp .env.default .env
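Before running anything, it can help to confirm that the three values were actually filled in. The following is a minimal sketch, assuming the .env values have already been loaded into process.env (how the samples load them is not shown here). The helper name missingEnvVars is hypothetical and not part of the repository.

```javascript
// Sketch: report which of the expected settings are still empty.
// The variable names come from the .env file described above; the helper
// name `missingEnvVars` is hypothetical and not part of the repository.
const REQUIRED_VARS = ['APP_ID', 'APP_SECRET', 'SUMMARY_EMAIL'];

function missingEnvVars(env, required = REQUIRED_VARS) {
  // A variable counts as missing when it is unset or only whitespace.
  return required.filter((name) => !env[name] || env[name].trim() === '');
}

// Example usage (assumes the .env values are already in process.env):
// const missing = missingEnvVars(process.env);
// if (missing.length > 0) {
//   console.error(`Missing values in .env: ${missing.join(', ')}`);
//   process.exit(1);
// }
```

Keeping the check as a pure function over an object makes it easy to try out without touching the real environment.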

To execute these examples, we will use a script at the root of the repository called run-example.sh. The syntax is pretty simple:

Syntax: run-example.sh [API_TYPE] [PROJECT_NAME] <SDK_LANG>

API_TYPE: realtime or telephony

If PROJECT_NAME is missing, a list of available projects will be displayed on screen based on the API_TYPE selected.

Currently, the only accepted value for SDK_LANG is node, and it is an optional parameter.

To list examples for realtime projects, run:

run-example.sh realtime

To run the realtime tracker example, run:

run-example.sh realtime tracker

Go for it if you feel comfortable enough to kick the tires. Otherwise, watch the video below:

Async API

Now let’s pick apart the Getting Started Sample for Topics (https://docs.symbl.ai/docs/topics). Almost all Async APIs behave in precisely the same way, following the same steps:

- Login

- Process a Form of Communication

- Check for Status

- Obtain Intelligence

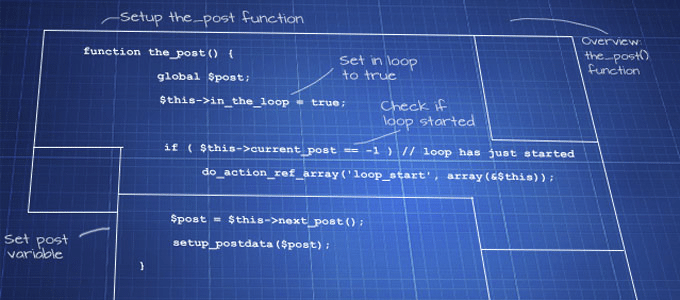

This is evident while using the API Explorer but is worth calling out. The first three steps are always the same no matter what Intelligence you are trying to derive from a conversation. Looking at the Topic code below, the Login and Post functions are generic and, therefore, placed into common libraries. Then the only difference between Topics and Action-Items is your API call.

const common = require('../../common/common.js');

const post = require('../../common/post-file.js');

const intelligence = require('../../common/intelligence.js');

const util = require('util');

async function main() {

/* Login and get token */

var token = await common.Login();

/* Post the audio file to Symbl platform */

var result = await post.Post(token, process.env.FILENAME);

/* Process the Topics for the audio file */

var topics = await intelligence.Topics(token, result.conversationId);

var output = JSON.parse(topics);

console.log(util.inspect(output, false, null, true));

}

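/* Report any failure from the async steps instead of leaving an unhandled rejection */

main().catch(console.error);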
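The Check for Status step from the list above does not appear in main(), so it is presumably handled inside the common libraries. As an illustration only, here is a minimal sketch of what such a polling loop might look like. The getStatus function is a hypothetical caller-supplied callback, and the 'completed' and 'failed' status strings are assumptions rather than confirmed platform values.

```javascript
// Sketch of a "Check for Status" polling loop. `getStatus` is a hypothetical
// caller-supplied async function that resolves to a status string; the
// 'completed' and 'failed' values are assumptions, not confirmed values.
function sleep(ms) {
  return new Promise((resolve) => setTimeout(resolve, ms));
}

async function waitForCompletion(getStatus, { intervalMs = 2000, maxAttempts = 30 } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const status = await getStatus();
    if (status === 'completed') return attempt; // number of checks it took
    if (status === 'failed') throw new Error('Processing failed');
    await sleep(intervalMs); // wait before asking again
  }
  throw new Error(`Still not completed after ${maxAttempts} attempts`);
}
```

Capping the number of attempts keeps a stuck job from blocking the script forever; the interval and cap here are arbitrary starting points.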

Because those operations are entirely discrete, you can explore multiple contexts by modifying any Async example to string several API calls together. This works because the Symbl platform derives the intelligence after it has processed the conversation, so each call reads from the same processed conversation.

/* Process the Topics for the audio file */

var topics = await intelligence.Topics(token, result.conversationId);

var output = JSON.parse(topics);

console.log(util.inspect(output, false, null, true));

/* Process the ActionItems for the audio file */

var actionItems = await intelligence.ActionItems(token, result.conversationId);

output = JSON.parse(actionItems);

console.log(util.inspect(output, false, null, true));
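Since the calls are independent, they could also be issued together rather than one after another. The sketch below assumes each intelligence function takes (token, conversationId) and resolves to a JSON string, as Topics and ActionItems do in the snippet above. The gatherIntelligence helper is hypothetical, and whether the platform limits concurrent requests is not covered here.

```javascript
// Sketch: request several kinds of intelligence for one conversation at once.
// `fetchers` maps a label to an async function (token, conversationId) that
// resolves to a JSON string. `gatherIntelligence` is a hypothetical helper.
async function gatherIntelligence(token, conversationId, fetchers) {
  const labels = Object.keys(fetchers);
  // Start every request before awaiting any of them.
  const raw = await Promise.all(
    labels.map((label) => fetchers[label](token, conversationId))
  );
  const results = {};
  labels.forEach((label, i) => {
    results[label] = JSON.parse(raw[i]);
  });
  return results;
}

// Example usage inside main(), after the Post call:
// const results = await gatherIntelligence(token, result.conversationId, {
//   topics: (t, id) => intelligence.Topics(t, id),
//   actionItems: (t, id) => intelligence.ActionItems(t, id),
// });
// console.log(util.inspect(results, false, null, true));
```

Note that Promise.all rejects as soon as any one request fails, so a single bad call discards the other results; Promise.allSettled is an alternative if partial results are acceptable.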

The REST APIs behind Login, Post File, Status, and Get Intelligence are straightforward. In fact, if you look at the intelligence.js file, the only thing that changes when you obtain the intelligence from the conversation (the last API call in the example) is the API endpoint URI.

For example, Topics is https://api.symbl.ai/v1/conversations/${conversationId}/topics versus https://api.symbl.ai/v1/conversations/${conversationId}/action-items for Action Items. The code for intelligence.js demonstrates that point by just swapping the last part of the URI between topics and action-items.
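To make the URI swap concrete, here is a minimal sketch rather than the actual contents of intelligence.js. The base URL and the two path segments come from the paragraph above. The function names are hypothetical, and the request assumes the token is sent as a Bearer token and that a global fetch is available (Node 18 or later).

```javascript
// Sketch: build the intelligence endpoint by swapping the last path segment.
// The base URL and segment names come from the text; `intelligenceUrl` and
// `getIntelligence` are hypothetical names, not the repository's functions.
const BASE_URL = 'https://api.symbl.ai/v1/conversations';

function intelligenceUrl(conversationId, kind) {
  return `${BASE_URL}/${encodeURIComponent(conversationId)}/${kind}`;
}

// Assumptions: Bearer-token authorization and a global fetch (Node 18+).
async function getIntelligence(token, conversationId, kind) {
  const response = await fetch(intelligenceUrl(conversationId, kind), {
    headers: { Authorization: `Bearer ${token}` },
  });
  if (!response.ok) {
    throw new Error(`Request for ${kind} failed with HTTP ${response.status}`);
  }
  return response.json();
}

// Example usage:
// const topics = await getIntelligence(token, conversationId, 'topics');
// const actionItems = await getIntelligence(token, conversationId, 'action-items');
```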

Streaming API

Now that we have looked at the Async API, let’s quickly look at the Streaming API. The Streaming API is probably even more direct. There are three steps to using real-time intelligence processing:

- Get the proper JSON configuration.

- Log in to the platform.

- Attach the audio stream to WebSocket.

For coding simplicity, logging in and connecting the WebSocket both happen inside the startCapturing function call. If you want specifics, look at streaming.js for the implementation details.

var common = require('../../common/common.js');

var streaming = require('../../common/streaming.js');

/* Get basic configuration */

var config = common.GetConfigScaffolding();

/*

1. Configure to receive action items

2. When Symbl detects action items, the onInsightResponse handler is triggered. This handler can be overridden.

*/

config.insightTypes = ['action_item'];

config.handlers.onInsightResponse = (data) => {

// When an insight is detected

console.log('onActionItemResponse', JSON.stringify(data, null, 2));

};

/* Start real-time action item gathering */

streaming.startCapturing(config);
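If you configure more than one insight type, a single onInsightResponse handler has to tell them apart. The sketch below shows one way to do that. It assumes the handler receives either one insight object or an array of them, and that each insight carries a type field such as 'action_item'. That payload shape is an assumption, so check it against the output logged by the sample before relying on it. The makeInsightRouter helper is hypothetical.

```javascript
// Sketch: route incoming insights to per-type callbacks.
// Assumption: `data` is one insight object or an array of them, and each
// insight has a `type` field such as 'action_item'. Verify against real output.
function makeInsightRouter(callbacksByType) {
  return (data) => {
    const insights = Array.isArray(data) ? data : [data];
    let handled = 0;
    for (const insight of insights) {
      const callback = insight && callbacksByType[insight.type];
      if (callback) {
        callback(insight);
        handled += 1;
      }
    }
    return handled; // how many insights matched a callback
  };
}

// Example usage with the config object from the sample above
// ('question' is assumed here to be another accepted insight type):
// config.insightTypes = ['action_item', 'question'];
// config.handlers.onInsightResponse = makeInsightRouter({
//   action_item: (insight) => console.log('Action item:', JSON.stringify(insight)),
//   question: (insight) => console.log('Question:', JSON.stringify(insight)),
// });
```

Insights whose type has no registered callback are silently skipped, which keeps the handler from throwing when the platform sends something unexpected.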

Looking Forward

I hope you enjoyed this coding walkthrough where we broke down these examples and explained how the APIs work via code. The next post in this series will cover a real-world and practical example of implementing a good-sized project to capture conversation intelligence. We will incorporate audio and/or video via a streaming platform and attempt to extract some conversation intelligence from it.