Despite the immense advantages, until recently the cost and time involved meant that developing a bespoke large language model (LLM) was reserved for companies with the deepest resources. Today, tools and frameworks that streamline building an LLM from scratch place tailored language models within reach of most organizations, yet there’s no escaping the fact that it remains a time- and resource-intensive endeavor.

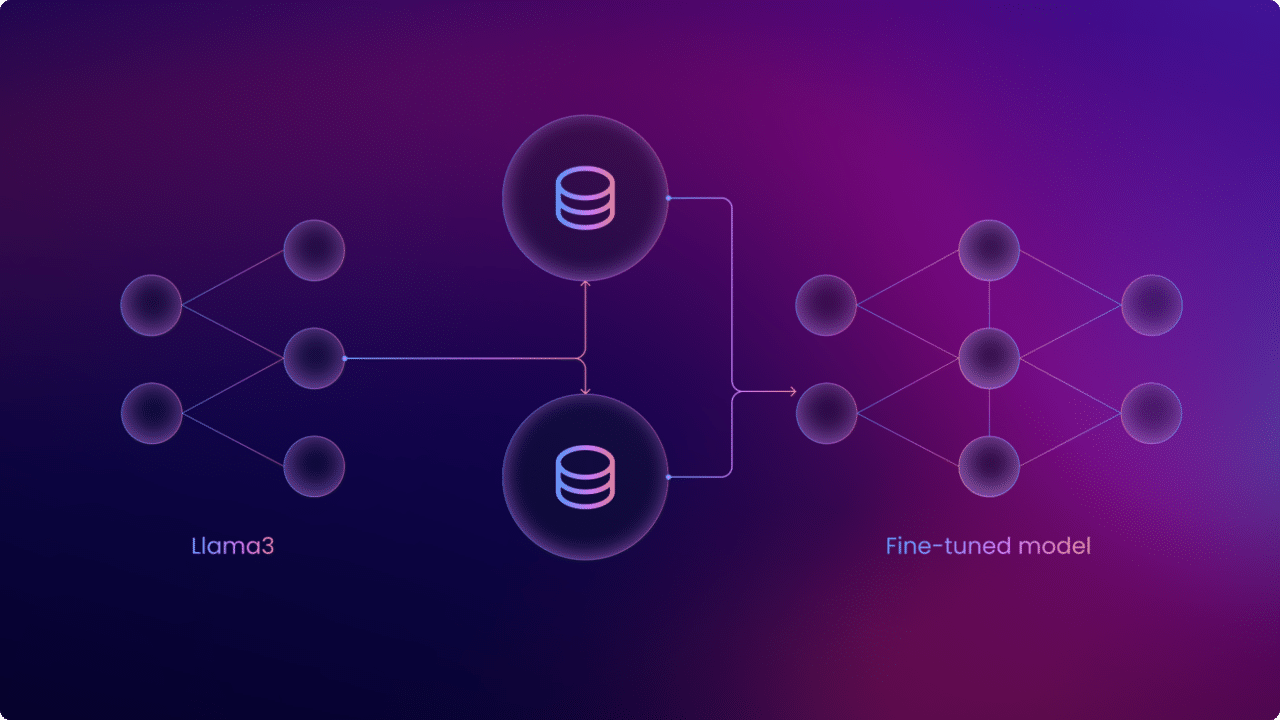

Fortunately, there’s an alternative to creating your own LLM: fine-tuning an existing base, or foundation, model. By fine-tuning a base LLM, you can leverage the considerable work undertaken by a skilled AI development or research team and reap the benefits of a personalized LLM – all while avoiding much of the time and expense of training one from scratch.

With this in mind, this guide takes you through the process of fine-tuning an LLM, step-by-step. We will demonstrate how to download a base model (Llama 3), acquire and prepare a fine-tuning dataset, and configure your training options.

What is Fine-Tuning?

Fine-tuning is the process of taking a pre-trained base LLM and further training it on a specialized dataset for a specific task or knowledge domain. The pre-training stage involves feeding the LLM vast amounts (typically, terabytes) of unstructured data from various internet sources, i.e., “big web data”. In contrast, fine-tuning an LLM requires a smaller, better-curated, and labelled domain or task-specific dataset.

After its initial training, an LLM will have learned values for an enormous number of parameters (billions or even trillions; the larger the model, the greater the number of parameters) that it uses to predict the best output for a given input sequence. However, when the LLM is exposed to previously unseen fine-tuning data, many of its output predictions will be incorrect. The training process then computes the loss, i.e., a measure of the difference between the model’s predictions and the correct output, and adjusts the parameters to reduce it. After the fine-tuning data has passed through the LLM several times, i.e., over several epochs, the result is an updated set of parameters that corresponds to the new task or domain.
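To make the predict, measure loss, adjust cycle concrete, here is a minimal sketch that uses a single weight and plain gradient descent. It is a toy stand-in and not how an LLM is trained in practice; the function name `fine_tune`, the squared-error loss, and the example data are all assumptions chosen for illustration.

```python
# Toy illustration only: one weight, squared-error loss, plain gradient descent.
def fine_tune(weight, examples, learning_rate=0.05, epochs=5):
    """Return the updated weight and the mean loss recorded for each epoch."""
    history = []
    for _ in range(epochs):
        total_loss = 0.0
        for x, target in examples:
            prediction = weight * x
            error = prediction - target
            total_loss += error ** 2
            # Gradient of (weight * x - target)^2 with respect to weight is 2 * error * x
            weight -= learning_rate * 2 * error * x
        history.append(total_loss / len(examples))
    return weight, history


# A "pre-trained" weight of 1.0 is fine-tuned on data that follows y = 3x
examples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
new_weight, losses = fine_tune(1.0, examples)
```

Each pass over `examples` is one epoch. The recorded loss falls from epoch to epoch as the weight moves towards the value that fits the new data.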

Why Do You Need to Fine-Tune a Base LLM?

After a model’s pre-training, it has a detailed, general understanding of language – but lacks specialized knowledge. Fine-tuning an LLM exposes it to new, specialized data that prepares it for a particular use case or use in a specific field. This presents several benefits, which include:

- Task or Domain-Specificity: fine-tuning an LLM on the distinct language patterns, terminology, and contextual nuances of a particular task or domain makes it more applicable to a specified purpose. This increases the potential value that an organization can extract from AI applications powered by the model.

- Customization: similarly, fine-tuning an LLM to adopt and understand your company’s brand voice and terminology enables your AI solutions to offer a more consistent and authentic user experience.

- Reduced costs: fine-tuning allows you to create a bespoke language model without having to train one from the ground up. This represents a huge saving in computation costs, personnel expenses, energy output (i.e., your carbon footprint), and time.

Why Fine-Tune an LLM for Customer Service Use Cases?

Now that we’ve looked at the general advantages of fine-tuning, the question is, why fine-tune an LLM for customer service in particular? Here are some examples of the potential capabilities of an LLM that’s been optimized as a customer service agent.

- Authentic chatbots: an LLM fine-tuned for customer service tasks can be used as a bespoke chatbot tailored to the specific needs of your customers. This includes speaking in your company’s defined brand voice, using the same distinct phrases and questions as your human agents. Similarly, it could understand the specific nature of your customers’ queries, including the associated terminology.

- Sentiment analysis: a customer service LLM can detect the sentiment of a conversation, i.e., how a customer really feels and to what extent. This can be performed in real-time, to aid human agents in communicating more effectively, or afterwards, in a training capacity, to improve their skills going forward.

- Content generation: an LLM can generate a variety of content related to customer interactions, which can help organizations improve their customer service levels. This includes:

- Call summaries: summarizing a conversation, or multiple conversations, so human agents can quickly determine the nature of an interaction – or best prepare themselves for subsequent conversations.

- Key insights: similarly, condensing conversations down into a few salient points for quick analysis.

- Follow-up questions: formulating follow-up questions to guide a conversation towards a successful resolution.

Applying an LLM to customer service tasks in this way offers an organization several benefits, which include:

- Saving Time: by automating common parts of your organizational workflow, a customer service LLM saves time for your customers and staff. Customers won’t have to wait for assistance from a human agent as often, allowing them to successfully resolve their query in less time. Similarly, human agents won’t have to spend time addressing simple queries and can dedicate themselves to problems that warrant their skills.

- Productivity: with simpler tasks handled by AI agents, your staff can undertake more complicated, and value-adding, activities, which increase the overall productivity of your team.

- Customer satisfaction: addressing a customer’s queries quickly and effectively – without potentially waiting for someone to get back to them – boosts customer satisfaction rates. The happier your customers are, the greater their loyalty, strengthening your brand connection. This will boost customer retention rates and lower your marketing spend in the long term – because it costs more to attract new customers than to keep existing ones.

Fine-tuning Llama 3 For Customer Service: A Step-By-Step Implementation

Now it is time to take you through how to fine-tune an LLM for customer service – step by step.

For our example, we’re going to use HuggingFace’s Transformers library as it offers several features that streamline the process of fine-tuning an LLM. Firstly, HuggingFace provides access to a huge variety of pre-trained models (over 650,000) that can be downloaded with a single line of code. It also contains the powerful Trainer class that is optimized for training and fine-tuning transformer-based models. HuggingFace’s Trainer supports a vast range of training configurations for customizing the fine-tuning process – without requiring you to write your own training loop.

We’re going to use the recently released Llama 3 as our base model because, as with all models in the Llama family, it was designed with fine-tuning in mind – and is more adaptable than other open-source language models.

Install Libraries

The first step is configuring your development environment by installing the appropriate Python libraries. To fine-tune our Llama 3 model for customer service, we will need to install:

- Transformers: the main library that provides the functionality for fine-tuning.

- PyTorch: HuggingFace’s libraries integrate with the PyTorch, TensorFlow, and Flax machine learning libraries, so you must also install one of them to use the Transformers library. In our case, we will be using PyTorch.

- Datasets: a HuggingFace library that grants us access to its over 140,000 datasets.

- Evaluate: another HuggingFace library that provides metrics to assess the model’s performance during fine-tuning.

The following code will install the requisite libraries:

# Install the required libraries
pip install torch transformers datasets evaluate

# If pip is not mapped to your Python 3 installation, use pip3 instead
pip3 install torch transformers datasets evaluate

Download Base Model

With your environment configured, the next step is downloading the Llama 3 base model. We will be using the Instruct variation, as opposed to the pure base model, as it has been optimized for dialogue and, consequently, is better suited for our customer service use case.

Downloading Llama 3 with the Transformers library is simple and is accomplished with the following code:

from transformers import AutoModelForCausalLM

# Llama 3 is a gated model: you may need to accept its license on HuggingFace and log in first
model = AutoModelForCausalLM.from_pretrained("meta-llama/Meta-Llama-3-8B-Instruct")

Prepare Fine-Tuning Data

Next, you need to prepare the data that you will use to fine-tune the base LLM, which requires you to do three things:

- Acquire data

- Tokenize the dataset

- Divide the data into training and evaluation subsets

Acquiring Data

For our fine-tuning example, we are going to use a dataset hosted on HuggingFace, telecom-conversation-corpus, which contains over 200,000 customer service interactions. Alternatively, if you had your own fine-tuning dataset, you would only need to replace the name of the dataset with the directory path where it is saved.

from datasets import load_dataset

# If you have your own dataset, replace the name of the HuggingFace dataset with its file path
ft_dataset = load_dataset("talkmap/telecom-conversation-corpus")

Create a Tokenizer

After loading our dataset, we need to tokenize the data it contains, i.e., convert it into sub-word tokens that are easier for the LLM to process. We will use the tokenizer associated with the Llama 3 model, as this ensures the text is split in the same way as during pre-training – and uses the same corresponding tokens-to-index, i.e., vocabulary.
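As a rough illustration of what converting text into sub-word tokens and vocabulary indices means, the sketch below splits a word by greedy longest-prefix matching against a tiny vocabulary. This is not the algorithm or vocabulary used by the Llama 3 tokenizer; `VOCAB` and `tokenize` are hypothetical names invented for this example.

```python
# Hypothetical toy vocabulary; a real tokenizer learns a far larger one from data.
VOCAB = {"<unk>": 0, "re": 1, "fund": 2, "ing": 3, "bill": 4, "s": 5}


def tokenize(word, vocab=VOCAB):
    """Split a word into sub-word token ids by greedy longest-prefix matching."""
    ids = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                ids.append(vocab[piece])
                start = end
                break
        else:
            # No known piece starts here: emit <unk> and skip one character
            ids.append(vocab["<unk>"])
            start += 1
    return ids


refunding_ids = tokenize("refunding")  # pieces: "re", "fund", "ing"
```

The same text must always map to the same ids that the model saw during pre-training, which is why the tokenizer that belongs to the base model is used rather than a new one.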

We are then going to create a simple tokenizer function that takes the text from the dataset, tokenizes it, and applies padding and truncation where necessary. Padding or truncation is often required because input sequences aren’t always the same length; this is problematic because tensors, high-dimensional arrays that text sequences are converted to in order to be processed by the LLM, need to be the same shape. Padding ensures uniformity by adding a padding token to shorter sequences while, conversely, truncating a longer sequence will make it shorter so it conforms to the tensor’s shape.
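The effect of padding and truncation can be sketched without any libraries. The helper below, `pad_or_truncate`, is a hypothetical function written for illustration, and `PAD_ID = 0` is an assumed padding id. It also returns an attention mask, which is how Transformers tokenizers mark which positions hold real tokens and which hold padding.

```python
PAD_ID = 0  # hypothetical padding token id, chosen for illustration


def pad_or_truncate(batch, max_length, pad_id=PAD_ID):
    """Return sequences of exactly max_length plus matching attention masks."""
    input_ids, attention_mask = [], []
    for sequence in batch:
        kept = sequence[:max_length]  # truncation: drop tokens beyond max_length
        padding = [pad_id] * (max_length - len(kept))  # padding: fill the remainder
        input_ids.append(kept + padding)
        attention_mask.append([1] * len(kept) + [0] * len(padding))
    return input_ids, attention_mask


ids, mask = pad_or_truncate([[5, 6], [7, 8, 9, 10, 11]], max_length=4)
```

Both output rows have length 4, so they can be stacked into a single rectangular tensor.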

Finally, to apply tokenize_function to the entire dataset, we will use the map method from the Datasets library. Additionally, by passing batched=True as an argument, we enable the dataset to be tokenized in batches.

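from transformers import AutoTokenizer

# Load tokenizer
tokenizer = AutoTokenizer.from_pretrained("meta-llama/Meta-Llama-3-8B-Instruct")

# The Llama 3 tokenizer ships without a padding token, so reuse the end-of-sequence token
tokenizer.pad_token = tokenizer.eos_token

# Define tokenizer function
def tokenize_function(examples):
    # max_length=512 is an illustrative choice; adjust it to your data and memory budget
    return tokenizer(examples["text"], padding="max_length", truncation=True, max_length=512)

tokenized_dataset = ft_dataset.map(tokenize_function, batched=True)

Divide the Dataset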

The last step in preparing our fine-tuning data is dividing it into training and testing subsets. This ensures that the model is evaluated on data points it did not see during fine-tuning, which makes it possible to detect overfitting, i.e., where the model can’t generalize to unseen data.

While many HuggingFace datasets are already divided into training and testing subsets, ours is not. Fortunately, we can use the train_test_split() method to divide the dataset for us. By specifying a test_size of 0.1, we hold out 10% of the dataset as the testing subset and keep the remaining 90% for training.
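As a quick check of the arithmetic, here is a library-free sketch of a hold-out split. `holdout_split` is a hypothetical stand-in and not the Datasets method, and 200,000 is a round figure standing in for the size of the corpus.

```python
import random


def holdout_split(items, test_size=0.1, seed=0):
    """Shuffle items and hold out a test_size fraction of the whole set."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_size)
    # The first n_test shuffled items form the test set, the rest the training set
    return shuffled[n_test:], shuffled[:n_test]


train, test = holdout_split(range(200_000), test_size=0.1)
```

With a test_size of 0.1 the test subset holds 20,000 items and the training subset 180,000, so the fraction is measured against the whole dataset and no item appears in both subsets.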

# load_dataset returns a DatasetDict, so split the "train" split it contains
tokenized_datasets = tokenized_dataset["train"].train_test_split(test_size=0.1, shuffle=True)

Lastly, because our chosen dataset has over 200,000 interactions, we are going to reduce the size of our own datasets for the sake of expediency, by selecting the first 1,000 examples of each subset.

training_dataset = tokenized_datasets["train"].select(range(1000))
testing_dataset = tokenized_datasets["test"].select(range(1000))

Set Hyperparameters

We are now going to set the hyperparameter configurations for fine-tuning our model by creating a TrainingArguments object that contains our training options.

It is important to note that the TrainingArguments class accepts a lot of parameters – more than 100, with the exact number depending on the library version. The only required parameter is output_dir, which specifies where to save your model and its checkpoints, i.e., snapshots of the model’s state after specified intervals. Aside from setting the output_dir, you can choose not to specify any other parameters – and use the default set of hyperparameters.

To provide an example of some other training options, however, we will pass the following parameters to our TrainingArguments object.

- Learning rate: controls how large a step the model takes when updating its parameters in response to the loss, i.e., how far its predictions were from the correct output. A higher learning rate expedites fine-tuning but can cause instability and overfitting, while a lower learning rate increases stability and reduces overfitting but increases training time.

- Weight decay: a regularization term that shrinks the model’s weights slightly at each update, which discourages overly large weights and helps reduce overfitting.

- Batch size: determines how much data the model processes at each interval: larger batch sizes accelerate training while small batches require less memory and computation power.

- Number of training epochs: how many times the entire dataset is passed through the model.

- Evaluation strategy: how often the model is evaluated during fine-tuning, with the options being:

- no: no evaluation is performed.

- steps: evaluation is performed after a set number of training steps (as determined by another hyperparameter, eval_steps).

- epoch: evaluation is performed at the end of each epoch.

- Save strategy: how often the model is saved during fine-tuning, with the options being:

- no: the model isn’t saved.

- steps: the model is saved after a set number of training steps (as determined by another hyperparameter, save_steps).

- epoch: the model is saved at the end of each epoch.

- Load best model at end: whether the best-performing checkpoint found during fine-tuning is loaded once training completes.
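To show how the learning rate and weight decay act on a single parameter, here is a simplified update step. It is plain gradient descent with decoupled weight decay, a deliberate simplification of the AdamW optimizer that Trainer uses by default; `sgd_step` is a hypothetical helper written for this illustration.

```python
def sgd_step(weight, gradient, learning_rate, weight_decay=0.0):
    """One gradient-descent update with decoupled weight decay."""
    # Weight decay shrinks the weight towards zero, independently of the gradient
    decayed = weight * (1.0 - learning_rate * weight_decay)
    # The learning rate scales how far the gradient moves the weight
    return decayed - learning_rate * gradient


without_decay = sgd_step(1.0, gradient=0.5, learning_rate=0.1)
with_decay = sgd_step(1.0, gradient=0.5, learning_rate=0.1, weight_decay=0.01)
# Values from this guide: even with a zero gradient the weight shrinks slightly
shrunk = sgd_step(1.0, gradient=0.0, learning_rate=2e-5, weight_decay=0.01)
```

Doubling the learning rate doubles the size of each step, while weight decay only adds a small pull towards zero on every update.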

With our chosen hyperparameters, our TrainingArguments will be configured as shown below:

from transformers import TrainingArguments

# Define hyperparameter configuration
training_args = TrainingArguments(
    output_dir="llama_3_ft",
    learning_rate=2e-5,
    per_device_train_batch_size=16,
    per_device_eval_batch_size=16,
    num_train_epochs=2,
    weight_decay=0.01,
    # Recent Transformers releases rename this argument to eval_strategy
    evaluation_strategy="epoch",
    save_strategy="epoch",
    load_best_model_at_end=True,
)

Establish Evaluation Metrics

HuggingFace’s Evaluate library offers a selection of tools that allow you to assess your model’s performance during and after fine-tuning. There are three types of evaluation tools:

- Metric: used to evaluate a model’s performance. Examples include accuracy, precision, and perplexity.

- Comparison: used to compare two models. Examples include exact match and the McNemar test.

- Measurement: allows you to investigate the properties of a dataset. Examples include text duplicates and toxicity.

We are going to choose accuracy as our performance metric, which will tell us how often the model predicts the correct outputs from the fine-tuning dataset. We will write our evaluation strategy as a simple function, compute_metrics, that we can pass to our trainer object.

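import numpy as np
import evaluate

metric = evaluate.load("accuracy")

def compute_metrics(eval_pred):
    logits, labels = eval_pred
    # The logits at position i predict the token at position i + 1, so shift before comparing
    predictions = np.argmax(logits, axis=-1)[:, :-1]
    labels = labels[:, 1:]
    # Ignore positions labelled -100 (e.g., padding), then compare the remaining tokens
    mask = labels != -100
    return metric.compute(predictions=predictions[mask], references=labels[mask])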
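As a rough illustration of what token-level accuracy measures, the following sketch computes it on a toy example without any libraries. `token_accuracy` is a hypothetical helper, and the label value -100 is the default index that PyTorch’s cross-entropy loss ignores, assumed here to mark padded positions.

```python
IGNORE_INDEX = -100  # label value that PyTorch's cross-entropy loss ignores by default


def token_accuracy(predictions, labels, ignore_index=IGNORE_INDEX):
    """Fraction of non-ignored positions where the prediction equals the label."""
    correct = 0
    counted = 0
    for pred_row, label_row in zip(predictions, labels):
        for pred, label in zip(pred_row, label_row):
            if label == ignore_index:
                continue  # skip padded or otherwise masked positions
            counted += 1
            correct += int(pred == label)
    return correct / counted if counted else 0.0


predictions = [[4, 9, 2, 0], [7, 7, 1, 0]]
labels = [[4, 9, 3, -100], [7, 5, 1, -100]]
score = token_accuracy(predictions, labels)
```

Here four of the six counted positions match, so the score is two thirds. The masked final position in each row does not affect the result.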

Fine-Tune the Base Model

With the elements of our trainer object configured, all that’s left is putting it all together and calling the train() function to fine-tune our Llama 3 base model.

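from transformers import DataCollatorForLanguageModeling, Trainer

# Copies input_ids into labels so the causal language model can compute a loss
data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=training_dataset,
    eval_dataset=testing_dataset,
    data_collator=data_collator,
    compute_metrics=compute_metrics,
)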

trainer.train()
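For a rough sense of how long this run is, the number of optimizer steps follows from the values used in this guide (1,000 training examples, a batch size of 16, and 2 epochs). The sketch below assumes a single device and no gradient accumulation; `total_training_steps` is a hypothetical helper, not part of the Transformers API.

```python
import math


def total_training_steps(num_examples, batch_size, epochs, num_devices=1):
    """Optimizer steps for a run, assuming no gradient accumulation."""
    # The last batch of an epoch may be smaller, hence the ceiling
    steps_per_epoch = math.ceil(num_examples / (batch_size * num_devices))
    return steps_per_epoch * epochs


steps = total_training_steps(num_examples=1000, batch_size=16, epochs=2)
```

Under those assumptions the run takes 63 steps per epoch and 126 steps in total.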

Common pitfalls when fine-tuning an LLM

Despite its immense benefits and the availability of tools like the HuggingFace libraries that streamline the process, LLM fine-tuning can still present several challenges. Here are some of the pitfalls you are likely to encounter when fine-tuning a language model.

- Catastrophic Forgetting: due to its parameters being altered by the fine-tuning data, the LLM may “forget” its prior knowledge and capabilities acquired during pre-training.

- Overfitting: where an LLM consistently makes accurate predictions on its training dataset but fails to perform as well on testing data. This often occurs because the model memorizes the specifics of its training data rather than learning patterns that generalize to new data points. Contributing factors include the dataset being too small, being of poor quality (inaccuracies, biases, etc.), or the model being trained for too long.

- Underfitting: where an LLM displays poor predictive abilities during both training and testing. This could be due to a model being too simple (too few layers), a lack of data, poor quality data, or a lack of training time.

- Difficulty Sourcing Data: the success of fine-tuning a model depends on the amount and quality of data at your disposal. Depending on the proposed use case and the specificity of the knowledge domain, it can be difficult to source sufficient amounts of fine-tuning data.

- Time-Intensive: with the time it takes to gather the requisite datasets, as well as to implement the fine-tuning process, evaluate the model, etc., fine-tuning an LLM can require substantial amounts of time.

- Increasing Costs: although far less expensive than training an LLM from scratch, when considering the costs involved in sourcing data in addition to computational and staff costs, fine-tuning can still be a costly process.
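One common way to guard against overfitting is early stopping: watch the evaluation loss after each epoch and keep the checkpoint from just before it starts to rise. The sketch below is a minimal, library-free version of that idea with a hypothetical `best_epoch` helper and made-up loss values. In practice, Transformers provides an EarlyStoppingCallback that can be used together with load_best_model_at_end.

```python
def best_epoch(eval_losses, patience=1):
    """Return the index of the epoch to keep, stopping once eval loss stops improving."""
    best_index = 0
    epochs_without_improvement = 0
    for index, loss in enumerate(eval_losses):
        if loss < eval_losses[best_index]:
            best_index = index
            epochs_without_improvement = 0
        elif index > 0:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # evaluation loss has stopped improving
    return best_index


# Made-up evaluation losses: improvement stalls after the third epoch (index 2)
keep = best_epoch([0.9, 0.7, 0.6, 0.65, 0.8])
```

A training loss that keeps falling while the evaluation loss rises, as in the last two values, is the typical signature of overfitting.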

How Nebula LLM Has Been Fine-Tuned

Nebula LLM is Symbl.ai’s proprietary large language model specialized for human interactions. It has been fine-tuned on well-curated datasets containing over 100,000 business interactions across sales, customer success, and customer service, and on 50 conversational tasks such as chain-of-thought reasoning, Q&A, conversation scoring, intent detection, and others. This makes Nebula well suited to customer service use cases such as:

- Real-time Agent Assistance: Extract key insights and trends to help human agents on live calls and enhance customer support. For instance: generating conversational summaries, generating responses to address objections, handling moments of customer frustration by suggesting script changes, and more.

- Call scoring: With Nebula LLM you can score conversations based on performance criteria such as communication & engagement, question handling, forward motion, and others. This can be used to assess a human agent’s performance and enable targeted coaching.

- Automated customer support: Nebula LLM can be used to power chatbots to perform common customer support tasks, such as Q&A.

Conclusion

In summary:

- Fine-tuning is the process of taking a pre-trained base LLM and further training it on a specialized dataset for a specific task or knowledge domain.

- Fine-tuning an LLM exposes it to new, specialized data that prepares it for a particular use case or use in a specific field. The benefits of fine-tuning include:

- Task or domain-specificity

- Customization

- Reduced costs

- Use cases for LLMs fine-tuned for customer service include:

- Authentic chatbots

- Sentiment analysis

- Content generation

- Applying an LLM to customer service tasks can offer an organization several benefits, such as:

- Saving time

- Productivity

- Customer satisfaction

- The steps for fine-tuning a base LLM include:

- Installing libraries

- Downloading a base model

- Preparing fine-tuning data

- Acquiring data

- Tokenizing the dataset

- Dividing the data into training and evaluation subsets

- Setting hyperparameters

- Establishing evaluation metrics

- Fine-tuning the base model

- Common pitfalls when fine-tuning an LLM include:

- Catastrophic forgetting

- Overfitting

- Underfitting

- Difficulty sourcing data

- Time requirements

- Increasing costs

Fine-tuning is an intricate process but can transform the potential of AI applications when applied correctly. We encourage you to develop your understanding and skills with further experimentation. This could include setting different hyperparameters, using different datasets, and attempting to fine-tune a variety of base models. You can learn more by referring to the resources we have provided below.

Alternatively, if you’d prefer to sidestep the process of fine-tuning an LLM altogether, Nebula LLM is specialized to support your organization’s customer service use cases. To learn more about the model, sign up for access to Nebula Playground.

Additional Resources

- HuggingFace Transformers Library

- HuggingFace Datasets Library

- HuggingFace Evaluate Library

- Nebula Developer Documentation