The launch of ChatGPT has sparked significant interest in generative AI, and people are becoming more familiar with the ins and outs of large language models.

Many individuals, including myself, have become fascinated with understanding the inner workings of this powerful tool. It’s worth noting that prompt engineering plays a critical role in getting useful results from such models. By carefully crafting effective “prompts,” data scientists can steer the model toward output that accurately reflects the underlying task.

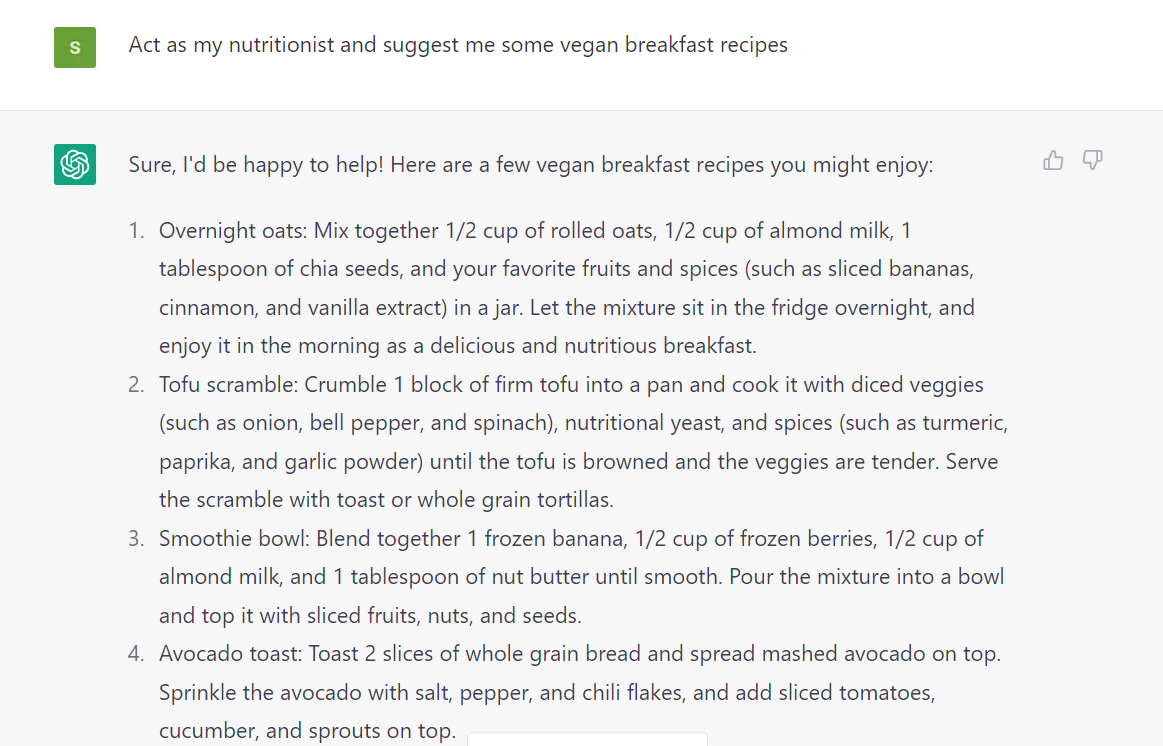

A prompt is a set of instructions given to the model to get a particular output. Some examples of prompts include:

1. Act as a Data Scientist and explain Prompt Engineering.

2. Act as my nutritionist and give me vegan breakfast recipe suggestions.

3. Imagine you are a songwriter and create a song based on the lives of data scientists.
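The examples above share a pattern: a persona followed by a task. As a minimal sketch of that pattern (the helper name `build_role_prompt` is hypothetical and not part of any library), the two pieces can be kept separate and combined when needed:

```python
def build_role_prompt(role: str, task: str) -> str:
    """Combine a persona and a task into one instruction string.

    Hypothetical helper for illustration only; it just formats text
    and does not call any model.
    """
    return f"Act as {role} and {task}."


# Rebuild the first two example prompts from the list above.
prompts = [
    build_role_prompt("a Data Scientist", "explain Prompt Engineering"),
    build_role_prompt("my nutritionist", "give me vegan breakfast recipe suggestions"),
]

for prompt in prompts:
    print(prompt)
```

Keeping the role and the task as separate arguments makes it easy to try the same task with a different persona and compare the responses.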

Prompt Engineering is a Natural Language Processing (NLP) technique for creating and refining prompts to get accurate responses from the model. It is imperative to spend time refining the prompts for your required use. Vague or underspecified prompts make model hallucinations more likely.

4 benefits of prompt engineering

- Improved accuracy: Prompt engineering can improve the accuracy of AI models by providing them with more specific and relevant information. This can lead to more effective outcomes and better decision-making.

- Increased efficiency: Prompt engineering can increase AI models’ efficiency by reducing the time and effort needed to adapt them to a task, often without any retraining.

- Customizability: Prompt engineering can improve the flexibility of AI models by allowing the same model to be used for a broader range of tasks.

- Increased user satisfaction: Prompt engineering can increase user satisfaction with AI models by making them more user-friendly and intuitive.

Industry-specific use cases of prompt engineering

Healthcare: Prompt engineering is being used to improve the accuracy of medical diagnosis, develop new treatments, and personalize healthcare. For example, well-designed prompts can direct a model to summarize a patient’s medical history and symptoms, identify potential drug interactions, and draft personalized treatment plans.

Finance: In finance, prompt engineering is used to create intelligent assistants that can provide personalized investment advice or help customers with financial planning. These assistants can provide more effective and relevant guidance by customizing prompts based on a customer’s financial goals and risk tolerance.
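Customizing a prompt from a customer's goals and risk tolerance could look roughly like the sketch below. The `CustomerProfile` class, its fields, and the `build_advice_prompt` function are all assumptions made for illustration; a real assistant would need many more inputs and safeguards.

```python
from dataclasses import dataclass


@dataclass
class CustomerProfile:
    """Hypothetical container for the two inputs mentioned in the text."""
    goal: str
    risk_tolerance: str


def build_advice_prompt(profile: CustomerProfile) -> str:
    """Fill a fixed prompt template with customer-specific details."""
    return (
        "Act as a financial planning assistant. "
        f"The customer's goal is {profile.goal}. "
        f"Their risk tolerance is {profile.risk_tolerance}. "
        "Suggest three general options and explain the trade-offs of each."
    )


profile = CustomerProfile(goal="saving for retirement", risk_tolerance="low")
print(build_advice_prompt(profile))
```

The template stays fixed while the profile changes, so every customer gets a prompt with the same structure but different details.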

Education: In education, prompt engineering is used to personalize learning, provide feedback on assignments, and create engaging learning experiences. For example, well-designed prompts can direct a model to generate a personalized learning plan for each student, provide feedback on essays and code, and create interactive stories and games.

Tips and tricks to generate effective prompts

1. Understand the use case in detail so that you can add relevant “keywords” when writing prompts.

Instead of the prompt “Write a blog on the life of a data scientist”, you can phrase it as, “Act like a data scientist and write a blog on the life of a data scientist covering various aspects of their life such as the career journey, what to expect in the job, and challenges.”

2. Test and refine the prompts based on the output.

If you do not get the desired output in the first iteration, add more keywords or details to the prompt and try again.

3. Reduce information that doesn’t serve any purpose in the prompt.

For example, instead of saying “Write a short, precise, and exciting description of XYZ product,” use “Use 3-5 sentences to write the product description.”
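The test-and-refine cycle from tip 2 can be sketched as a small loop. Everything here is an assumption for illustration: `refine_prompt` is a hypothetical helper, `generate` stands in for whatever model call you use, and `is_good` is whatever check you apply to the output. The `fake_generate` function only echoes the prompt so the sketch runs without a model.

```python
from typing import Callable, List, Tuple


def refine_prompt(
    base_prompt: str,
    details: List[str],
    generate: Callable[[str], str],
    is_good: Callable[[str], bool],
) -> Tuple[str, str]:
    """Append one detail at a time until the output passes the check.

    Returns the final prompt and the output it produced. If no version
    passes, the last attempt is returned.
    """
    prompt = base_prompt
    output = generate(prompt)
    for detail in details:
        if is_good(output):
            break
        prompt = f"{prompt} {detail}"
        output = generate(prompt)
    return prompt, output


def fake_generate(prompt: str) -> str:
    """Stand-in for a model call: echoes the prompt back."""
    return f"[draft for: {prompt}]"


final_prompt, final_output = refine_prompt(
    base_prompt="Write a blog on the life of a data scientist.",
    details=["Cover the career journey.", "Cover the challenges of the job."],
    generate=fake_generate,
    is_good=lambda text: "challenges" in text.lower(),
)
print(final_prompt)
```

In practice the check is usually a person reading the output, but writing it down as a function makes it clearer what “desired output” means for a given use case.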

Limitations and challenges

Bias in prompt generation: Prompts that are not carefully designed can introduce bias into the model’s output. This can lead to results that are biased against certain groups of people, or that are inaccurate or misleading.

Difficulty in generating effective prompts: Generating effective prompts can be challenging, requiring domain expertise and a deep understanding of the underlying task. Poorly designed prompts can lead to inaccurate or irrelevant model output.

Limited flexibility: Prompt engineering can limit flexibility, because a prompt tuned to produce good output for one task is tied to that task. This can make adapting the same prompts to new tasks or scenarios challenging.

Time-consuming: Prompt engineering can be time-consuming, requiring significant manual effort to design and test prompts. This can make scaling prompt engineering to larger datasets or more complex tasks challenging.

Lack of generalization: Prompts that work well on the examples they were tested on may not generalize to new and unseen inputs. This can limit the usefulness of the approach in real-world applications.

Data privacy concerns: Prompts often include context drawn from real data, which may contain sensitive information about individuals. This raises concerns about data privacy and the potential misuse of personal information.

Ethical considerations: Prompt engineering raises ethical concerns around the responsible use of language models, particularly in healthcare, finance, and education. There is a need for guidelines and regulations to ensure that language models are used responsibly and ethically.

By carefully crafting effective prompts, data scientists can steer the model toward output that accurately reflects the underlying task. This, in turn, can lead to more accurate and relevant model outputs, resulting in improved performance on various tasks. As such, prompt engineering has emerged as a crucial area of research in natural language processing and machine learning.