A conversation intelligence system needs state-of-the-art deep learning to hold natural conversations and to come as close as possible to the sophistication of the human brain. But deep learning alone is not enough. The system also needs deep understanding so that it can model, generalize, and then run analytics using all of its knowledge, just as a human would.

Deep learning for natural conversations

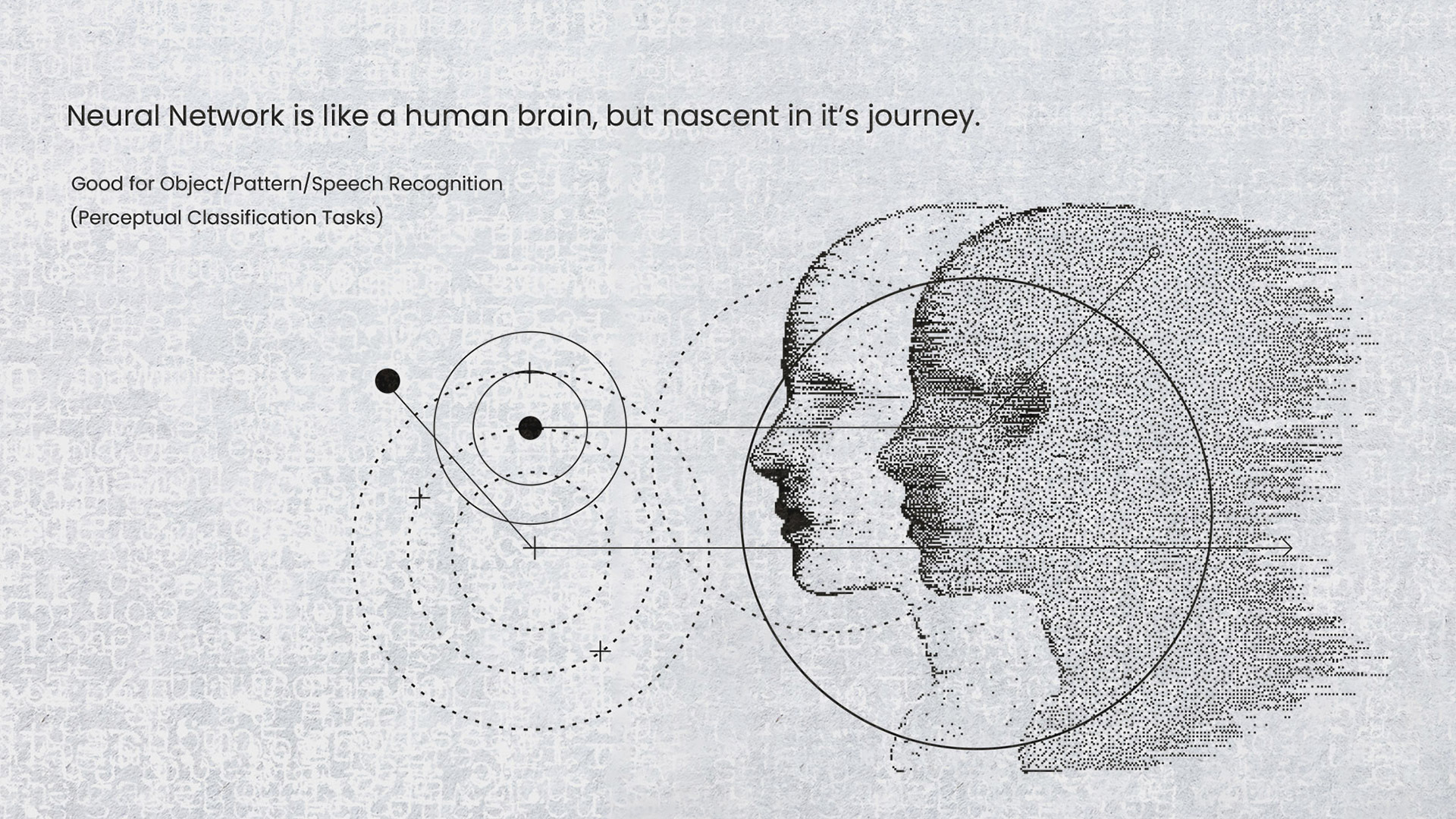

The human brain is one of the most efficient computing machines we know of — it’s an extremely sophisticated neural network capable of abstract thinking.

Artificial neural networks are among the most advanced machine learning algorithms in use today. They loosely model the structure of the human brain in software: artificial neurons are connected by weighted links (the equivalent of synapses) and organized into layers.

Artificial neural networks and their present uses.
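To make the "neurons, synapses, and layers" picture concrete, here is a minimal sketch of a forward pass through a tiny two-layer network in plain Python. The weights are arbitrary, hypothetical values chosen for illustration; a real deep learning system learns millions of such weights from data during training (typically with backpropagation), which this sketch omits.

```python
import math
from typing import List, Tuple

# One layer = (weights per neuron, bias per neuron).
Layer = Tuple[List[List[float]], List[float]]


def sigmoid(x: float) -> float:
    """Squash a neuron's weighted input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs: List[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """Each neuron sums its weighted inputs (the 'synapses'), adds a bias,
    and applies the sigmoid activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs: List[float], layers: List[Layer]) -> List[float]:
    """Pass the input through each layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical hand-picked (untrained) weights: 2 inputs -> 3 hidden neurons -> 1 output.
network: List[Layer] = [
    ([[0.5, -0.2], [0.8, 0.1], [-0.3, 0.9]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.2]),
]

output = forward([1.0, 0.5], network)
print(output)  # a single activation between 0 and 1
```

Stacking more of these layers is what makes a network "deep"; training adjusts the weights so that the outputs match the patterns in the data.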

How deep learning differs from traditional machine learning

Traditional machine learning is a set of statistical tools that help you model patterns in data. It learns based on the features and rules that people design, and sometimes a human needs to intervene to correct its errors.

Deep learning uses large amounts of data, takes longer to train, and is computationally heavy. In return, it can model far more sophisticated patterns than traditional statistical machine learning methods, because the larger scale of data gives it a greater capacity to learn. For example, finding stock market trends is a comparatively simple task for a computer, whereas recognizing speech or faces takes more than a mathematical formula. This makes deep learning a good approach for complex modelling tasks that require recognizing sophisticated patterns.

But deep learning in conversation intelligence doesn’t match the sophistication of the human brain, because deep learning is just that: learning. It doesn’t reach the next level, which is understanding. At this stage, the system learns and exploits statistical patterns in data (and, with enough training, it is very good at this) rather than understanding their meaning in the flexible, generalized way humans do.

Let’s consider an example …

When you say, “I can recognize faces,” you don’t first need to learn what a face is, because you already know. Knowing what a face is requires a more conceptual understanding than simply recognizing one. Taking it a level further, you don’t even need to consider why people have faces. That knowledge is more abstract: it requires pattern recognition plus a model of the world built by your brain, which in turn leads to deep understanding.

Machines can’t do this intuitively. They need additional mechanisms and higher-level techniques built on top of deep learning. Even these won’t produce a human level of understanding; rather, they give the system the ability to generalize knowledge and make broader decisions without relying purely on patterns. This is what operating in an open domain means: the model can conceptualize data from one domain and use it intelligently in another.

Taking conversation intelligence to the next level with deep understanding

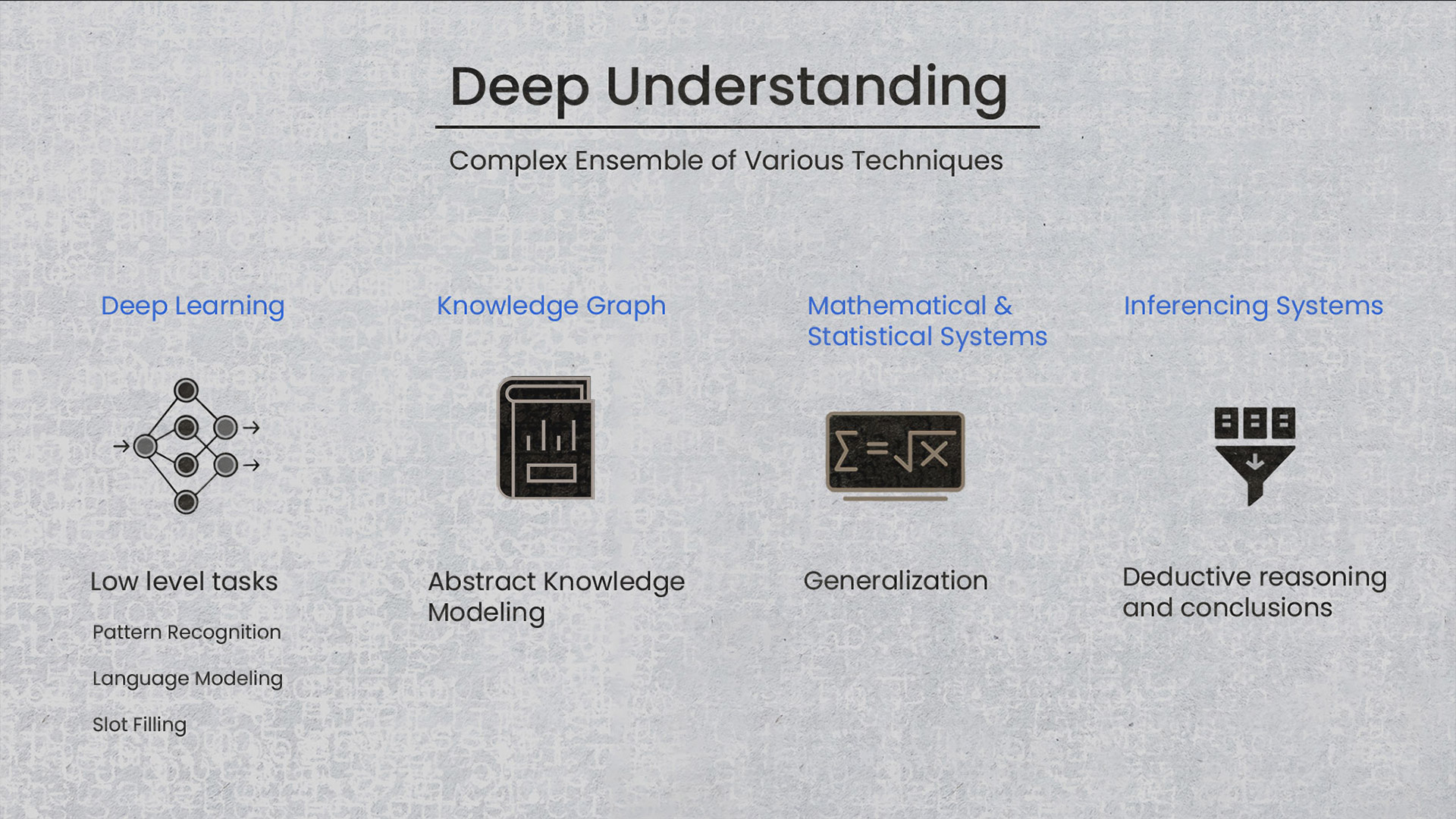

Techniques used in deep understanding intelligence.

Conversation intelligence systems solve problems much as the human brain does, and like the brain, they learn by recognizing patterns. With deep learning alone, however, you can’t ask the AI to truly understand what it has recognized. For example, it will learn that some apples are red but not all of them are. It has no real understanding of what it means to be a red or a green apple, or even an apple; it only recognizes the words.

Imagine a deep learning system that can detect human faces but cannot deal with the wider concepts around them. This is where it falls short: it has no capacity to reason about the patterns it detects. For that, it needs a thinking mechanism that has knowledge of the world and is capable of remembering things.

- Deep learning is sophisticated pattern recognition.

- Abstract knowledge modelling provides the necessary knowledge required to have a “thinking” element.

- Modelling the knowledge is not enough; you also need a statistical system to generalize it. For example, a system might understand how a car and a truck work without grasping the nuances of how they differ. The ability to generalize means the system can take shared knowledge, such as the fact that both contain an internal combustion engine, and apply it to something else, like a motorbike.

- An inference system should be able to take all of this information and draw conclusions from it.
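The knowledge-modelling and generalization steps in the list above can be sketched with a toy "is a" hierarchy. This is a minimal illustration under stated assumptions, not how any particular product works: the concept names, the `generalize` and `infer_properties` helpers, and the `min_support` threshold are all hypothetical. Properties shared by enough observed examples (car, truck) are lifted to their common parent concept, and a new concept (motorbike) then inherits them.

```python
from collections import Counter
from typing import Dict, List, Optional, Set

# Hypothetical toy knowledge model: "is a" links between concepts ...
IS_A: Dict[str, str] = {
    "car": "motor_vehicle",
    "truck": "motor_vehicle",
    "motorbike": "motor_vehicle",
    "motor_vehicle": "vehicle",
}

# ... and the properties observed directly for each concept.
properties: Dict[str, Set[str]] = {
    "car": {"has_internal_combustion_engine", "has_four_wheels", "carries_passengers"},
    "truck": {"has_internal_combustion_engine", "has_six_or_more_wheels", "carries_cargo"},
    "motorbike": {"has_two_wheels"},
}


def generalize(examples: List[str], parent: str, min_support: float = 1.0) -> Set[str]:
    """Lift to `parent` every property seen in at least `min_support`
    (a fraction) of the example concepts, and return the lifted set."""
    counts = Counter(p for concept in examples for p in properties[concept])
    shared = {p for p, n in counts.items() if n / len(examples) >= min_support}
    properties.setdefault(parent, set()).update(shared)
    return shared


def infer_properties(concept: Optional[str]) -> Set[str]:
    """A concept's own properties plus everything inherited up the is-a chain."""
    result: Set[str] = set()
    while concept is not None:
        result |= properties.get(concept, set())
        concept = IS_A.get(concept)
    return result


shared = generalize(["car", "truck"], "motor_vehicle")
print(sorted(shared))                         # ['has_internal_combustion_engine']
print(sorted(infer_properties("motorbike")))  # ['has_internal_combustion_engine', 'has_two_wheels']
```

Lowering `min_support` (for example to 0.5) makes the system generalize more aggressively, which here would wrongly give motorbikes four wheels; choosing that threshold from evidence is where the statistical side of generalization comes in.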

So, how do you build this in a more sophisticated way so your system has deep understanding, not just learning?

Well, it takes a combination of knowledge modelling and deep learning. With deep learning alone, the system is trained, or just programmed; add deep understanding and the system is educated. It needs to understand what an apple is, that apples come in several colors, how they evolved, and so on.

This is the system’s baseline knowledge, which you then build upon using conversation data. From there, you can model, generalize, and run analytics across all of your knowledge, just as a human would. After this, you broaden your inferences based on what you learn from your knowledge base.

By following this process, the conversation intelligence system learns how to find the logical steps needed and link them together to reach a conclusion. As you can see, it’s a complex system with many moving pieces working together.
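Linking logical steps together to reach a conclusion resembles classic forward chaining: apply if-then rules to the known facts until the goal is derived or nothing new can be inferred. The sketch below is one simple way to do this, using hypothetical apple-themed rules and a hypothetical `forward_chain` helper; real reasoning systems are far richer.

```python
from typing import List, Optional, Set, Tuple

# A rule says: if every premise is known, the conclusion can be added.
Rule = Tuple[Set[str], str]


def forward_chain(facts: Set[str], rules: List[Rule], goal: str) -> Optional[List[str]]:
    """Repeatedly fire rules whose premises are all known until the goal is
    derived or no rule adds anything new. Returns the fired steps in order,
    or None if the goal cannot be reached."""
    known = set(facts)
    steps: List[str] = []
    changed = True
    while changed and goal not in known:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                steps.append(f"{' and '.join(sorted(premises))} => {conclusion}")
                changed = True
    return steps if goal in known else None


# Hypothetical toy rules; the last one is irrelevant to this goal and never fires.
rules: List[Rule] = [
    ({"is_apple"}, "is_fruit"),
    ({"is_fruit"}, "develops_from_a_flower"),
    ({"develops_from_a_flower"}, "comes_from_a_plant"),
    ({"is_truck"}, "has_engine"),
]

chain = forward_chain({"is_apple"}, rules, "comes_from_a_plant")
for step in chain:
    print(step)
```

Run as written, this prints the three linked steps from `is_apple` to `comes_from_a_plant`. Starting from `{"is_truck"}` instead, the same goal is unreachable and the function returns `None`.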

From deep learning to deep understanding: how it all works together

Deep learning is just one element of a complex ensemble of techniques ranging from statistical methods to deductive reasoning. Deep learning and similar techniques can be applied to huge amounts of data to solve low-level tasks that are simple enough to be modeled as pattern recognition problems.

The brain is highly complex, involving multiple components with different architectures that interact synergistically. Current artificial deep neural networks are only loosely based on models of a few regions of the human cerebral cortex, and the brain does a lot more than recognize patterns in structured data.

In the same way, you need a generalized AI mechanism that models those patterns in a connected, multi-dimensional way, with enough control to modify its hypotheses about the world, enrich them by adding more abstract relationships, and discard any that are no longer relevant.
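One way to picture a knowledge store that can revise its hypotheses is a set of (subject, relation, object) triples, each with a confidence score. The `HypothesisGraph` class below is a hypothetical sketch, not an established API or Symbl’s implementation: `observe` nudges confidence toward 1 or 0 as evidence arrives, `abstract` adds a more general relationship derived from specific ones, and `prune` discards hypotheses whose confidence has fallen below a threshold. The simple moving-average update and the 0.2 threshold are assumptions chosen for clarity.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# A hypothesis is a (subject, relation, object) triple, e.g. ("apple", "has_color", "red").
Triple = Tuple[str, str, str]


@dataclass
class HypothesisGraph:
    """Hypothetical store of beliefs about the world, each with a confidence in [0, 1]."""
    confidence: Dict[Triple, float] = field(default_factory=dict)

    def observe(self, triple: Triple, supports: bool, rate: float = 0.3) -> None:
        """Modify a hypothesis: move its confidence toward 1 if the evidence
        supports it, toward 0 if it contradicts it. Unseen triples start at 0.5."""
        current = self.confidence.get(triple, 0.5)
        target = 1.0 if supports else 0.0
        self.confidence[triple] = current + rate * (target - current)

    def abstract(self, specifics: List[Triple], general: Triple) -> None:
        """Enrich the graph with a more abstract relationship whose confidence
        is the mean confidence of the specific triples it generalizes."""
        scores = [self.confidence.get(t, 0.5) for t in specifics]
        self.confidence[general] = sum(scores) / len(scores)

    def prune(self, threshold: float = 0.2) -> List[Triple]:
        """Get rid of hypotheses that are no longer credible; return what was dropped."""
        dropped = [t for t, c in self.confidence.items() if c < threshold]
        for t in dropped:
            del self.confidence[t]
        return dropped


graph = HypothesisGraph()
graph.observe(("apple", "has_color", "red"), supports=True)
graph.observe(("apple", "has_color", "green"), supports=True)
graph.abstract(
    [("apple", "has_color", "red"), ("apple", "has_color", "green")],
    ("apple", "has_attribute", "color"),
)
for _ in range(3):  # repeated contradicting evidence
    graph.observe(("apple", "is_a", "vegetable"), supports=False)

dropped = graph.prune()
print(dropped)  # [('apple', 'is_a', 'vegetable')]
```

After three contradicting observations, the "apple is a vegetable" hypothesis falls from 0.5 to about 0.17 and is pruned, while the color hypotheses and the more abstract "apple has the attribute color" survive.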

You’ll need a mathematical approach to generalization and modeling to build a multi-dimensional knowledge graph sophisticated enough to capture the generalized relationships between the concepts and causes in the world that the conversation intelligence (CI) system is exposed to. To achieve deep understanding, it has to generalize concepts at the most fundamental levels, such as space, time, and objects.

But knowledge is only useful if the system can apply it and reason with it. A conversation intelligence system should be able to make deductions and reach conclusions based on the knowledge it has.

Symbl.ai harnesses both deep learning and deep understanding. Symbl’s API can learn and pull insights in real time without you having to code it yourself, and this brings you the most sophisticated Conversation Intelligence API available.

Further reading:

- Deep learning and neural network guide

- Video: The Future of AI: from Deep Learning to Deep Understanding, Ben Goertzel.

- Can we copy the brain?