Sentiment analysis is the process of detecting underlying positive, negative, or neutral sentiment in text, voice, and video conversations. For businesses, it helps inform market research and customer service strategy, but developers often find it difficult to get right with existing sentiment analysis APIs. Symbl.ai’s Sentiment API is flexible, extensible, and user-friendly, so you can easily transcribe conversations and capture sentiment in real time over WebSockets.

Why perform sentiment analysis?

Sentiment analysis applies natural language processing (NLP) to text, voice, and video conversations to detect underlying positive, negative, or neutral sentiment. While this would certainly be handy in relationships, it’s mostly used by businesses to keep tabs on brand reputation, detect attitudes in customer feedback, and generally understand their customers’ needs better.

When done in real time, sentiment analysis is particularly useful for capitalizing on key moments in a conversation or for damage control. If you can identify critical issues in real time, you can act right away. For example, if a customer is getting out of hand on a support call, a trained AI could detect the escalating negative sentiment and suggest the agent’s next action, or even alert a manager.

Many big-name companies and even governments use sentiment analysis to get instant insights from social media and measure the public’s reaction to a recent ad or announcement. Expedia Canada, for example, found its brand perception dipping after airing a TV commercial. It turned out that most people online despised the violin playing chaotically in the background, to the point that they were being “put off” the brand itself.

So, Expedia aired a follow-up commercial showing the offending violin being smashed, and managed to turn the negative conversations around the campaign into positive ones.

Clearly, sentiment analysis is a useful ability to have. But to capture all those handy insights from social or other platforms, you’ll need some help.

The problem with capturing sentiment in real time

Human language is complex. Even humans have trouble grasping nuances like sarcasm, irony, bias, and slang, so it’s no wonder machines struggle to analyze it accurately.

Take, for example, the following sentence: “I just missed my train. Fantastic.”

Most humans would catch the sarcastic tone and identify the negative sentiment, but unless a machine has contextual understanding, it cannot pick up on that sarcasm and might naively categorize the sentiment behind the word “fantastic” as positive.
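To make the problem concrete, here is a deliberately naive, lexicon-based scorer in JavaScript (the language of the code later in this post). The word list and scoring rule are invented for illustration and say nothing about how Symbl.ai works. Because it scores each sentence in isolation, with no context, it labels “Fantastic.” as positive.

```javascript
// Toy lexicon (hypothetical): each known word maps to a polarity.
const LEXICON = { fantastic: 1, great: 1, love: 1, terrible: -1, awful: -1, hate: -1 };

// Scores one sentence by summing word polarities, ignoring all context.
function naiveScore(sentence) {
  const words = sentence.toLowerCase().match(/[a-z']+/g) || [];
  return words.reduce((sum, word) => sum + (LEXICON[word] || 0), 0);
}

function label(score) {
  if (score > 0) return "positive";
  if (score < 0) return "negative";
  return "neutral";
}

const text = "I just missed my train. Fantastic.";
// Split into sentences and score each one independently.
const sentences = (text.match(/[^.!?]+[.!?]/g) || []).map((s) => s.trim());
for (const s of sentences) {
  console.log(`${s} -> ${label(naiveScore(s))}`);
}
// I just missed my train. -> neutral
// Fantastic. -> positive
```

A model with contextual understanding would instead read the second sentence in light of the first and flag the pair as negative.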

So, if you want the most accurate sentiment analysis, you’ll need specialized software built on NLP and contextual understanding. The problem is that, for the most part, these capabilities are walled off behind large software development kits (SDKs) or siloed in applications that don’t give you the granularity, flexibility, or usability of a fully programmable API.

But don’t fret: we know of a real-time sentiment analysis API that has it all. It lets you generate actionable analytics on a user-by-user, user-by-call, or call-by-call basis, so you never miss out on high-value insights from your conversations.

Meet Symbl.ai’s WebSockets Sentiment API

Symbl.ai’s Sentiment API is part of a comprehensive suite of conversation intelligence APIs designed to swiftly analyze human conversations and surface the most valuable insights — all in real time.

The Sentiment API, in particular, is built on aspect-based sentiment analysis, a text-based technique that categorizes data by aspect and identifies the sentiment attributed to each one. It works with Symbl.ai’s Messages API, which provides the real-time transcription as individual text messages; the Sentiment API then extracts the sentiment of each message and attributes it to a relevant aspect. These “aspects” are what Symbl.ai calls topics: essentially the main subjects discussed in a conversation.
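The aggregation half of the aspect-based idea can be sketched in a few lines of JavaScript: collect per-message polarity scores and average them per topic. This only shows the bookkeeping; detecting topics and scoring sentiment (the hard parts) are assumed to be done already. The `topic` and `polarity` fields, the -1 to 1 range, and the one-topic-per-message simplification are hypothetical, not Symbl.ai’s actual response format.

```javascript
// Hypothetical input shape: each message carries one topic (aspect) and a
// polarity score assumed to lie between -1 and 1.
function sentimentByAspect(messages) {
  const totals = new Map();
  for (const { topic, polarity } of messages) {
    const entry = totals.get(topic) || { sum: 0, count: 0 };
    entry.sum += polarity;
    entry.count += 1;
    totals.set(topic, entry);
  }
  // Average the scores collected for each topic.
  const averages = {};
  for (const [topic, { sum, count }] of totals) {
    averages[topic] = sum / count;
  }
  return averages;
}

const messages = [
  { topic: "pricing", polarity: -0.5 },
  { topic: "pricing", polarity: -0.25 },
  { topic: "support", polarity: 0.75 },
];
console.log(sentimentByAspect(messages)); // { pricing: -0.375, support: 0.75 }
```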

Furthermore, unlike most APIs in this market, Symbl.ai’s Sentiment API is:

Non-assumptive: Symbl.ai doesn’t assume emotion when analyzing polarity (positive, negative, neutral). Instead, it returns a value indicating the degree of negativity or positivity. You can define your own value threshold and then freely adjust it after testing and verifying the results.

Non-invasive: Rather than analyzing a speaker’s actual voice (a very personal aspect of their identity that they might feel uncomfortable having put under a microscope), Symbl.ai pulls its results from the conversation transcripts (i.e., messages), which are just cold, hard text and much less intimate.

Fully extensible: Every result provided in the analysis, from the messages to the topics pulled from the conversation, is completely programmable. This means savvy developers like yourself can fling those analytics into other APIs for even further insights, or use them to build your own stats and data around sentiment.

User-friendly: It just makes life easier for developers. Symbl.ai’s APIs give you out-of-the-box integrations so you can easily harness a human-level understanding across different domains – without upfront training data, wake words, or custom classifiers.
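Because the API hands you a score rather than a fixed label, and every result is programmable, the logic built on top is yours to write. The JavaScript sketch below shows two hypothetical examples: turning a polarity score into a label with adjustable cut-offs, and flagging a possibly escalating call (like the support scenario earlier) when a rolling average of recent scores drops too low. It assumes scores between -1 and 1; the cut-offs of -0.3 and 0.3, the window size, and the alert threshold are arbitrary placeholders to tune, not documented Symbl.ai values.

```javascript
// Sketch only: thresholds, window size, and function names are
// illustrative assumptions, not Symbl.ai defaults or SDK functions.

// Maps a polarity score (assumed to range from -1 to 1) to a label using
// cut-offs you can tune after testing against your own conversations.
const DEFAULT_THRESHOLDS = { negative: -0.3, positive: 0.3 };

function polarityLabel(score, thresholds = DEFAULT_THRESHOLDS) {
  if (score <= thresholds.negative) return "negative";
  if (score >= thresholds.positive) return "positive";
  return "neutral";
}

// Keeps a rolling window of the most recent scores and returns true once
// the window is full and its average is at or below the alert threshold.
function createEscalationDetector({ windowSize = 3, threshold = -0.5 } = {}) {
  const recent = [];
  return function addScore(score) {
    recent.push(score);
    if (recent.length > windowSize) recent.shift();
    const average = recent.reduce((sum, s) => sum + s, 0) / recent.length;
    return recent.length === windowSize && average <= threshold;
  };
}

console.log(polarityLabel(-0.45)); // negative
console.log(polarityLabel(0.2)); // neutral
console.log(polarityLabel(0.2, { negative: -0.1, positive: 0.1 })); // positive

const isEscalating = createEscalationDetector();
const results = [0.25, -0.5, -0.5, -0.75].map((score) => isEscalating(score));
console.log(results); // [ false, false, false, true ]
```

Keeping the thresholds as a parameter, rather than hard-coding them, makes it easy to adjust them per use case once you’ve checked the labels against real calls.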

So how can you implement this magical-sounding API?

How to run sentiment analysis with Symbl.ai

To see Symbl.ai’s sentiment analysis API in action, you have to process a conversation using Symbl.ai.

Symbl.ai handles the audio/video streaming through the ever-popular WebSocket protocol to transcribe and capture sentiment in real time. This means you can integrate it directly in a browser or on a server.

So, step zero is to set up Symbl.ai’s WebSockets API for real-time transcription in your browser. Once you’ve configured the WebSocket endpoint, run the code, and become the proud owner of a transcription, you can start the sentiment analysis.

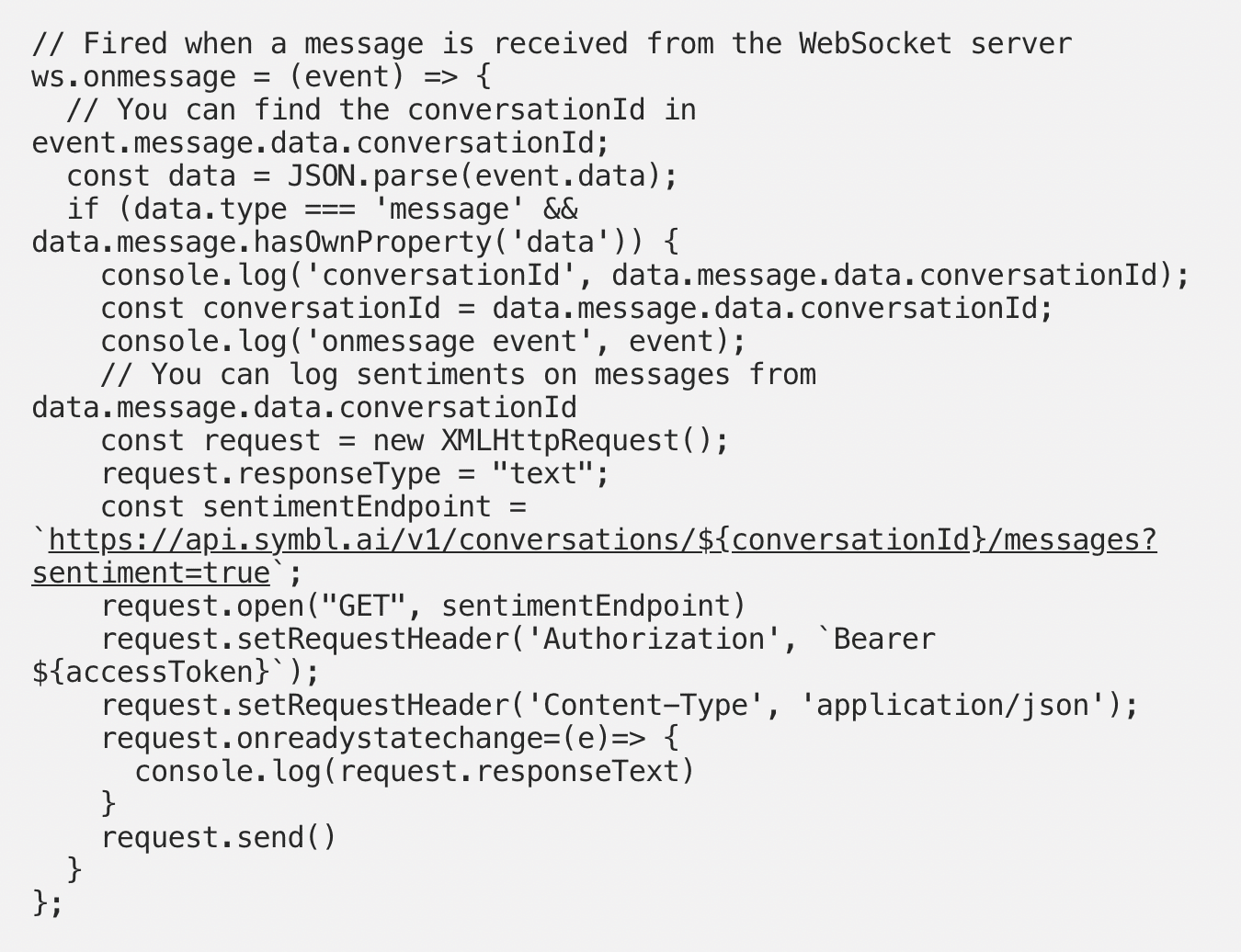

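1. First, capture the `conversationId` from the WebSocket data (here `data` is the parsed JSON payload of the incoming WebSocket message):

   ```javascript
   const conversationId = data.message.data.conversationId;
   ```

2. Next, create the request. There are multiple libraries for handling HTTP requests, so feel free to refactor the `XMLHttpRequest` used here.

   ```javascript
   const request = new XMLHttpRequest();
   ```

3. Configure the request, setting the response type to text:

   ```javascript
   request.responseType = "text";
   ```

4. Configure the endpoint. Note the backticks: `${conversationId}` is only substituted inside a template literal.

   ```javascript
   const sentimentEndpoint = `https://api.symbl.ai/v1/conversations/${conversationId}/messages?sentiment=true`;
   ```

5. Open the request:

   ```javascript
   request.open("GET", sentimentEndpoint);
   ```

6. Set the request’s headers (`accessToken` is assumed to hold the Symbl.ai access token you used for the WebSocket connection):

   ```javascript
   request.setRequestHeader("Authorization", `Bearer ${accessToken}`);
   request.setRequestHeader("Content-Type", "application/json");
   ```

7. Create a function that logs the resulting sentiments to the console once the response has arrived:

   ```javascript
   request.onreadystatechange = () => {
     // Only log once the request has completed successfully.
     if (request.readyState === XMLHttpRequest.DONE && request.status === 200) {
       console.log(request.responseText);
     }
   };
   ```

8. Finally, send the request:

   ```javascript
   request.send();
   ```

And voila! That’s the sentiment analysis request, ready to run inside your WebSocket’s `onmessage` handler.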
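If you’d rather use `fetch` than `XMLHttpRequest` (step 2 invites that refactor), here is a sketch of the steps above wired into a WebSocket `onmessage` handler. It assumes a global `fetch` (modern browsers, Node.js 18+) and that the conversation ID arrives in `data.message.data.conversationId`, as in step 1. `buildSentimentUrl`, `fetchSentiments`, and `createOnMessage` are hypothetical names, not part of any Symbl.ai SDK.

```javascript
// Sketch only: the steps above rewritten with fetch and wired into a
// WebSocket onmessage handler. Function names are hypothetical.

// Builds the Messages API URL from step 4, with sentiment enabled.
function buildSentimentUrl(conversationId) {
  return `https://api.symbl.ai/v1/conversations/${encodeURIComponent(conversationId)}/messages?sentiment=true`;
}

// Sends the authenticated GET request (steps 5 to 8) and resolves with
// the parsed JSON body. Requires a global fetch.
async function fetchSentiments(conversationId, accessToken) {
  const response = await fetch(buildSentimentUrl(conversationId), {
    method: "GET",
    headers: {
      Authorization: `Bearer ${accessToken}`,
      "Content-Type": "application/json",
    },
  });
  if (!response.ok) {
    throw new Error(`Sentiment request failed with status ${response.status}`);
  }
  return response.json();
}

// Returns an onmessage handler that extracts the conversation ID (step 1)
// the first time it appears and passes it to onConversationId.
function createOnMessage(onConversationId) {
  let conversationId = null;
  return (event) => {
    const data = JSON.parse(event.data);
    const id =
      data.type === "message" && data.message && data.message.data
        ? data.message.data.conversationId
        : undefined;
    if (conversationId === null && id) {
      conversationId = id;
      onConversationId(id);
    }
  };
}
```

To use it, you might set `ws.onmessage = createOnMessage((id) => fetchSentiments(id, accessToken).then(console.log));`, where `ws` and `accessToken` come from your WebSocket setup. Since sentiment can only be returned for messages that have already been processed, you will likely want to request it again later in the conversation rather than only when the ID first appears.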

You can also use your conversationId in other API calls to access more valuable conversation data, including:

- Speaker ratio: The time each speaker talks and listens for

- Talk time: How long they speak for

- Silence: How long they don’t speak for

- Pace: Words per minute during the conversation

- Overlap: When several speakers are talking over each other
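Pulling these metrics means making another authenticated request with the same conversationId. The sketch below assumes an analytics endpoint at `/v1/conversations/{conversationId}/analytics`; that path is not given in this post, so confirm it (and the shape of the response) in Symbl.ai’s API reference before relying on it. It uses the global `fetch` available in modern browsers and Node.js 18+, and `analyticsRequest` and `fetchAnalytics` are hypothetical helper names.

```javascript
// Assumption: analytics live at /v1/conversations/{conversationId}/analytics.
// Verify the path and response fields against Symbl.ai's API reference.
const SYMBL_API = "https://api.symbl.ai/v1";

// Builds the URL and fetch options without sending anything.
function analyticsRequest(conversationId, accessToken) {
  return {
    url: `${SYMBL_API}/conversations/${encodeURIComponent(conversationId)}/analytics`,
    options: {
      method: "GET",
      headers: {
        Authorization: `Bearer ${accessToken}`,
        "Content-Type": "application/json",
      },
    },
  };
}

// Sends the request and resolves with the parsed JSON body.
async function fetchAnalytics(conversationId, accessToken) {
  const { url, options } = analyticsRequest(conversationId, accessToken);
  const response = await fetch(url, options);
  if (!response.ok) {
    throw new Error(`Analytics request failed with status ${response.status}`);
  }
  return response.json();
}
```

Separating request construction from sending keeps the URL and headers easy to check in isolation; a call such as `fetchAnalytics(conversationId, accessToken).then(console.log)` would then log whatever metrics the endpoint returns.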

These are also fully programmable and allow savvy developers, like yourself, to create application logic or notifications that significantly augment their conversational experiences. (Although if you get stuck, just jump into Symbl.ai’s Slack channel for help.)

With Symbl.ai’s flexible and programmable APIs in your corner, transcribing a speaker and capturing their sentiments in real time is just the tip of the iceberg.

Additional reading

To learn more about the wonders of sentiment analysis, check out these helpful sources:

- Sentiment Analysis: Concept, Analysis and Applications

- Sentiment Analysis Algorithms and Applications: A Survey

- A Comprehensive Guide to Aspect-based Sentiment Analysis

- How to use Symbl.ai’s voice API over WebSocket to generate real-time insights