Conversation intelligence can automatically clip and index your long-form voice and video content (such as podcasts, webinars, and conferences), making that content easy to distribute. Conversation intelligence APIs can do this automatically, indexing content by topic, by parent-child hierarchy, or by Q&A, for an improved user experience.

The problem with long-form voice and video content

We have all seen the rise of digital content, from podcasts and webinars to conferences held remotely. If users can jump straight to the parts of long-form content that interest them most, without spending hours navigating recordings, and creators can distribute their content in a focused way tailored to their audience, everyone benefits.

Done well, identifying which content suits a given audience pays off, because that audience is more likely to engage and to trust that your message, product, or brand understands what they want and need. This is similar to how Netflix shows different TV shows to audiences in different regions of the world.

How to navigate long-form content with speech recognition

Long-form content is hard to navigate because it is a continuous, free-flowing stream of information.

Most businesses today clip long-form content into smaller, consumable pieces by hand. To do this, someone has to review the content, note what is being discussed at each point, and then clip the video or audio or add tags to it (a tedious process). Once the file is clipped, you can create an index from which you can navigate the content, pull out topics or information, or skip to the section you’re most interested in.

Another way to navigate long-form voice or video content is to transcribe it and then search the transcript for keywords and topics.
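To make the limits of keyword search concrete, here is a minimal Python sketch of searching a timestamped transcript. The `Segment` shape, the `find_keyword` helper, and the sample lines are all hypothetical and not part of any Symbl.ai API; the point is only that a literal match misses a segment that discusses the same subject in different words.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One transcript segment with start/end offsets in seconds (hypothetical shape)."""
    start: float
    end: float
    text: str


def find_keyword(segments: list[Segment], keyword: str) -> list[tuple[float, float]]:
    """Return the (start, end) offsets of every segment that mentions `keyword`.

    Matching is a plain case-insensitive substring check, so it only finds
    the literal word; a segment about the same idea in other words is missed.
    """
    needle = keyword.lower()
    return [(s.start, s.end) for s in segments if needle in s.text.lower()]


transcript = [
    Segment(0.0, 12.5, "Welcome to the show, today we talk about pricing."),
    Segment(12.5, 30.0, "Our first guest runs a subscription business."),
    Segment(30.0, 41.0, "How much should a monthly plan cost?"),
]

print(find_keyword(transcript, "pricing"))  # [(0.0, 12.5)]
```

The third segment is clearly about pricing, yet the search skips it because the word never appears.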

The problem is that these methods are time-consuming, and few solutions can automatically understand the content in context and identify the different topics it contains.

…Enter conversation intelligence.

Conversation intelligence helps you level up the value of long-form content

Symbl.ai offers a comprehensive suite of conversation intelligence APIs that can analyze human conversations and speech without requiring upfront training data, wake words, or custom classifiers.

Summaries

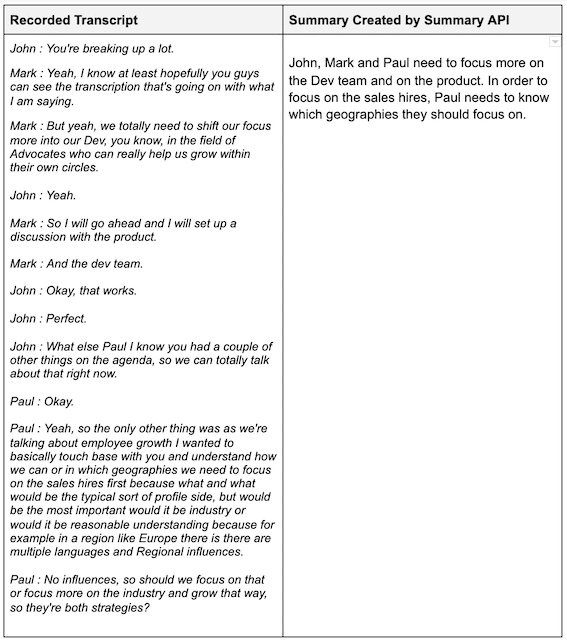

One of Symbl.ai’s features that’s really useful for long form voice/video content is the Summary API. With this API you can create summaries of your long form content in real time or after it has ended. Using Summary API, you can create summaries that are succinct and context-based. Here’s an example of a summary generated by Symbl.ai from a recorded transcript:

Indexing

With conversation intelligence, you can automatically index voice or video content. You can index by topic, by parent-child hierarchy, or by Q&A.

Three ways to index long-form content with Symbl.ai’s conversation intelligence API:

1. Topics

Symbl.ai can identify topics (the main subjects being discussed) in long-form voice or video content and tag the content with them. Every time the context of the conversation switches, Symbl.ai’s topic algorithm detects the change and extracts the most important topics from that part of the content.

Every topic has one or more Symbl.ai Message IDs, each of which uniquely identifies a message (sentence) in which the topic comes up. For example, Topic A might be the main theme of your content and be linked to six different Message IDs, which map to timestamps in the conversation. Because topics are identified in context, searching your content becomes easier: you don’t need to think of specific keywords.

Once you have the topics of the content and their Message IDs, you can automatically index your long-form voice or video content and give users a flexible, easy way to navigate or search hours of recordings.
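As a rough illustration of that indexing step, the Python sketch below turns topics and their Message IDs into a topic-to-timestamps index. The data shapes (`messageIds`, `start`, `end` in seconds) are simplified, hypothetical stand-ins rather than the exact Symbl.ai response format; check the Topics and Messages API documentation for the real field names and time formats.

```python
from collections import defaultdict

# Hypothetical, simplified data shapes. A real API response will differ;
# check the provider's docs for the actual field names and time formats.
messages = [
    {"id": "m1", "start": 0.0, "end": 8.0},
    {"id": "m2", "start": 8.0, "end": 15.0},
    {"id": "m3", "start": 300.0, "end": 310.0},
]
topics = [
    {"text": "pricing", "messageIds": ["m1", "m3"]},
    {"text": "onboarding", "messageIds": ["m2"]},
]


def build_topic_index(topics, messages):
    """Map each topic to the sorted (start, end) offsets of its messages."""
    by_id = {m["id"]: m for m in messages}
    index = defaultdict(list)
    for topic in topics:
        for message_id in topic["messageIds"]:
            message = by_id.get(message_id)
            if message is None:
                continue  # skip IDs with no matching message
            index[topic["text"]].append((message["start"], message["end"]))
    # Sort each topic's segments so a player can jump through them in order.
    return {name: sorted(spans) for name, spans in index.items()}


index = build_topic_index(topics, messages)
print(index["pricing"])  # [(0.0, 8.0), (300.0, 310.0)]
```

A player UI could list the topic names and seek to the first offset of whichever topic a user selects.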

2. Parent-Child Hierarchy

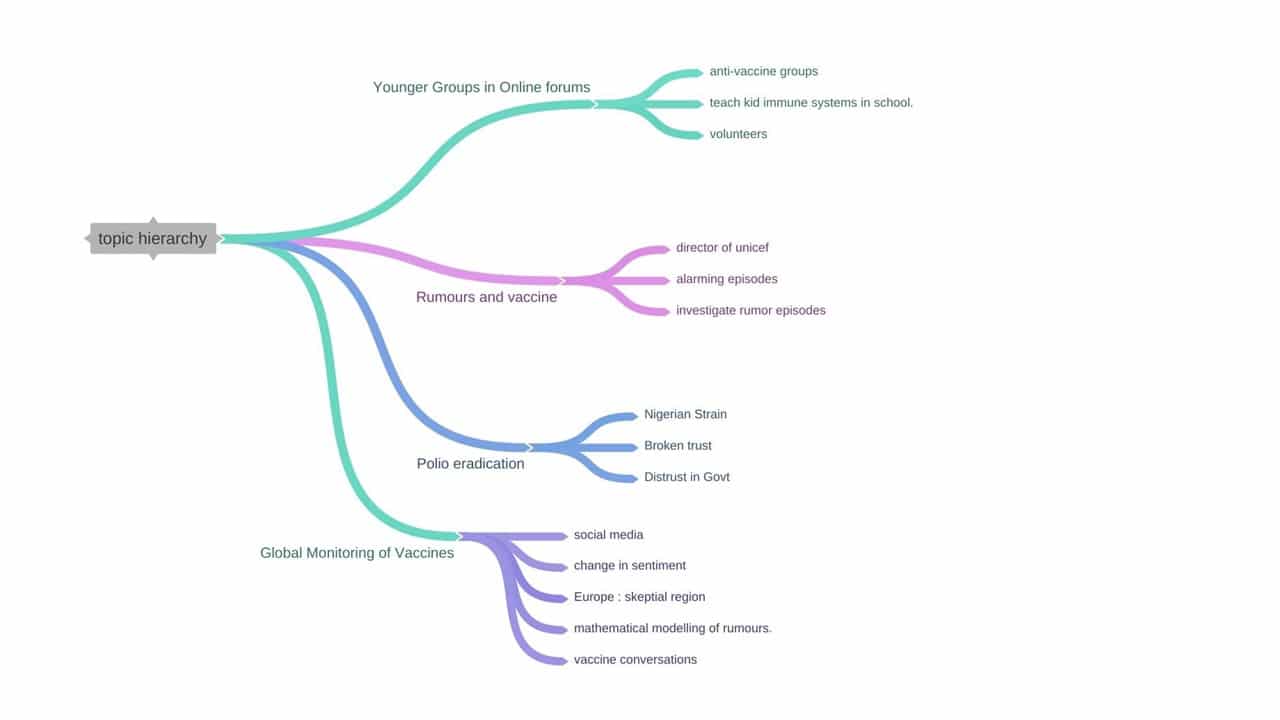

Any conversation or presentation can have multiple related topics that can be organized into a hierarchy for better insights and consumption. Symbl.ai’s Topic Hierarchy algorithm finds a pattern in the conversation and creates parent (global) topics and each parent topic can have multiple child (local) topics within it.

Example of parent-child topic hierarchy in a conversation.

- Parent Topic: The highest level of content organization. These are the key points that the speakers expanded on and discussed at length.

- Child Topic: The subtopics that originate from, or roll up into, a parent topic. Child topics are linked to their parent topic because they form time chunks within the part of the conversation that the parent topic covers.

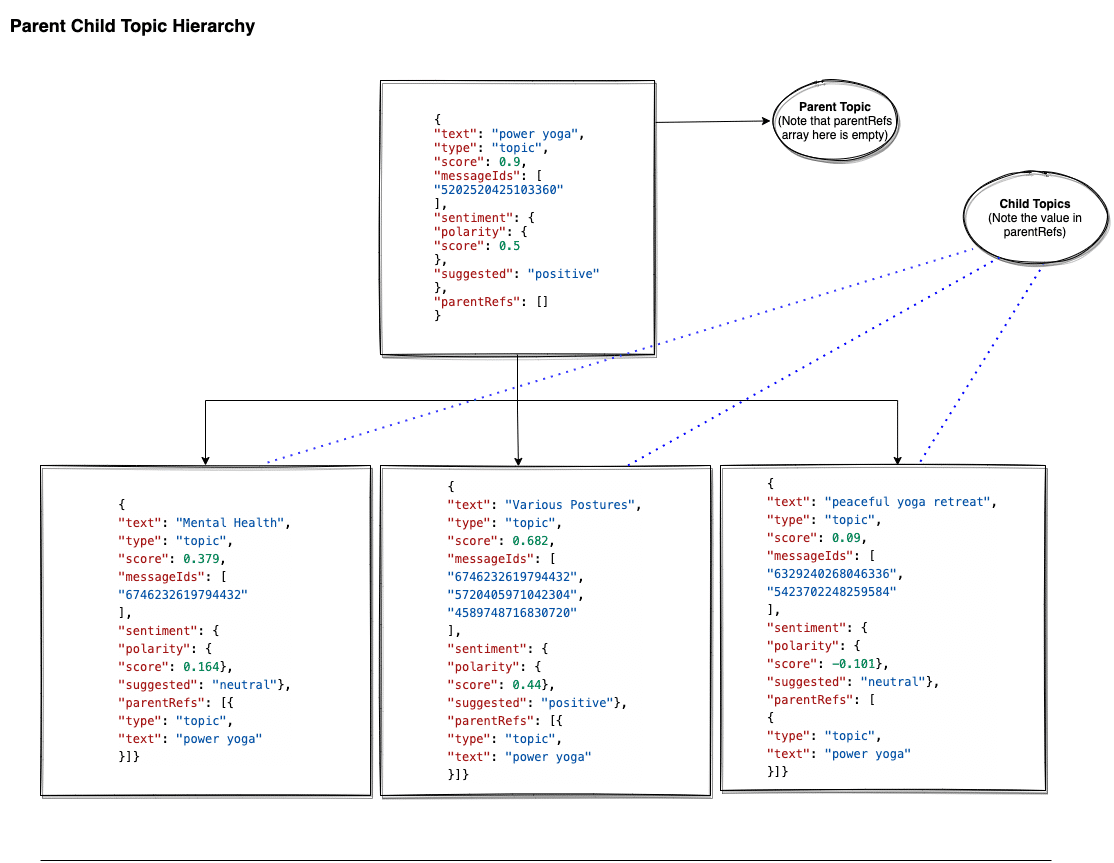

Example of a parent-child topic hierarchy with Symbl.ai Topics API.

Once the long-form content is split into parent-child topics, you can use them, together with their corresponding Message IDs, to build a timeline and split the content into segments.
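Here is a minimal Python sketch of that timeline step, assuming a hypothetical nested shape in which each parent topic lists its child topics and each child lists its Message IDs, plus a lookup from Message ID to a (start, end) offset in seconds. Symbl.ai’s real hierarchy output is structured differently (see its Topics API docs), so treat the field names as placeholders.

```python
# Hypothetical nested shape: each parent topic lists its child topics,
# and each child lists the Message IDs it covers. Times are seconds.
message_times = {
    "m1": (0.0, 20.0),
    "m2": (20.0, 45.0),
    "m3": (45.0, 90.0),
    "m4": (90.0, 120.0),
}
hierarchy = [
    {"parent": "Product roadmap", "children": [
        {"child": "Mobile app", "messageIds": ["m3"]},
        {"child": "Release dates", "messageIds": ["m4"]},
    ]},
    {"parent": "Hiring", "children": [
        {"child": "Engineering roles", "messageIds": ["m1", "m2"]},
    ]},
]


def build_timeline(hierarchy, message_times):
    """Return parent topics ordered by start time, each with its child chunks."""
    timeline = []
    for node in hierarchy:
        chunks = []
        for child in node["children"]:
            spans = [message_times[m] for m in child["messageIds"] if m in message_times]
            if spans:
                # A child chunk runs from its earliest message to its latest one.
                start = min(s for s, _ in spans)
                end = max(e for _, e in spans)
                chunks.append((start, end, child["child"]))
        if not chunks:
            continue
        chunks.sort()
        # The parent spans all of its child chunks.
        timeline.append({
            "parent": node["parent"],
            "start": chunks[0][0],
            "end": max(e for _, e, _ in chunks),
            "children": chunks,
        })
    return sorted(timeline, key=lambda entry: entry["start"])


for entry in build_timeline(hierarchy, message_times):
    print(entry["parent"], entry["start"], entry["end"])
# Hiring 0.0 45.0
# Product roadmap 45.0 120.0
```

Each parent entry gives a chapter boundary for the timeline, and its child chunks give finer cut points inside that chapter.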

3. Clips of Q&As and Sentiments

You can create clips of the most important questions and their answers and then display them however you want. For example, you can take a podcast and clip the Q&As throughout and compile them into a highlight reel. You could do this with all the Q&As or surface certain ones by topic.
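A Q&A highlight reel boils down to a list of (start, end) cut points. The Python sketch below assumes you already have an ordered list of timestamped messages and the IDs of the messages detected as questions; treating the message(s) right after a question as its answer is a deliberately simple, hypothetical heuristic, not how any particular API defines answers.

```python
# Hypothetical inputs: an ordered list of transcript messages (seconds) and
# the IDs of messages detected as questions. Treating the next message(s)
# as the answer is a simplifying assumption, not an API definition.
messages = [
    {"id": "m1", "start": 0.0, "end": 6.0, "text": "Thanks for joining us."},
    {"id": "m2", "start": 6.0, "end": 9.0, "text": "What got you into podcasting?"},
    {"id": "m3", "start": 9.0, "end": 25.0, "text": "I started recording with friends."},
    {"id": "m4", "start": 25.0, "end": 40.0, "text": "Let's move on to sponsors."},
    {"id": "m5", "start": 40.0, "end": 43.0, "text": "How do you find sponsors?"},
    {"id": "m6", "start": 43.0, "end": 60.0, "text": "Mostly through our listeners."},
]
question_ids = {"m2", "m5"}


def qa_clips(messages, question_ids, answer_messages=1):
    """Return (start, end) clips covering each question plus its answer."""
    clips = []
    for i, message in enumerate(messages):
        if message["id"] not in question_ids:
            continue
        # Extend the clip through the following `answer_messages` messages,
        # stopping at the end of the recording.
        last = messages[min(i + answer_messages, len(messages) - 1)]
        clips.append((message["start"], last["end"]))
    return clips


print(qa_clips(messages, question_ids))  # [(6.0, 25.0), (40.0, 60.0)]
```

The resulting offsets can be passed to whatever media tool you use to cut and concatenate clips; to surface Q&As by topic, filter `question_ids` against a topic’s Message IDs first.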

You can follow the same process to create clips by sentiment. For example, you could clip all the positive, neutral, or negative passages in the voice or video content and use them to create another highlight reel.
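A sketch of sentiment clipping in Python follows. It assumes each message carries a polarity score between -1 and 1; the ±0.3 cutoffs and the `label` and `sentiment_clips` helpers are illustrative assumptions, not Symbl.ai defaults. Messages with the wanted label that touch end to end are merged, so a run of positive sentences becomes one clip instead of several fragments.

```python
# Hypothetical input: each message carries a polarity score in [-1, 1].
# The +/-0.3 cutoffs are illustrative assumptions, not provider defaults.
messages = [
    {"start": 0.0, "end": 5.0, "polarity": 0.8},
    {"start": 5.0, "end": 9.0, "polarity": 0.6},
    {"start": 9.0, "end": 15.0, "polarity": 0.0},
    {"start": 15.0, "end": 22.0, "polarity": -0.7},
    {"start": 22.0, "end": 30.0, "polarity": 0.5},
]


def label(polarity, threshold=0.3):
    """Bucket a polarity score into positive, negative, or neutral."""
    if polarity >= threshold:
        return "positive"
    if polarity <= -threshold:
        return "negative"
    return "neutral"


def sentiment_clips(messages, wanted):
    """Merge time-adjacent messages with the wanted label into (start, end) clips."""
    clips = []
    for message in messages:
        if label(message["polarity"]) != wanted:
            continue
        if clips and clips[-1][1] == message["start"]:
            # Contiguous with the previous clip: extend it instead of starting a new one.
            clips[-1] = (clips[-1][0], message["end"])
        else:
            clips.append((message["start"], message["end"]))
    return clips


print(sentiment_clips(messages, "positive"))  # [(0.0, 9.0), (22.0, 30.0)]
```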

Upgrade the user experience with Symbl.ai

In this busy world, being able to automatically create a summary or comprehensive view of one or many videos or recordings saves everyone time and makes the content easier to consume. Once you have indexed your long-form content, it is also more accessible to end users, which maximizes your chances of increasing user engagement and retention.

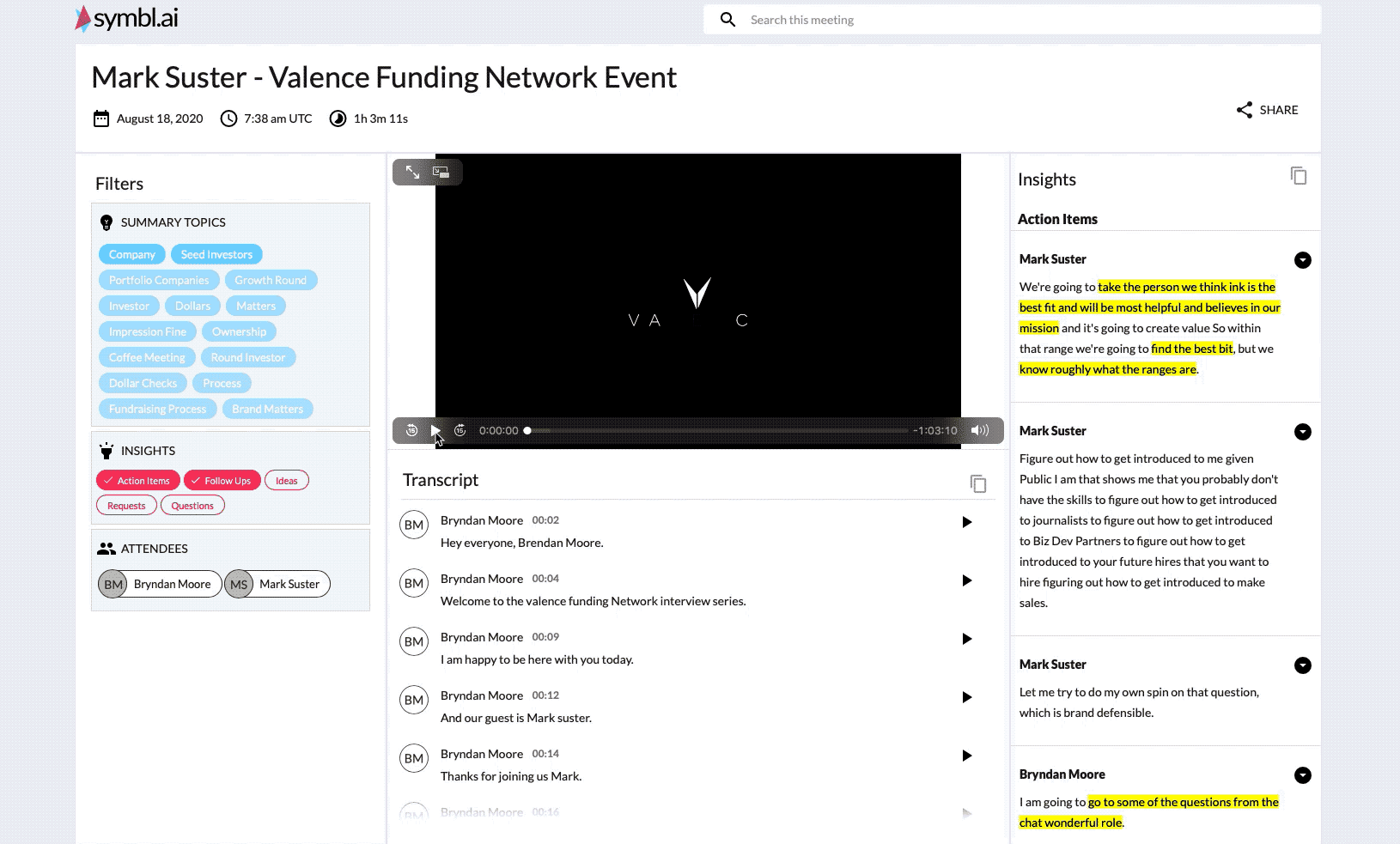

You can also spin up a customized video summary experience that further enhances your experience for recordings with your own brand and get to market fast.

Example of a customized video summary experience with Symbl.ai

Learn more about Symbl.ai’s suite of APIs to automatically index your long-form audio content.