Speech recognition software is designed to capture human to human conversations either in real-time or asynchronously. A more accurate record of the conversation requires testing the system and evaluating its efficacy.

Anyone who has used AI for transcription can tell you that automatic speech recognition software (ASR) has come a long way in recent years.

If you’re developing ASR tech, you know that before you can release it into the wild, you have to make sure that it’s interpreting what’s being said well enough to not frustrate your users (even if the mistakes are hilarious).

Live captioning gone wrong (source)

When evaluating an ASR system, you can compare what the system can capture against a conversation that has already been accurately transcribed. This lets you look at the two conversations and compare the accuracy of them based on certain metrics.

To help you avoid these frustratingly funny faux-pas, you can use the following metrics to evaluate how effective your ASR is at accurately capturing human to human conversations (H2H), like sales calls or company meetings.

Word error rate

This is the most common metric used to evaluate ASR. Word error rate (WER) tells you how many words were logged incorrectly by the system during the conversation.

The formula for calculating WER is: WER = (S+D+I)/N

- S = substitutions: When the system captures a word, but it’s the wrong word. For example, it could capture “John was hoppy” instead of, “John was happy”.

- D = deletions: These are words the system doesn’t include. Like, “John happy”.

- I = insertions: When the system includes words that weren’t spoken, “John sure was happy”.

- N = total number of words spoken: How many words are contained in the entire conversation.

WER is a good starting point when evaluating an ASR system because it gives you a base number to work with. You know the overall accuracy as a percent for your system. A WER of 25% or less is considered average.

The one thing that WER doesn’t tell you is where the mistakes happened. For that, you can use these metrics:

Levenshtein distance

The Levenshtein distance is a string metric that measures the distance between two words. This means it calculates the difference between two words based on how many characters need to be changed to get there.

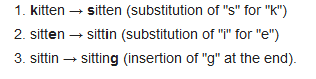

For example, the Levenshtein distance between bird and bard is one. The difference between kitten to sitting would be three because you’d have to make three substitutions to get there.

Going from kitten to sitting (source)

Like a lot of the metrics involved with evaluating ASR, Levenshtein distance ties back to WER because it measures insertions and deletions. It helps by providing a more in-depth look at what changes are being made, rather than just how many changes happen as a whole.

Number of word-level insertions, deletions, and mismatches

This metric tells you how accurate your translation is at the word level. When you compare what the system captures to the original text, you get an output that tells you how many insertions, deletions, and mismatched words happened.

Number of phrase-level insertions, deletions, and mismatches

Similar to the last metric, phrase-level insertions, deletions, and mismatches tell you how accurate your system is at capturing what was said. The difference is that this measures the accuracy at the phrase level, meaning whole sentences or paragraphs.

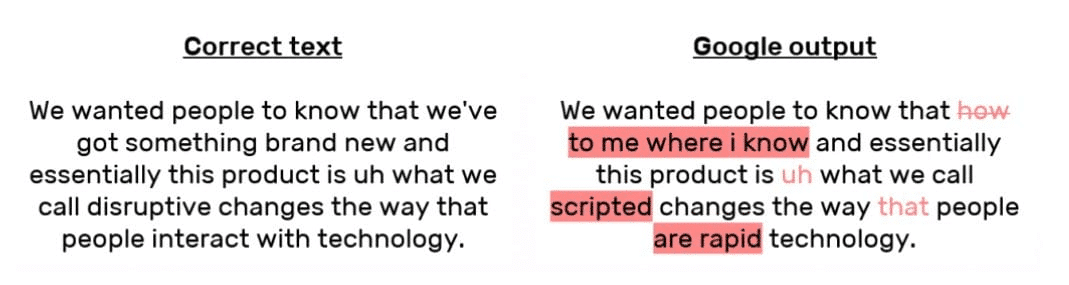

Phrase level insertions (source)

Color highlighted text comparison to visualize the differences

Once you’ve used ASR to capture the sample text, you can compare it to the original. Color highlighted text provides you with a visual representation of the accuracy. It helps you see whether or not your ASR is understanding what is being said at glance, rather than having to analyze WER.

This is useful when you’re fine-tuning your system and want to quickly see how accurate it is.

General statistics about the original and generated files

This is another high-level stat. This information is data like character count, word count, new lines, and file size. When you compare this information in the original file and the one generated by the ASR, they should be identical. The discrepancy between this information gives you a bird’s eye view of the accuracy of your system.

Creating better ASR with speech recognition APIs

All this data helps you understand how effective your ASR is at processing H2H conversations. You might find that you don’t need the system to be perfect, but if you’re to use your ASR to help build a conversational AI system, then the more accurate the better.

To create a system that includes the maximum amount of customization, you can use a speech recognition API that provides the following features:

- Real-time Speech Recognition via Streaming and Telephony for unlimited length with less than 300 ms latency

- Word-level timestamps

- Punctuation, as well as sentence boundary detection

- Speaker diarization (speaker separation)

- Channel separated audio/video files

- Custom vocabulary to recognize your custom keywords and phrases

- Sentence-level sentiment analysis included in the output

- Multiple language support

These features are pretty standard for most speech recognition APIs. Symbl’spowerful ASR API offers these standard features, but we take it one step further by allowing our customers to use features like:

- Key phrase detection to identify key parts of the conversation indicating important information and actions

- Pre-formatted, ready to render transcripts

- Enhance the output with speakers using external speaker talking events

- Indexed with Named Entities and Custom Entities

- Indexed with topics and insights

- Support for all the audio and video codecs in asynchronous API

Customizable features like these allow you to better capture conversations as they’re happening. They also allow you to create the exact kind of ASR system that you need.

When your ASR is capable of accurately understanding both what’s being said and the proper context for the conversation, you can really unlock the power of your H2H conversations. One of the fastest ways to do this is by using a conversational API that gives you everything you need to integrate conversational AI into your voice platform.

With Symbl’s suite of flexible APIs, you can quickly add intelligence to your projects and do things like analyze sales calls to identify the most effective tactics, accurately transcribe and summarize e-learning lectures, follow up on important action items by automatically scheduling meetings, and even fact-check speech asynchronously or in real-time. If you want to compare how well your speech-to-text or speech recognition engine is doing against a human-generated transcription, check out our Automatic Speech Recognition (ASR) Evaluation utility on Github.

Additional reading

If you want to learn more, you can check out the following resources.

- Automatic Speech Recognition (ASR) Evaluation

- The Levenshtein Distance Algorithm

- Word-level Speech Recognition with a Dynamic Lexicon