Real-time Voice AI for

- Customer Calls
- Internal Meetings
- Support Calls
- Sales Calls
- Podcasts, Webinars, and Videos
- Internal and Outbound Calls

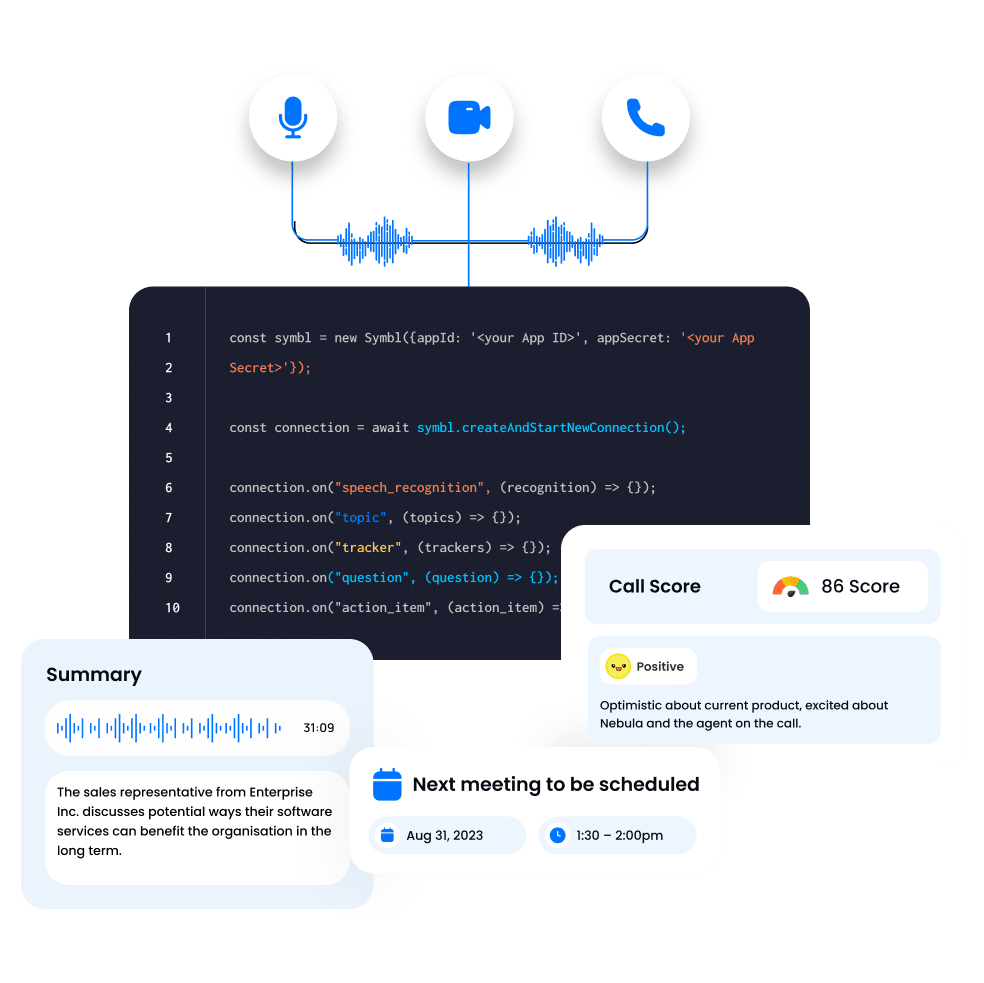

Generate actionable insights from unstructured live calls with our end-to-end, real-time AI platform.

Trusted by Companies of All Sizes

Tap into multimodal interactions in real time

AI Teams

Fine-tune and build real-time RAG use cases with Nebula LLM and Embeddings

Product Teams

Build live experiences into your products and workflows, such as voice bots or live assist for specific roles, using low-code APIs and SDKs.

Revenue Teams

Get real-time notifications about customer churn signals and business growth opportunities.

Data Teams

Uncover patterns and predictions by combining conversation metadata with the rest of your data pipeline.

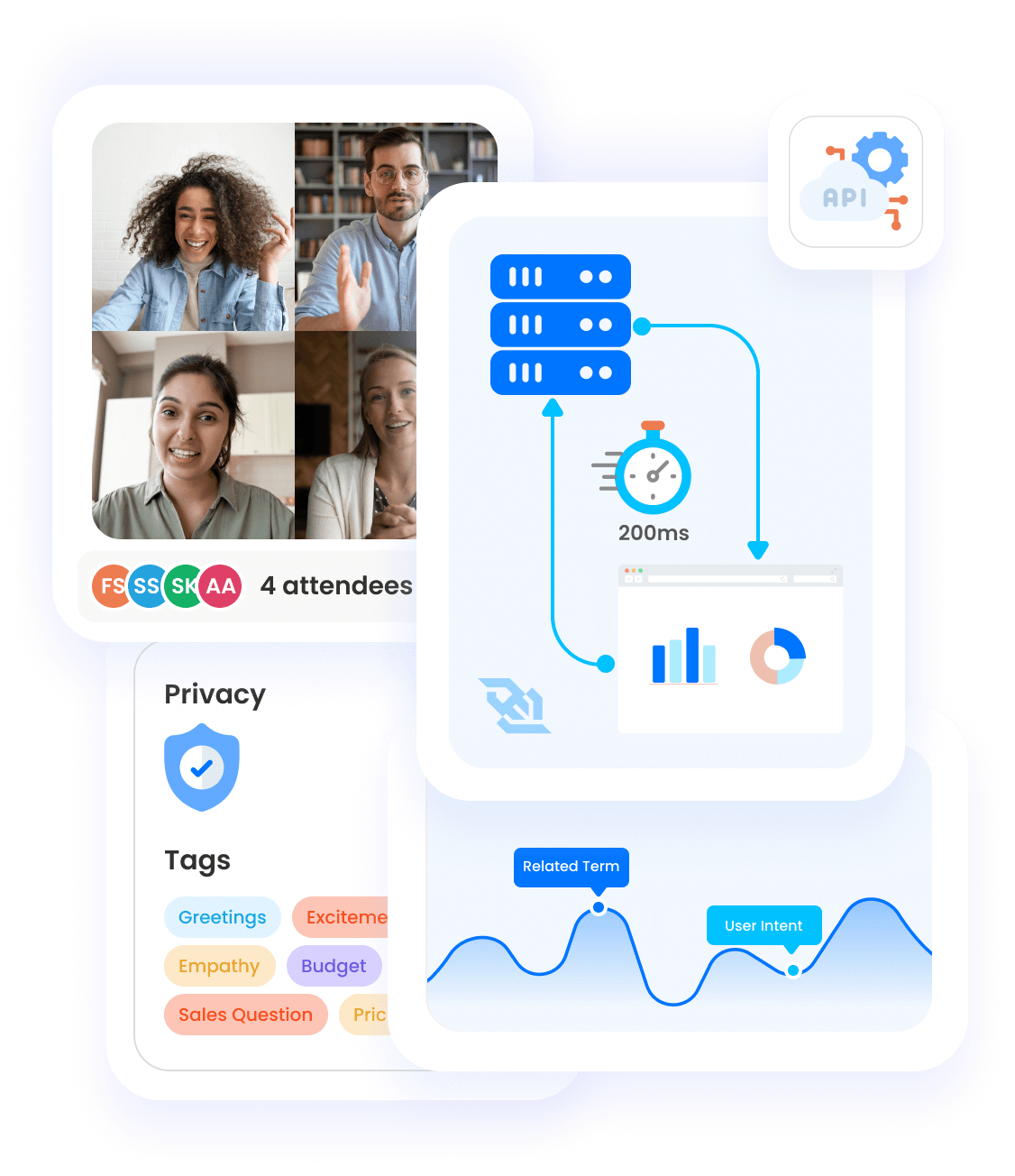

Why Symbl

- End-to-end (E2E) real-time AI platform: Stream live calls, extract conversation insights (such as questions, topics, and sentiment), and generate contextual responses with Nebula.

- Powered by Nebula LLM: Nebula is Symbl.ai’s proprietary LLM that is specialized to understand human interactions in streaming mode.

- Low latency: Stream processing enables efficient real-time data analytics, reducing latency and improving response times.

Built on a Secure, Scalable LLM

Nebula is a specialized LLM pre-trained on a large corpus of diverse business interaction data spanning channels, conversation types, participants, conversation lengths, and industries.

High accuracy on generative tasks

It is specialized for conversational tasks such as conversation scoring, intent detection, chain-of-thought reasoning, question answering, and topic detection.

Low latency

Nebula is optimized for low per-token latency (~40 ms), which enables real-time generative AI experiences with Embeddings.
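To put the ~40 ms figure in perspective, the following sketch estimates how long a reply takes to stream out. It assumes the figure is a fixed per-token generation time and ignores time to first token, network round trips, and transcription delay. `estimateGenerationMs` is a hypothetical helper, not part of any Symbl SDK.

```javascript
// Hypothetical helper: rough time to stream a reply, assuming a fixed per-token
// latency (~40 ms by default). Ignores time to first token, network round trips,
// and any upstream transcription delay.
function estimateGenerationMs(numTokens, perTokenMs = 40) {
  if (!Number.isInteger(numTokens) || numTokens < 0) {
    throw new RangeError('numTokens must be a non-negative integer');
  }
  return numTokens * perTokenMs;
}

// A 50-token suggestion for a live agent would stream out in about 2 seconds.
console.log(estimateGenerationMs(50)); // 2000
```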

Secure

A smaller model that can be scaled cost-effectively and can be deployed and fine-tuned on a private cloud.

By Developers, For Developers

Integrate real-time AI into your applications today with a few lines of code.

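```javascript
// Submit a video file by URL to the Async API for processing.
// node-fetch v2 supports require(); v3 is ESM-only (Node 18+ also has a built-in fetch).
const fetch = require('node-fetch');

const url = 'https://api.symbl.ai/v1/process/video/url';
const accessToken = 'YOUR_ACCESS_TOKEN'; // replace with your Symbl access token

const options = {
  method: 'POST',
  headers: {
    accept: 'application/json',
    'content-type': 'application/json',
    authorization: `Bearer ${accessToken}`,
  },
  body: JSON.stringify({
    url: 'https://youtube/samplefile.wav', // placeholder: use a publicly accessible video file URL
    name: '',
    enableSpeakerDiarization: true,
    diarizationSpeakerCount: 4,
  }),
};

fetch(url, options)
  .then((res) => res.json())
  .then((json) => console.log(json))
  .catch((err) => console.error('error:' + err));
```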
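The Async API processes files in the background, so a submission request typically returns job identifiers rather than finished results. A minimal polling sketch follows. It assumes the submission response contains a `jobId`, that `GET https://api.symbl.ai/v1/job/{jobId}` reports a `status` of `scheduled`, `in_progress`, `completed`, or `failed`, and that the same Bearer token is accepted; check these against the current API reference. `waitForJob` and its options are hypothetical, and `fetchFn` is injectable so the helper can be exercised without network access.

```javascript
// Hypothetical helper: poll an Async API job until it completes or fails.
// Assumes GET https://api.symbl.ai/v1/job/{jobId} returns JSON like { id, status }.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function waitForJob(jobId, accessToken, options = {}) {
  const {
    fetchFn = globalThis.fetch, // built-in fetch (Node 18+); node-fetch v2 also works
    intervalMs = 5000,
    maxAttempts = 60,
  } = options;

  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const res = await fetchFn(`https://api.symbl.ai/v1/job/${jobId}`, {
      headers: { authorization: `Bearer ${accessToken}` },
    });
    if (!res.ok) {
      throw new Error(`Job status request failed with HTTP ${res.status}`);
    }
    const { status } = await res.json();
    if (status === 'completed') return status;
    if (status === 'failed') throw new Error(`Job ${jobId} failed`);
    // Still 'scheduled' or 'in_progress': wait before asking again.
    if (attempt < maxAttempts) await sleep(intervalMs);
  }
  throw new Error(`Job ${jobId} did not finish within ${maxAttempts} attempts`);
}
```

In practice you would call `waitForJob` from an async function with the `jobId` taken from the submission response and your access token, then read results once it resolves.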

```javascript
// Submit an audio file by URL to the Async API for processing.
// node-fetch v2 supports require(); v3 is ESM-only (Node 18+ also has a built-in fetch).
const fetch = require('node-fetch');

const url = 'https://api.symbl.ai/v1/process/audio/url';
const accessToken = 'YOUR_ACCESS_TOKEN'; // replace with your Symbl access token

const options = {
  method: 'POST',
  headers: {
    accept: 'application/json',
    'content-type': 'application/json',
    authorization: `Bearer ${accessToken}`,
  },
  body: JSON.stringify({
    url: 'https://symbltestdata.s3.us-east-2.amazonaws.com/newPhonecall.mp3',
    name: '',
    enableSpeakerDiarization: true,
    diarizationSpeakerCount: 2,
  }),
};

fetch(url, options)
  .then((res) => res.json())
  .then((json) => console.log(json))
  .catch((err) => console.error('error:' + err));
```
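Once processing has completed, the transcript can be read back from the conversation. The sketch below assumes `GET https://api.symbl.ai/v1/conversations/{conversationId}/messages` returns `{ messages: [...] }`, where each message has `text` and `from.name` (with diarization enabled, speaker names may be generic labels). `getMessages` and `formatTranscript` are hypothetical helpers; verify the response shape against the Conversation API reference.

```javascript
// Hypothetical helpers: fetch a processed conversation's messages and print a transcript.
// Assumes GET https://api.symbl.ai/v1/conversations/{conversationId}/messages
// returns JSON like { messages: [{ text, from: { name } }, ...] }.
async function getMessages(conversationId, accessToken, fetchFn = globalThis.fetch) {
  const res = await fetchFn(
    `https://api.symbl.ai/v1/conversations/${conversationId}/messages`,
    { headers: { authorization: `Bearer ${accessToken}` } },
  );
  if (!res.ok) {
    throw new Error(`Messages request failed with HTTP ${res.status}`);
  }
  const body = await res.json();
  return body.messages || [];
}

// Turn messages into "Speaker: text" lines, using "Unknown" when no name is present.
function formatTranscript(messages) {
  return messages
    .map((m) => `${(m.from && m.from.name) || 'Unknown'}: ${m.text}`)
    .join('\n');
}
```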

```javascript
// Submit a text conversation to the Async API for processing.
// node-fetch v2 supports require(); v3 is ESM-only (Node 18+ also has a built-in fetch).
const fetch = require('node-fetch');

const url = 'https://api.symbl.ai/v1/process/text';
const accessToken = 'YOUR_ACCESS_TOKEN'; // replace with your Symbl access token

const options = {
  method: 'POST',
  headers: {
    accept: 'application/json',
    'content-type': 'application/json',
    authorization: `Bearer ${accessToken}`,
  },
  body: JSON.stringify({
    messages: [
      {
        payload: {
          content: "Hey, this is Amy calling from Health Insurance Company, I wanted to remind you of your pending invoice and dropping a quick note to make sure the policy does not lapse and you can pay the outstanding balance no later than July 30, 2023. Please call us back at +1 459 305 3949 and extension 5 urgently.",
          contentType: "text/plain",
        },
        from: {
          name: "Amy Brown (Customer Service)",
          userId: "[email protected]",
        },
        duration: {
          startTime: "2020-03-06T03:27:16.174Z",
          endTime: "2020-03-06T03:27:16.774Z",
        },
      },
    ],
    name: "ASYNC-1692941230681",
  }),
};

fetch(url, options)
  .then((res) => res.json())
  .then((json) => console.log(json))
  .catch((err) => console.error('error:' + err));
```
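When the text comes from a chat log or an existing transcript, the `messages` array in the text example can be generated rather than written by hand. This sketch mirrors the message shape in that sample (`payload`, `from`, `duration`). `toSymblMessages` and its input fields (`speaker`, `userId`, `text`, `start`, `end`) are hypothetical, and omitting `duration` when timestamps are unknown is an assumption to check against the API reference.

```javascript
// Hypothetical helper: convert simple chat turns into the message shape used by
// the /v1/process/text example (payload, from, duration).
function toSymblMessages(turns) {
  return turns.map((turn) => {
    const message = {
      payload: { content: turn.text, contentType: 'text/plain' },
      from: { name: turn.speaker, userId: turn.userId },
    };
    // Assumption: duration is optional, so it is only included when both timestamps are known.
    if (turn.start instanceof Date && turn.end instanceof Date) {
      message.duration = {
        startTime: turn.start.toISOString(),
        endTime: turn.end.toISOString(),
      };
    }
    return message;
  });
}

const messages = toSymblMessages([
  {
    speaker: 'Amy Brown (Customer Service)',
    userId: 'amy.brown@example.com', // hypothetical address
    text: 'Hi, I am calling about your pending invoice.',
    start: new Date('2020-03-06T03:27:16.174Z'),
    end: new Date('2020-03-06T03:27:16.774Z'),
  },
]);

console.log(JSON.stringify({ name: 'My Chat', messages }, null, 2));
```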

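```javascript
// Dial into a meeting over PSTN with the Telephony API (browser example using XMLHttpRequest).
const phoneNumber = 'PHONE_NUMBER'; // replace with the meeting's dial-in number
const emailAddress = 'EMAIL_ADDRESS'; // replace with the address that should receive the summary
const authToken = 'YOUR_ACCESS_TOKEN'; // replace with your Symbl access token

const payload = {
  operation: 'start',
  endpoint: {
    type: 'pstn',
    phoneNumber: phoneNumber,
    // Replace the placeholders with your meeting ID and passcode.
    dtmf: '{DTMF_MEETING_ID}#,,{MEETING_PASSCODE}#',
  },
  actions: [{
    invokeOn: 'stop',
    name: 'sendSummaryEmail',
    parameters: {
      emails: [emailAddress],
    },
  }],
  data: {
    session: {
      name: 'My Meeting',
    },
  },
};

const request = new XMLHttpRequest();
request.onload = function () {
  // Log the API response (HTTP status and body).
  console.log(request.status, request.responseText);
};
request.onerror = function () {
  console.error('Network error while calling endpoint:connect');
};
request.open('POST', 'https://api.symbl.ai/v1/endpoint:connect', true);
request.setRequestHeader('Authorization', `Bearer ${authToken}`);
request.setRequestHeader('Content-Type', 'application/json');
request.send(JSON.stringify(payload));
```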
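The `dtmf` string in the telephony payload encodes the keypad input sent after the call connects; in the sample, `#` ends each entry and the commas appear to insert pauses. The sketch below builds that string from a meeting ID and passcode, and also builds a stop request. It assumes a stop request is a POST to the same `endpoint:connect` URL with `operation: 'stop'` and the `connectionId` returned by the start call. `buildDtmf`, `buildStartPayload`, and `buildStopPayload` are hypothetical helpers.

```javascript
// Hypothetical helpers for the Telephony API payloads.

// Build a DTMF sequence in the same format as the sample:
// '{meetingId}#,,{passcode}#' ('#' ends each entry; ',' appears to add a pause).
function buildDtmf(meetingId, passcode, pauses = 2) {
  const digitsOnly = /^[0-9]+$/; // keypad digits only in this sketch
  if (!digitsOnly.test(meetingId) || !digitsOnly.test(passcode)) {
    throw new Error('meetingId and passcode must contain digits only');
  }
  return `${meetingId}#${','.repeat(pauses)}${passcode}#`;
}

function buildStartPayload({ phoneNumber, meetingId, passcode, summaryEmails = [], sessionName }) {
  return {
    operation: 'start',
    endpoint: { type: 'pstn', phoneNumber, dtmf: buildDtmf(meetingId, passcode) },
    actions: [{ invokeOn: 'stop', name: 'sendSummaryEmail', parameters: { emails: summaryEmails } }],
    data: { session: { name: sessionName } },
  };
}

// Assumed shape of a stop request: same endpoint, operation 'stop', plus the connectionId.
function buildStopPayload(connectionId) {
  return { operation: 'stop', connectionId };
}

const start = buildStartPayload({
  phoneNumber: '+15550100000', // hypothetical dial-in number
  meetingId: '1234567890',
  passcode: '4321',
  summaryEmails: ['you@example.com'],
  sessionName: 'My Meeting',
});
console.log(start.endpoint.dtmf); // 1234567890#,,4321#
```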

```javascript
// Stream audio from the browser with the Symbl Web SDK and log transcripts and questions.
const { Symbl } = window;

(async () => {
  try {
    const symbl = new Symbl({ accessToken: 'YOUR_ACCESS_TOKEN' });
    const connection = await symbl.createConnection();

    await connection.startProcessing({
      config: { encoding: 'OPUS' },
      speaker: { userId: '[email protected]', name: 'Symbl' },
    });

    // Live transcript updates
    connection.on('speech_recognition', (speechData) => {
      const { punctuated } = speechData;
      const name = speechData.user ? speechData.user.name : 'User';
      console.log(`${name}:`, punctuated.transcript);
    });

    // Questions detected in the conversation
    connection.on('question', (questionData) => {
      console.log('Question:', questionData['payload']['content']);
    });

    // Process for 60 seconds, then stop and disconnect.
    await Symbl.wait(60000);
    await connection.stopProcessing();
    connection.disconnect();
  } catch (e) {
    console.error('Streaming error:', e);
  }
})();
```

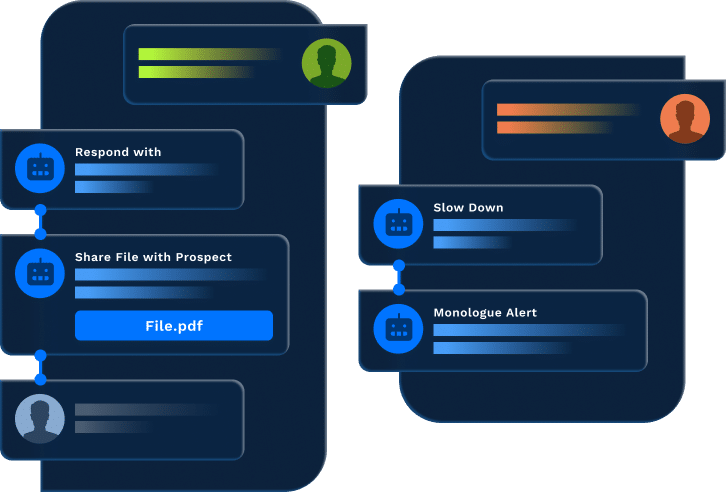

Build real-time voice AI experiences

Process audio streams with the Streaming API, detect triggers (questions, trackers, or topics) in the conversation, and extract contextual information based on those triggers. You can stream data directly to agents or to Nebula LLM to generate responses with low latency and high accuracy.
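The trigger-then-context pattern can be sketched independently of any SDK: keep a rolling window of recent transcript segments and, when a segment matches a trigger, hand that window to whatever generates the response. This is a simplified stand-in, not Symbl's implementation: triggers here are plain keyword phrases and a trailing `?` stands in for question detection, whereas the platform's question, tracker, and topic detection is model-based. `createTriggerWatcher` and `onTrigger` are hypothetical names; the segments could come from the `speech_recognition` events in the Web SDK example.

```javascript
// Hypothetical sketch: watch streaming transcript segments for triggers and
// pass the recent context to a handler (e.g. code that prompts an LLM).
function createTriggerWatcher({ phrases, windowSize = 5, onTrigger }) {
  const recent = []; // rolling window of the latest segments
  const lowered = phrases.map((p) => p.toLowerCase());

  return function onSegment(speaker, text) {
    recent.push(`${speaker}: ${text}`);
    if (recent.length > windowSize) recent.shift();

    const lower = text.toLowerCase();
    const matched = lowered.filter((p) => lower.includes(p));
    // Naive question check, standing in for model-based question detection.
    if (text.trim().endsWith('?')) matched.push('question');

    if (matched.length > 0) {
      onTrigger({ triggers: matched, context: recent.join('\n') });
    }
  };
}

const fired = [];
const onSegment = createTriggerWatcher({
  phrases: ['cancel', 'pricing'],
  windowSize: 3,
  onTrigger: (event) => fired.push(event),
});

onSegment('Customer', 'Thanks for the demo.');
onSegment('Agent', 'Happy to help.');
onSegment('Customer', 'What does the enterprise pricing look like?');
console.log(fired[0].triggers); // [ 'pricing', 'question' ]
```

Keeping the window small bounds how much text is forwarded per trigger, which matters when each forwarded context becomes part of an LLM prompt.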

Real-time Assist

Voice Bots

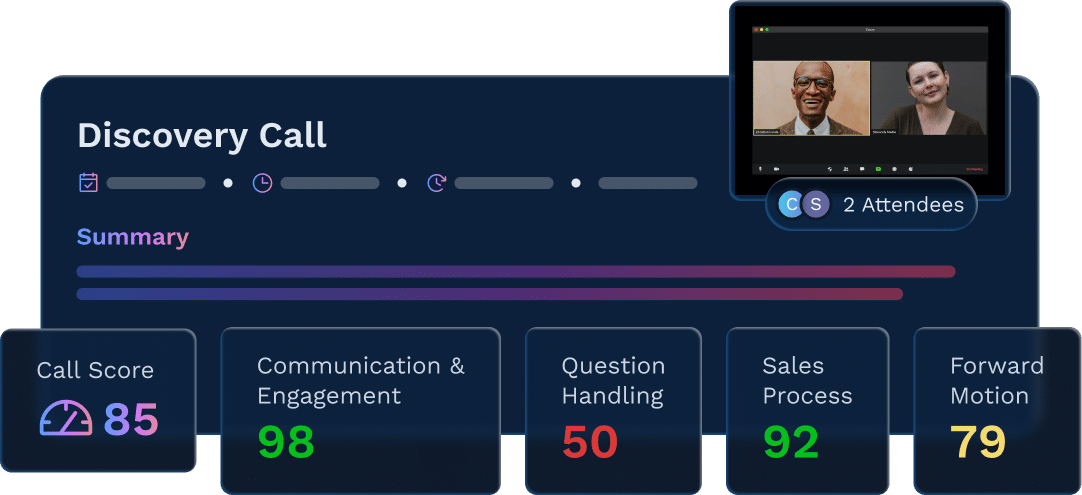

Call Score

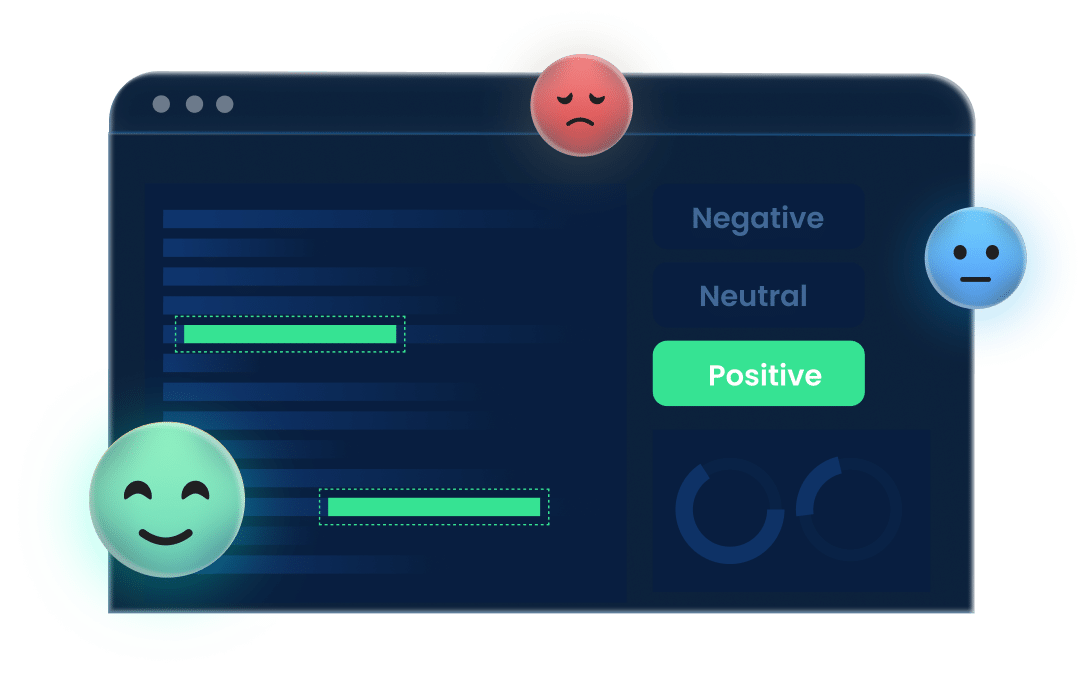

Live Sentiment Analysis

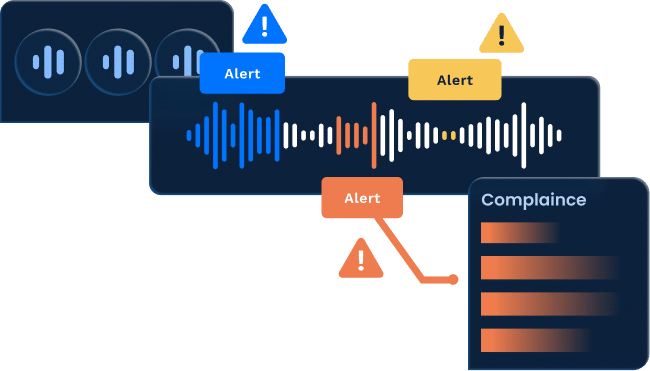

Real-time Alerts for Compliance

ROI From Solutions

- 30%: Improved Customer Sentiment
- 75%: Acceleration in Time to Market
- 50%: Improved Data Accuracy
- 28%: Acceleration in Revenue